Useful or Not: Declarative Self-improving Python

Quick Dive: An honest evaluation of where DSPy excels, what my implementation adds, and how you should (or shouldn't) use it

I was put to a question: Declarative Self-improving Python - how to use to create optimized prompts and pipelines that can replace our existing ways of doing things.

I started with learning as much as I can about DSPy. It was a lot. But great news - this article is a very concise report - just 2 sections (pros & cons and how-to-use)

CONTEXT

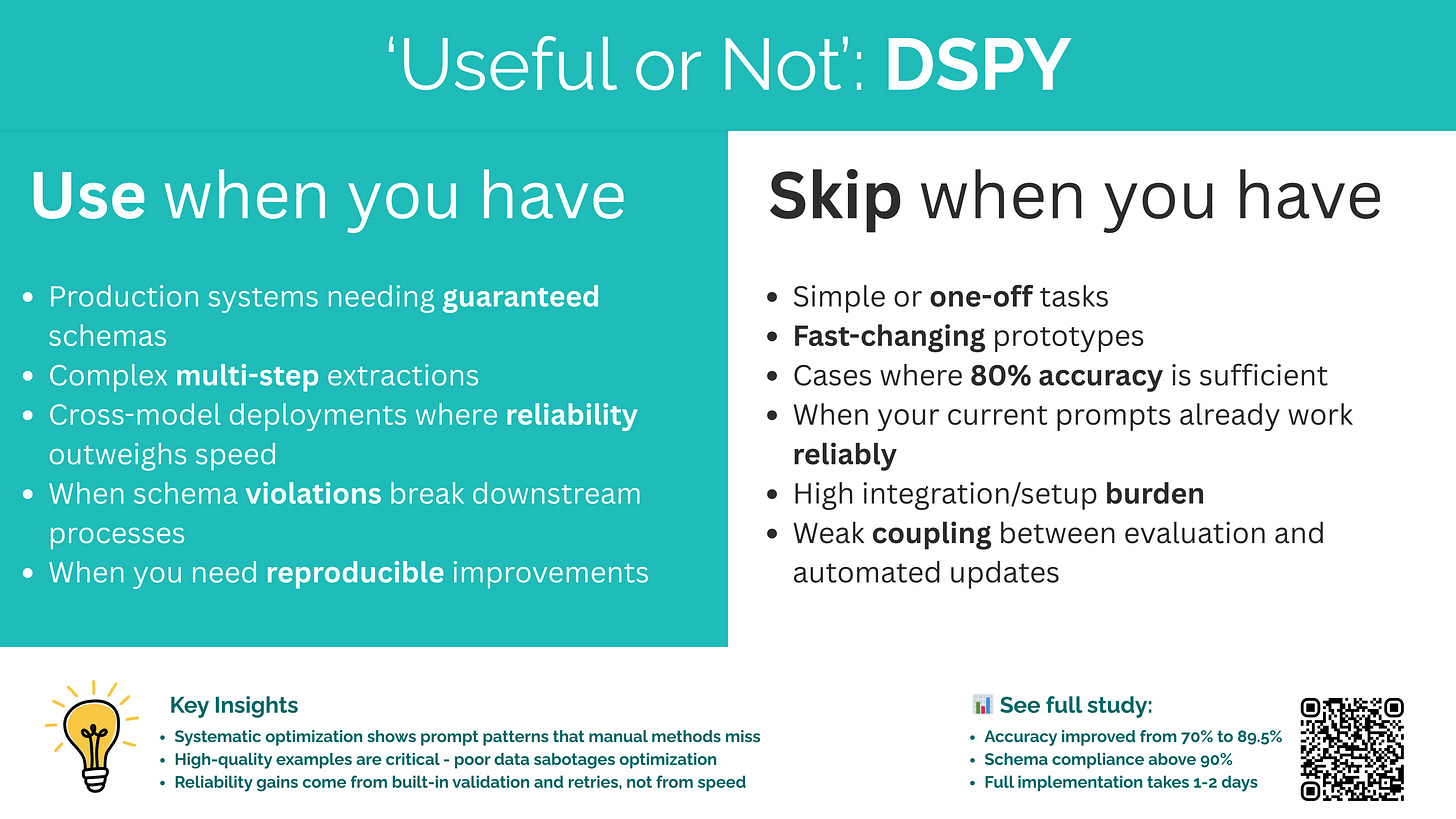

DSPy transforms prompt engineering into systematic optimization. You define success criteria, and DSPy uses automated search to discover the most effective approach. For structured outputs, this means uncovering prompt patterns that consistently produce correct schemas where manual methods fail.

Ok, so I decided to narrow it down. I posted a question in our company chat asking for examples of situations where more consistent and reliable AI performance would be valuable. I got a response:

A concrete example: generating machine-readable JSON. Different models often produce inconsistent outputs, which make automated parsing and downstream use harder.

So can DSPy could solve this problem? Spoiler - yes it could.

Benefits and Drawbacks

DSPy generally outperforms traditional prompt engineering when extracting structured data. Manual GPT-4 prompting delivers about 70–75% accuracy, rising to 85–90% schema compliance with retries and validation. DSPy’s basic setup lifts accuracy to 75–80%, and when paired with optimizers like BootstrapFewShot or MIPROv2, it reaches 80–85% accuracy with schema compliance consistently above 90%. The GEPA optimizer matches MIPROv2 but adds additional complexity.

Beyond accuracy, DSPy’s built-in validation, automatic retries, and Pydantic integration reduce debugging time and help ensure long-term reliability. It can detect subtle patterns that human-crafted prompts might miss and works well across different models. However, these benefits come with trade-offs. Setup typically takes 15–30 minutes, optimization 5–15 minutes.

DSPy excels when consistent, high-compliance structured extraction is required across varied inputs. For simple, one-off tasks, however, the extra cost and setup time make manual prompting a more practical choice.

How to Use DSPy Effectively

Start small. Test on a subset of your problem first. Don't commit fully until you've validated the approach works for your data. The dramatic improvement I saw - from 70% to 89.5% accuracy - came from DSPy exploring combinations I never would have tried manually.

Invest in quality examples. You need 20+ high-quality examples minimum. Bad examples lead to bad optimization. Spend the 2-3 hours to create them properly - this is the foundation DSPy builds on.

Understand the optimization process. DSPy doesn't guess randomly - it methodically learns from each attempt. What surprised me most was the transferability: optimizations that worked for one data format often improved others. But there's a ceiling - ambiguous data still fails no matter how much you optimize.

Practical implementation tips:

Plan for multiple optimization rounds - first attempts rarely perfect

Expect total time investment of 1-2 days (understanding DSPy: 1 day, setup: 2-4 hours, creating examples: 2-3 hours, optimization: 30-60 minutes per run)

Monitor what DSPy actually does - it's systematic optimization, not magic

Managing expectations: DSPy cannot fix ambiguous requirements, turn weak models into strong ones, or work without good examples. It's a tool for systematic improvement, not a silver bullet.

Production considerations: While Praveen's evaluation showed RL frameworks aren't production-ready, DSPy is already deployed at companies like Databricks and Moody's. It's mature enough for real use, but remember: the value comes from long-term reliability, not quick wins.

My verdict after extensive testing: For structured outputs requiring guaranteed schemas, DSPy delivers. The 100% schema compliance alone justified the investment. But success depends on your willingness to invest upfront time and your specific data requirements.

If you can spend 1-2 days and need guaranteed structure, DSPy will transform your reliability.

If you need speed or simplicity, look elsewhere. The systematic optimization finds solutions manual prompting never will - but only if you're solving a problem that needs that level of rigor.

This conclusion is based on extensive testing with real-world data. Your results will depend on your specific use case and data quality.

Nice new format!

Ephor link - https://labs.ephor.ai/join/177bb7b3