Synthesizing a New Architectural Paradigm

The discourse around AI agents has reached a fever pitch. In the span of a few months, the community has moved from foundational theory to a high-stakes architectural debate, with major labs seemingly taking opposing sides. This whirlwind of publications exposes the fundamental questions we face as we push beyond simple chatbots toward truly autonomous systems.

The stage was set early in the year. On January 14th, LangChain introduced the concept of "Ambient Agents," proposing a shift away from the reactive "chat UX" toward persistent agents that respond to ambient signals and operate in the background. This vision of proactive, always-on systems was further developed on May 27th when Orin published "Building proactive AI agents," detailing the architectural patterns needed to create agents that take responsibility, manage their own schedules, and learn over time. These pieces laid the groundwork, defining the ambition for what next-generation agents could be.

The debate ignited in mid-June. On June 12th, Cognition AI, the creators of Devin, published a provocative article titled "Don't Build Multi-Agents." They argued that the tempting architecture of parallel sub-agents is inherently fragile, doomed by context isolation that leads to conflicting decisions and unusable results. The very next day, on June 13th, Anthropic released a detailed account of their own system, "How we built our multi-agent research system," presenting a powerful counter-narrative. They demonstrated how a lead "orchestrator" agent delegating to parallel sub-agents was not only viable but essential for scaling performance on complex research tasks.

The community immediately seized upon the clash. That same day, June 13th, smol.ai’s AINews newsletter framed the Cognition vs. Anthropic exchange as the central debate in agent architecture. By June 19th, the conversation had reached the highest echelons of AI research. In the Latent Space podcast, "Scaling Test Time Compute to Multi-Agent Civilizations," OpenAI's Noam Brown revealed that his new team is focused heavily on the multi-agent paradigm, framing it as a crucial step toward "AI Civilizations" and a necessary evolution beyond the limits of single agents.

This rapid, public discourse reveals not a simple right-or-wrong answer, but a nuanced roadmap for building the next generation of AI. By synthesizing these perspectives, we can identify the core challenges and, more importantly, the specific engineered solutions required to build robust and scalable multi-agent systems. This analysis directly informs the evolution of my own work: it shows how the principles validated by this debate can enhance the Multi-Agent Deep Research Architecture I proposed in February, which is now evolving into a new system, "Nexus Agents."

The Central Debate: Fragility vs. Scalability

The Case Against (Cognition): Cognition's core argument is that multi-agent systems, especially those with parallel sub-agents, are inherently fragile due to context isolation. Their "Flappy Bird" example is illustrative: when the task of building a Flappy Bird clone is split up, one sub-agent builds a background resembling Super Mario Bros. while another builds a bird that doesn't look like a game asset, because neither sub-agent shares the context of the other's actions or implicit design decisions. Small misunderstandings like these compound into unusable results. Their proposed solution favors a single-threaded, linear agent for reliability.

The Case For (Anthropic & OpenAI): The primary benefit of multi-agent systems is not just task division but scaling performance by scaling token usage and parallelism. For complex research, a single agent's context window is a hard limit. Anthropic's system addresses this by giving each sub-agent its own context window: its multi-agent setup (a Claude Opus 4 lead agent with Claude Sonnet 4 sub-agents) outperformed a single-agent Claude Opus 4 by 90.2% on Anthropic's internal research evaluation, with the largest gains on breadth-first queries. Noam Brown at OpenAI takes this further, envisioning a future where "AI Civilizations" are the key to superintelligence, a scale unachievable with single agents.

Synthesis: A Framework for Reliable Multi-Agent Systems

The path forward lies in capturing the scalability benefits described by Anthropic and OpenAI while rigorously addressing the fragility concerns raised by Cognition. The articles collectively provide a comprehensive set of strategies.

Challenge: Context & Coordination

Problem: Sub-agents operate in isolation, leading to conflicting work.

Strategies & Solutions:

Orchestrator-Worker Pattern (Anthropic): A lead agent acts as a project manager, explicitly prompted to delegate with detail: define a clear objective, output format, and task boundaries for each sub-agent.

Explicit Scaling Rules (Anthropic): Embed heuristics in the orchestrator's prompt to scale effort based on query complexity.

Interleaved Thinking (Anthropic): Sub-agents must be prompted to evaluate tool results, identify gaps, and refine their next query, making them adaptive.
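A minimal Python sketch of the first two strategies follows. The `SubagentTask` fields mirror the delegation checklist above (objective, output format, task boundaries, plus preferred tools), and the effort tiers in `SCALING_RULES` are loosely modeled on the ranges Anthropic describes; treat them as illustrative. All names here are hypothetical, and the rendered strings would be sent to whatever model client the system actually uses.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical effort tiers, loosely modeled on the ranges in Anthropic's post.
SCALING_RULES = {
    "simple fact-finding": "1 sub-agent, 3-10 tool calls",
    "direct comparison": "2-4 sub-agents, 10-15 tool calls each",
    "complex research": "10+ sub-agents with clearly divided responsibilities",
}


@dataclass
class SubagentTask:
    """A self-contained brief, so the worker never relies on unstated context."""
    objective: str
    output_format: str
    boundaries: List[str] = field(default_factory=list)  # explicitly out of scope
    tools: List[str] = field(default_factory=list)  # tools or sources to prefer

    def to_prompt(self) -> str:
        lines = [f"Objective: {self.objective}",
                 f"Output format: {self.output_format}"]
        if self.boundaries:
            lines.append("Do NOT cover: " + "; ".join(self.boundaries))
        if self.tools:
            lines.append("Preferred tools: " + ", ".join(self.tools))
        return "\n".join(lines)


def orchestrator_prompt(query: str) -> str:
    """Embed the scaling heuristics directly in the lead agent's instructions."""
    rules = "\n".join(f"- {kind}: {budget}" for kind, budget in SCALING_RULES.items())
    return (
        "You are the lead research agent. Classify the query, then size the effort:\n"
        f"{rules}\n"
        "For every sub-agent, state an objective, an output format, and task boundaries.\n\n"
        f"Query: {query}"
    )
```

Keeping the brief explicit is the point of the pattern: a worker that receives only this text has no unstated design decisions to silently inherit or contradict, which is the failure mode behind Cognition's Flappy Bird example.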

Challenge: Persistence & Proactivity (The Ambient Layer)

Problem: Systems are often "one-and-done," lacking the ability to evolve.

Strategies & Solutions:

Ambient & Proactive Design (LangChain, Orin): Shift the paradigm from a reactive "tool" to a proactive "service."

LLM-Managed Scheduling (Orin): Implement a dynamic control loop where the agent itself decides how long to "sleep" before its next proactive run.

Wake Events (Orin): Combine proactive schedules with reactive triggers (e.g., new information, user feedback) for immediate responsiveness.
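The scheduling and wake-event ideas combine naturally into one control loop. The sketch below is an illustration under assumptions, not Orin's implementation: `run_iteration` stands for one proactive pass, `decide_sleep_seconds` for the LLM call that picks the next delay, and a `threading.Event` lets a reactive trigger cut the sleep short.

```python
import threading
from typing import Callable, Optional


class AmbientLoop:
    """Proactive loop: the agent chooses its own sleep; wake events cut it short."""

    def __init__(self, run_iteration: Callable[[], None],
                 decide_sleep_seconds: Callable[[], float]) -> None:
        self.run_iteration = run_iteration                # one proactive pass (hypothetical)
        self.decide_sleep_seconds = decide_sleep_seconds  # e.g. asks an LLM
        self._wake = threading.Event()
        self._stop = threading.Event()

    def wake(self) -> None:
        """Reactive trigger, e.g. new information or user feedback."""
        self._wake.set()

    def stop(self) -> None:
        self._stop.set()
        self._wake.set()  # release any pending sleep immediately

    def run(self, max_iterations: Optional[int] = None) -> int:
        """Run until stopped (or max_iterations passes); return the pass count."""
        runs = 0
        while True:
            self._wake.clear()  # wakes arriving during the pass below are kept
            if self._stop.is_set():
                break
            self.run_iteration()
            runs += 1
            if max_iterations is not None and runs >= max_iterations:
                break
            # Returns early if wake() or stop() is called; otherwise sleeps the full delay.
            self._wake.wait(timeout=self.decide_sleep_seconds())
        return runs
```

Because the wake flag is cleared at the start of each pass, a signal that arrives while the agent is working is not lost: the following sleep returns immediately and the new information is handled on the next pass.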

Challenge: Memory & Context Overflow

Problem: Long-running tasks inevitably exceed model context windows.

Strategies & Solutions:

Decaying-Resolution Memory (DRM) (Orin): Maintain high-resolution memory for the recent past and use an LLM to create progressively lower-resolution summaries for older periods.

Stateful, Deterministic Tools (Orin): Offload memory needs to tools. An agent doesn't need to remember calendar events if it has a calendar tool it can query.

External Memory & Checkpoints (Anthropic): The lead agent saves its research plan and key findings to an external database to prevent context loss and allow resumption from failure.
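For the checkpointing strategy, here is a small sketch that uses Python's built-in `sqlite3` module as the external database. The table layout and the `CheckpointStore` class are hypothetical; the only idea carried over from the text is that the lead agent's plan and findings live outside its context window, so a crashed or context-truncated run can resume from its latest step instead of starting over.

```python
import json
import sqlite3
from typing import Any, Dict, List, Optional, Tuple


class CheckpointStore:
    """Hypothetical external memory for the lead agent's plan and findings."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)  # use a file path for real durability
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "run_id TEXT, step INTEGER, state TEXT, PRIMARY KEY (run_id, step))"
        )

    def save(self, run_id: str, step: int, plan: List[str], findings: List[str]) -> None:
        state = json.dumps({"plan": plan, "findings": findings})
        with self.db:  # the connection context manager commits on success
            self.db.execute(
                "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
                (run_id, step, state),
            )

    def resume(self, run_id: str) -> Optional[Tuple[int, Dict[str, Any]]]:
        """Latest (step, state) for a run, or None if it never checkpointed."""
        row = self.db.execute(
            "SELECT step, state FROM checkpoints WHERE run_id = ? "
            "ORDER BY step DESC LIMIT 1",
            (run_id,),
        ).fetchone()
        return None if row is None else (row[0], json.loads(row[1]))
```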

Challenge: Evaluation & Reliability

Problem: The non-deterministic nature of multi-agent systems makes traditional evaluation, which checks that the same steps are followed on every run, impractical.

Strategies & Solutions:

Flexible, Outcome-Based Evaluation (Anthropic): Judge the final outcome against a rubric, not the specific path taken.

LLM-as-Judge (Anthropic): Use a capable LLM with a detailed rubric to evaluate outputs at scale.

Human Evaluation is Essential (Anthropic): Manual testing remains critical to catch subtle biases and edge cases.

Rainbow Deployments (Anthropic): To avoid breaking stateful agents, deploy updates by gradually shifting traffic from old versions to new ones while both run simultaneously.
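Rainbow deployments hinge on one rule: a running agent keeps talking to the version it started on, while new sessions are spread across versions by adjustable weights. The router below is a hypothetical in-process sketch of that rule; a real deployment would enforce it at the load balancer or job scheduler.

```python
import random
from typing import Dict, Optional


class RainbowRouter:
    """Pin each agent session to the version it started on; route only new
    sessions according to adjustable per-version traffic weights."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self.weights: Dict[str, float] = {}
        self.pinned: Dict[str, str] = {}  # session_id -> version
        self._rng = random.Random(seed)

    def set_weight(self, version: str, weight: float) -> None:
        self.weights[version] = weight

    def route(self, session_id: str) -> str:
        if session_id in self.pinned:  # in-flight agents never switch versions
            return self.pinned[session_id]
        versions = [v for v, w in self.weights.items() if w > 0]
        chosen = self._rng.choices(versions, weights=[self.weights[v] for v in versions])[0]
        self.pinned[session_id] = chosen
        return chosen

    def finish(self, session_id: str) -> None:
        self.pinned.pop(session_id, None)

    def can_retire(self, version: str) -> bool:
        """Safe to shut down once a version gets no new traffic and no session uses it."""
        return self.weights.get(version, 0) == 0 and version not in self.pinned.values()
```

Shifting traffic then means lowering the old version's weight step by step; it is shut down only when `can_retire` reports that no in-flight session still depends on it.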

Nexus Agents Architecture

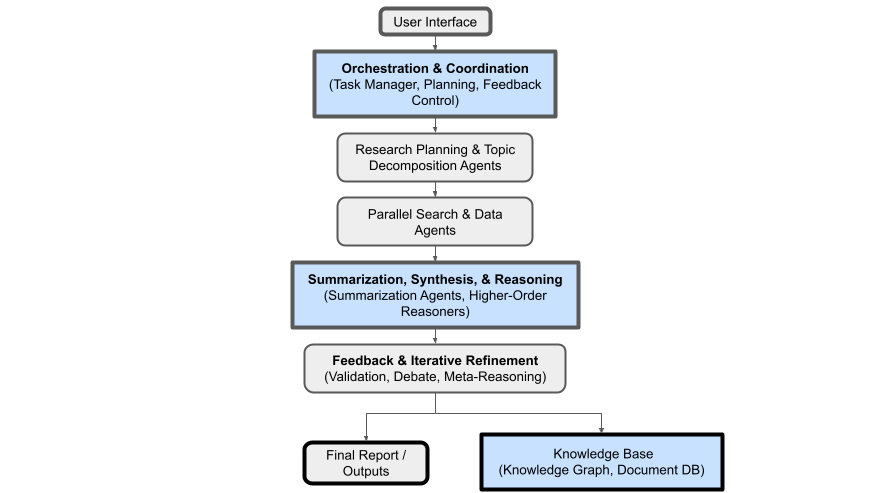

This vibrant discourse provides the perfect context for revisiting and refining my own architectural proposal from February. My Multi-Agent Deep Research Architecture was conceived as a multi-agent system specifically for continuous, deep research. The goal was to create "living documents" that evolve with new information, moving beyond the one-shot nature of most existing AI tools. The initial design anticipated many of the challenges and patterns now at the forefront of the community debate. What follows is an analysis of how that original architecture aligns with recent industry findings and a detailed plan for enhancing it with the synthesized principles discussed above.

Points of Alignment Between My Architecture and Anthropic's System

My original architecture document correctly identified the foundational pillars of a robust multi-agent research system, aligning almost perfectly with Anthropic's later publication:

Orchestration & Coordination: Together, my Task Manager and Communication Bus play the same role as Anthropic's lead agent (orchestrator).

Hierarchical & Parallel Processing: My concept of a Topic Decomposer Agent creating a "Tree of Thoughts" and spawning parallel Search Agents closely matches the architecture Anthropic implemented.

Specialized Agent Roles: I defined distinct agents for decomposition, searching, summarizing, and reasoning, mirroring Anthropic's use of specialized sub-agents.

Persistence & Living Documents: My vision of a Knowledge Base to create evolving artifacts is the core goal that distinguishes these systems.

Human-in-the-Loop & Continuous Augmentation: My "continuous mode" and inclusion of human feedback were direct precursors to the proactive and ambient agent concepts detailed by Orin and LangChain.

Actionable Enhancements for Nexus Agents Architecture

Based on this synthesis, I can significantly enhance the original blueprint to make it more robust, dynamic, and powerful.

1. Evolve the Orchestration Layer into a Proactive Core:

Modification: The Task Manager's "continuous mode" will be upgraded to an Ambient Control Loop.

Implementation: Instead of a simple periodic trigger, this loop will be driven by an LLM-managed schedule (per Orin). The Task Manager will ask a meta-agent, "Based on the current state of research, when should the next iteration run?" It will also incorporate Wake Event Listeners on the Communication Bus that can interrupt the sleep cycle if high-priority new information arrives.
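Letting an LLM choose the schedule needs guard rails, since a model can return malformed text or an unreasonable interval. The sketch below shows one way the Task Manager might phrase the question and sanitize the answer; the JSON reply format and the minimum, maximum, and default values are assumptions for illustration.

```python
import json

MIN_SLEEP_MIN = 15               # hypothetical guard rails, in minutes
MAX_SLEEP_MIN = 7 * 24 * 60
DEFAULT_SLEEP_MIN = 24 * 60

SCHEDULE_PROMPT = (
    "Based on the current state of research, when should the next iteration run? "
    'Reply with JSON only: {"sleep_minutes": <int>, "reason": "<short text>"}'
)


def parse_sleep_minutes(reply: str) -> int:
    """Turn the meta-agent's reply into a safe, bounded sleep interval."""
    try:
        minutes = int(json.loads(reply)["sleep_minutes"])
    except (ValueError, KeyError, TypeError, OverflowError):
        return DEFAULT_SLEEP_MIN  # malformed reply: fall back, never crash the loop
    return max(MIN_SLEEP_MIN, min(MAX_SLEEP_MIN, minutes))
```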

2. Formalize the Human-in-the-Loop (HITL) Interface:

Addition: Introduce a new component: an Agent Inbox & Review Service.

Implementation: This service (per LangChain) provides a dedicated UI for managing agent interactions. When an agent needs to involve a human (to Notify, ask a Question, or request a Review), it publishes a request to the Communication Bus. The Agent Inbox service presents this to the user and routes feedback back to the requesting agent.
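The request-and-route flow can be sketched as a small in-memory service. `AgentInbox`, its methods, and the one-callback-per-agent routing are hypothetical stand-ins for messages on the Communication Bus; only the three request kinds come from the description above.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import count
from typing import Callable, Dict, List, Optional


class Kind(Enum):
    NOTIFY = "notify"      # FYI only, no reply expected
    QUESTION = "question"  # agent needs an answer to continue
    REVIEW = "review"      # agent wants approval or edits before acting


@dataclass
class InboxItem:
    id: int
    agent_id: str
    kind: Kind
    body: str
    reply: Optional[str] = None


class AgentInbox:
    """In-memory stand-in for the Agent Inbox & Review Service (hypothetical)."""

    def __init__(self) -> None:
        self._ids = count(1)
        self.items: Dict[int, InboxItem] = {}
        self._callbacks: Dict[str, Callable[[InboxItem], None]] = {}

    def register(self, agent_id: str, on_reply: Callable[[InboxItem], None]) -> None:
        self._callbacks[agent_id] = on_reply

    def publish(self, agent_id: str, kind: Kind, body: str) -> int:
        item = InboxItem(next(self._ids), agent_id, kind, body)
        self.items[item.id] = item
        return item.id

    def pending(self) -> List[InboxItem]:
        """What the UI shows the user: unanswered questions and reviews."""
        return [i for i in self.items.values()
                if i.kind is not Kind.NOTIFY and i.reply is None]

    def respond(self, item_id: int, reply: str) -> None:
        item = self.items[item_id]
        item.reply = reply
        self._callbacks[item.agent_id](item)  # route feedback to the requester
```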

3. Implement an Advanced Memory Management System:

Modification: Augment the Knowledge Base with a dedicated Decaying-Resolution Memory (DRM) Cache.

Implementation: The core Knowledge Base (composed of a Document Database and Graph Database) remains the permanent, structured store of facts. The DRM Cache (per Orin) will store the conversational and operational context of research tasks. When an agent is spawned, it receives high-resolution context from recent steps and lower-resolution summaries of older steps from the DRM, ensuring it always has the most relevant information without context overflow.
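One possible DRM policy is sketched below: the newest steps stay verbatim, and older steps fall into buckets that double in size the further back they go, each collapsed into a single summary. The doubling rule and the `drm_context` function are assumptions; the text only requires that resolution decreases with age. `summarize` would be an LLM call in practice and is passed in as a plain function here.

```python
from typing import Callable, List

Summarizer = Callable[[List[str]], str]  # an LLM call in practice


def drm_context(history: List[str], summarize: Summarizer,
                recent: int = 4, first_bucket: int = 4) -> List[str]:
    """Decaying-resolution view of a task history, oldest entry first.

    The newest `recent` steps are kept verbatim. Older steps are grouped into
    buckets that double in size going back in time, and each bucket is
    collapsed into a single summary, so older periods get coarser resolution.
    """
    if first_bucket < 1:
        raise ValueError("first_bucket must be at least 1")
    split = max(len(history) - recent, 0)
    older, newest = history[:split], history[split:]
    summaries: List[str] = []
    end, size = len(older), first_bucket
    while end > 0:  # walk backwards from the most recent summarized step
        start = max(end - size, 0)
        summaries.append(summarize(older[start:end]))
        end, size = start, size * 2  # each earlier bucket spans twice as many steps
    return summaries[::-1] + newest
```

In a real cache, the bucket summaries would be stored and recomputed only when a bucket's contents change, rather than regenerated every time an agent is spawned.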

4. Adopt Emerging Standards for Agent Communication and Tool Use:

Modification: Specify the protocols running on the Communication Bus and used by agents for tools.

Implementation:

Agent tool use: To decouple agents from specific tool implementations, the system should adopt the Model Context Protocol (MCP), the de facto standard for tool use, wherever possible. Instead of building custom clients, the Agent Spawner can simply provide an agent with an MCP endpoint for a given tool, promoting modularity and interoperability.

Inter-agent collaboration: The Communication Bus will adopt a standardized messaging format. This could be an implementation of the Agent2Agent (A2A) Protocol or, for more advanced capabilities, the emerging Coral Protocol. While A2A focuses on standardizing the messages exchanged between agents, Coral provides a higher-level framework for coordination and trust.
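On the tool side, MCP messages are JSON-RPC 2.0, and invoking a tool is a `tools/call` request carrying the tool `name` and its `arguments`. The helpers below only build and unpack those messages. Transport, the initialization handshake, and `tools/list` discovery are deliberately omitted; an official MCP SDK would normally handle them.

```python
import itertools
import json
from typing import Any, Dict

_ids = itertools.count(1)  # JSON-RPC request ids


def mcp_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request (a JSON-RPC 2.0 message)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def mcp_result_text(response: str) -> str:
    """Concatenate the text content blocks of a `tools/call` response."""
    msg = json.loads(response)
    if "error" in msg:  # JSON-RPC protocol-level error
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    result = msg["result"]
    text = "\n".join(block["text"] for block in result.get("content", [])
                     if block.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text)
    return text
```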

5. Integrate a Dedicated Evaluation & Observability Layer:

Addition: Create a new top-level layer for Automated Evaluation & Observability.

Implementation: After Artifact Generation, an LLM-as-Judge Agent (per Anthropic) scores the output using a predefined rubric. These results are logged and fed back to the Orchestration Layer's meta-reasoning components to adjust strategies in future runs (e.g., "the system consistently fails on financial data; prioritize the 'Financial' search agent").
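A minimal sketch of the scoring-and-feedback path follows. The `judge` callable stands in for a single LLM call that returns a 0 to 1 score per criterion. The criteria are loosely based on those Anthropic lists for its research evaluations, while the pass threshold, the per-domain log, and `weakest_domain` are illustrative assumptions about how results could feed back to the Orchestration Layer.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List, Optional, Tuple

# Criteria loosely based on those Anthropic describes for its research evals.
RUBRIC = ["factual_accuracy", "citation_accuracy", "completeness",
          "source_quality", "tool_efficiency"]

Judge = Callable[[str, List[str]], Dict[str, float]]  # (artifact, rubric) -> scores in [0, 1]


class EvalLog:
    """Hypothetical evaluation log that turns judge scores into feedback."""

    def __init__(self, judge: Judge, pass_threshold: float = 0.7) -> None:
        self.judge = judge  # in practice, one LLM call returning JSON scores
        self.pass_threshold = pass_threshold
        self.scores: Dict[str, List[float]] = defaultdict(list)  # domain -> overall scores

    def evaluate(self, domain: str, artifact: str) -> Tuple[float, bool]:
        per_criterion = self.judge(artifact, RUBRIC)
        overall = mean(per_criterion[c] for c in RUBRIC)
        self.scores[domain].append(overall)
        return overall, overall >= self.pass_threshold

    def weakest_domain(self, min_runs: int = 3) -> Optional[str]:
        """Feedback for the orchestrator: which domain to prioritize next run."""
        eligible = {d: mean(s) for d, s in self.scores.items() if len(s) >= min_runs}
        return min(eligible, key=eligible.get) if eligible else None
```

Requiring a minimum number of runs before naming a weak domain keeps a single unlucky artifact from redirecting the whole system.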

The principles and enhancements detailed here are not merely theoretical; they form the blueprint for the Nexus Agents system, which is currently under active development. I look forward to sharing the implementation and results with the community soon. Stay tuned.

From Debate to a New Design Paradigm

What began as a seemingly binary debate ("to build multi-agent or not") has rapidly evolved into a sophisticated and unified engineering paradigm. The question is no longer if we should build multi-agent systems, but how we build them to be both scalable and reliable. The answer is not to choose between Cognition's caution and Anthropic's ambition, but to synthesize them. We must embrace parallel, multi-agent architectures to overcome the fundamental limits of single-model context, but do so with the rigorous engineering discipline required to manage their inherent complexity.

The path forward is clear. It requires a new class of agent systems architected around proactive, ambient control loops. They must feature robust orchestration that delegates with precision, advanced memory management that scales sub-linearly, and stateful tools that serve as a deterministic extension of the agent's mind. Critically, these systems demand a new approach to evaluation: one that is outcome-based, LLM-assisted, and relentlessly validated by human oversight.

This moment marks an inflection point in AI development. We are moving past the era of building clever prompts for monolithic models and into the era of engineering complex, stateful systems. The "last mile" of creating production-ready applications is now the central challenge, and as this discourse proves, it is through open debate, shared learnings, and iterative design that we will build the truly autonomous, reliable, and intelligent agentic systems of the future.