Evaluating Agent Systems and Human AI Fluency (Part 2)

Assessing Human Readiness and Synergies in Human-AI Evaluation

The Human Element in the Agentic AI Era

Building upon the challenges in evaluating AI agent capabilities, reliability, and coordination discussed in Part 1, this second part shifts focus to the equally critical human dimension of agentic systems. As AI agents become more integrated into our workflows and daily lives, their effectiveness and safety depend significantly on the ability of humans to interact with them appropriately. This necessitates a clear understanding and assessment of Human AI Fluency or AI Literacy—the constellation of skills, knowledge, and critical perspectives required to use, manage, and collaborate with AI responsibly.

This part explores the emerging landscape of frameworks designed to define and measure human AI fluency (2023-2025). We will examine the core competencies identified—ranging from practical skills like prompt engineering and tool selection to crucial abilities like understanding AI limitations, verifying outputs, and navigating ethical considerations. Furthermore, we will delve deeper into the synergy between human AI competencies and desirable AI agent capabilities, highlighting how insights from evaluating one can inform the development and assessment of the other. Finally, we present recommendations focused on developing robust methods for assessing human fluency and integrating this understanding into a holistic view of human-AI system performance.

Human AI Literacy and Fluency Frameworks & Connection to Agent Benchmarking

As AI agents become more capable and integrated into various aspects of life and work, there is a parallel imperative to develop a human workforce that is "AI-fluent" – possessing the necessary competencies to understand, interact with, and harness AI tools effectively and responsibly. "AI literacy" or "AI fluency" encompasses the skills and knowledge enabling people to work alongside AI, analogous to how computer literacy enabled interaction with PCs. Researchers, educational institutions, and industry leaders have begun outlining the competencies constituting AI fluency and proposing frameworks to define and assess these skills. This section reviews these frameworks (from 2023–2025), focusing on practical competencies relevant in professional settings, and explores the crucial, yet underexplored, connection between these human skills and the capabilities evaluated in AI agent benchmarks.

Defining AI Literacy and Fluency

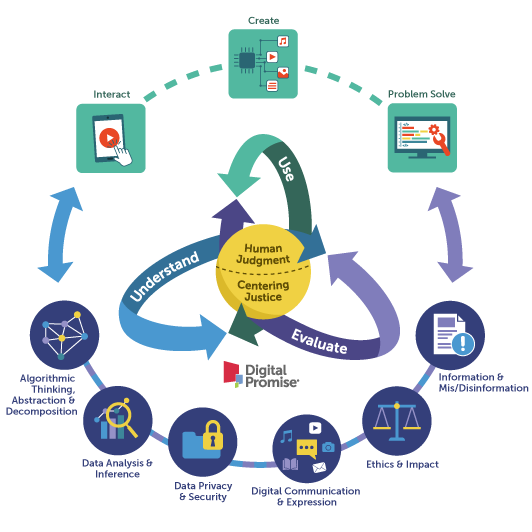

The Digital Promise framework (2024) defines AI literacy as the ability to Understand, Evaluate, and Use AI systems critically, safely, and ethically. An AI-fluent individual should grasp AI's capabilities and limitations, interpret and assess the trustworthiness of AI outputs, and apply AI tools appropriately to solve problems. This involves:

Understand: Comprehending fundamental AI concepts (data usage, algorithms, pattern recognition) and how AI systems develop associations and predictions.

Evaluate: Critically assessing AI systems regarding transparency (data, methods), safety (privacy, security), ethics (bias, equity), and impact (credibility, efficacy), ensuring human judgment remains central.

Use: Engaging with AI through various modes like interacting with AI-driven features, creating content with generative AI, or even applying/developing AI systems.

Related concepts include "AI readiness," which adds mindset and awareness, emphasizing the need for employees to understand AI's strengths and weaknesses for appropriate deployment – a baseline understanding now seen as fundamental across roles.

Key Competencies for Professionals

Recent frameworks and industry analyses highlight several concrete skills valued by employers:

Prompt Engineering (Prompt Design): The ability to craft effective inputs (prompts) for AI systems, especially generative models, to elicit desired outputs. This includes phrasing requests clearly, providing necessary context, structuring prompts (e.g., step-by-step reasoning), and iterating based on results. This skill saw a surge in demand across technical and non-technical roles (e.g., marketing, HR) by late 2023, often featuring in job interviews with expectations that candidates can articulate their prompting strategies. AI fluency here is often role-specific, with engineers focusing on code generation/debugging prompts and product managers on user story drafting, but the core skill remains consistent.

Tool Selection and Integration: Knowing which AI or traditional tool is appropriate for a given task and potentially integrating multiple tools. As the AI landscape diversifies (chatbots, code assistants, analysis tools), professionals need to select judiciously based on task requirements and tool capabilities (e.g., choosing between a general LLM and a specialized tool, knowing API limitations like context length). AI-fluent professionals act somewhat like orchestrators, assigning sub-tasks to the right tools or deciding against AI use when appropriate. This is often tested via scenario questions in interviews.

Understanding Model Limitations and Verification: Possessing a realistic understanding of AI's limitations (e.g., hallucinations, biases, lack of domain expertise) and developing a habit of critically verifying AI outputs. AI-fluent individuals are skeptical by default, double-checking critical information, testing AI-generated code, validating summaries against sources, or recognizing implausible outputs. This aligns strongly with the 'Evaluate' component of literacy frameworks and is emphasized in corporate upskilling programs. Demonstrating awareness of failure modes and proactive verification is key.

Data and Domain Knowledge Symbiosis: Understanding that AI systems often require context and data, and knowing how to prepare and provide appropriate inputs. This involves data literacy and the ability to format information effectively for the AI. This competency merges AI usage with traditional domain expertise, as the user must know their field well enough to provide relevant context and judge the sensibility of the AI's output.

Ethical and Responsible AI Use: Understanding and applying principles of AI ethics, including awareness of potential biases, fairness concerns, privacy implications, and security risks. In practice, this involves adhering to organizational policies (e.g., regarding sensitive data) and actively considering the potential for AI systems to perpetuate or create inequities. This is a core component in frameworks like UNESCO's (emphasizing human-centered mindset and ethical governance) and Digital Promise's 'Evaluate' dimension.

Formal AI Literacy Frameworks

Several structured frameworks formalize these competencies:

Digital Promise Framework: Structures literacy around Understanding, Using, and Evaluating AI, emphasizing critical engagement.

UNESCO AI Competency Framework for Teachers (and Students): Provides a comprehensive global standard outlining competencies across dimensions like Human-centred Mindset, Ethics, AI Foundations, Pedagogy, and Professional Learning, with defined progression levels (Acquire, Deepen, Create).

Other Frameworks: Institutions like EDUCAUSE (focusing on Technical Understanding, Evaluative Skills, Practical Application, Ethical Considerations) and initiatives like the AI Literacy Institute adapt existing literacy models. Related efforts exist in specific domains (leadership, cybersecurity, finance) and through organizations like the OECD.

These frameworks consistently highlight the need for foundational understanding, practical application skills, and, critically, the capacity for ethical and evaluative judgment regarding AI systems and their outputs.

Mapping Human AI Competencies to Agent Benchmarking

Interestingly, the skills required for human AI fluency show direct parallels and potential synergies with the capabilities being evaluated in benchmarks for AI agents, yet also reveal significant gaps:

Prompt Engineering vs. Agent Communication/Prompting: Human prompt engineering skill mirrors an AI agent's need to formulate effective prompts for itself (planning) or messages (prompts) for other agents/tools. Evaluating an agent's communication quality (clarity, sufficiency of context, iterative refinement) in benchmarks like MultiAgentBench or our proposed dual-layer design is analogous to assessing human prompt skill. Human best practices (e.g., chain-of-thought) could inform agent evaluation, and vice-versa.

Tool Selection vs. Agent Task Routing: An AI-fluent human choosing the right tool parallels a coordinator agent routing tasks to appropriate specialist agents/APIs. While current benchmarks touch upon this, explicitly scoring an agent's optimal tool/agent selection (as in our proposed dual-layer evaluation) mirrors assessments of human competency in this area. Both humans and agents need accurate models of available capabilities (cf. A2A protocol's capability profiles). Agent benchmark metrics related to Task Completion Rate and Efficiency evaluate the outcome of agent 'Use'.

Understanding Limitations & Verification vs. Agent Self-Monitoring & Robustness: Humans must critically evaluate AI outputs and understand limitations precisely because current agents often lack robust reliability. This human competency directly addresses the capability-reliability gap in AI. AI Fluency requires understanding that high capability doesn't mean consistent correctness and developing verification habits. Agent benchmarks assessing self-awareness, uncertainty quantification, and proactive verification are needed to measure the agent side of this equation.

Collaboration & Ethics vs. Agent Teamwork & Ethical Alignment: Human AI fluency involves collaborating effectively with AI ("robot-friendly colleague") and adhering to ethical norms. This mirrors multi-agent systems requiring effective agent-to-agent collaboration (facilitated by protocols like A2A) and alignment with ethical guidelines. Agent benchmarks are beginning to measure collaboration quality, but metrics for Fairness, Bias Detection, Privacy, and Explainability / Interpretability (crucial for human ethical evaluation and understanding) remain underdeveloped or uncommon in standard benchmarks.

The Disconnect & The Human Validator Role: A significant gap exists: agent benchmarks focus heavily on performance/capability, often neglecting metrics (transparency, explainability, uncertainty reporting, consistent reliability) crucial for the human 'Evaluate' competency. In practice, the AI-fluent human often acts as the final validator and reliability check, making their ability to critically assess agent limitations and outputs absolutely essential for safe and effective use. Benchmarks need to provide the data (e.g., reliability scores, confidence intervals) that empower this human oversight role.
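One way a benchmark could surface the reliability data mentioned above is to report a success rate together with a confidence interval over repeated runs. The following is a minimal sketch, not a method taken from any benchmark named here; the function name `wilson_interval` and the example counts are illustrative assumptions. It uses the Wilson score interval, a standard choice for binomial proportions with small sample sizes.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial success rate (z=1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    )
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical example: an agent solved 18 of 20 repeated runs of one task.
low, high = wilson_interval(18, 20)
print(f"observed success rate: {18 / 20:.2f}, 95% interval: [{low:.2f}, {high:.2f}]")
```

Reporting the interval alongside the headline rate lets a human validator see how much evidence sits behind the number before deciding how much verification an output needs.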

Assessing Human AI Fluency

As AI fluency becomes a critical competency across roles, effective assessment methods are needed, moving beyond self-assessment towards practical evaluation. The approaches are evolving rapidly:

Educational Settings: Often utilize rubrics aligned with frameworks (like Digital Promise or UNESCO), evaluating student understanding through practical projects, presentations, and potentially portfolio reviews demonstrating AI interaction and critical evaluation. Self-reporting might supplement these methods.

Professional Settings & General Recruiting (Prompt Engineering Focus): Companies increasingly incorporate AI fluency checks into hiring processes for a wide range of roles (marketing, HR, project management, etc.), recognizing prompt engineering as a foundational skill. Assessment methods include:

Scenario-Based Interviews: Asking candidates behavioral questions ("Describe a time you used an AI tool to solve a complex problem. What was your process?") or situational questions ("How would you use an LLM to draft customer communications for situation X?").

Live or Take-Home Performance Tasks (General Roles):

Prompt Crafting: Provide a role-relevant scenario (e.g., marketing brief, data summary need) and ask the candidate to write 2-3 distinct prompts for an LLM to generate suitable outputs. Evaluation focuses on clarity, context inclusion, constraint specification, and tailoring to the desired output format.

Prompt Critique & Refinement: Present a flawed prompt and its poor AI output. Ask the candidate to diagnose the failure and rewrite the prompt, justifying their changes. Evaluation assesses analytical reasoning and effective refinement strategies.

Comparative Prompting: Ask for prompts targeting different outcomes from the same core task (e.g., creative vs. factual, concise vs. detailed). Evaluation tests understanding of targeted prompt design.

Specialized Skills Tests & Standardized Exams: Services like Glider AI have begun developing dedicated skills tests, particularly for prompt engineering, often involving observed problem-solving using standard AI tools within a timed environment. The development of more comprehensive, standardized "AI fluency exams" is a needed future direction. These could present candidates with a scenario and access to multiple AI tools (e.g., LLM chatbot, code assistant, data analysis tool), scoring them on:

Appropriate Tool Selection: Did they choose the right tool(s) for the sub-tasks?

Effective Prompting Strategy: Quality, clarity, and iterative refinement of prompts used.

Result Verification: Did they critically evaluate the AI outputs? Did they identify and attempt to correct errors or inconsistencies?

Efficiency: How effectively did they leverage the tools to achieve the goal within constraints?
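The four scoring dimensions above could be combined into a single rubric score. The sketch below is purely illustrative: the 0-4 rating scale, the weights, and the names `RubricScore` and `weighted_score` are assumptions, not part of any existing exam.

```python
from dataclasses import dataclass

# Hypothetical weights; a real exam would calibrate these per role.
WEIGHTS = {
    "tool_selection": 0.25,
    "prompting_strategy": 0.25,
    "result_verification": 0.35,
    "efficiency": 0.15,
}
MAX_RATING = 4  # assumed 0-4 rating scale per criterion


@dataclass
class RubricScore:
    tool_selection: int
    prompting_strategy: int
    result_verification: int
    efficiency: int

    def weighted_score(self) -> float:
        """Return a 0-100 score from the per-criterion ratings."""
        total = 0.0
        for criterion, weight in WEIGHTS.items():
            rating = getattr(self, criterion)
            if not 0 <= rating <= MAX_RATING:
                raise ValueError(f"{criterion} must be in 0..{MAX_RATING}")
            total += weight * rating / MAX_RATING
        return round(100 * total, 1)


# Hypothetical ratings assigned by an assessor after observing one candidate.
candidate = RubricScore(tool_selection=3, prompting_strategy=4,
                        result_verification=2, efficiency=3)
print(candidate.weighted_score())
```

Weighting result verification most heavily is one possible design choice that reflects the emphasis placed here on the 'Evaluate' competency; other roles might justify a different balance.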

Recruiting Engineers (AI-Assisted Software Development Focus)

Evaluating engineers requires assessing their ability to leverage AI tools within software development, emphasizing critical thinking, process-oriented skills, and human-AI collaboration. The following tasks evaluate proficiency with agentic IDEs and AI-assisted programming:

AI-Augmented Coding Task

Candidates use an agentic IDE (e.g., Cursor, Windsurf) to implement a feature, refining AI-generated code to meet production standards. Evaluation focuses on prompting, critique, refinement, and code quality.

AI-Assisted Debugging Challenge

Candidates diagnose and fix buggy code using an agentic IDE, leveraging AI suggestions. Evaluation centers on diagnosis, AI use, and verification.

AI Code Review Task

Candidates review AI-generated code, identifying flaws (e.g., logic errors, security issues). Evaluation assesses critical analysis and AI-specific failure awareness.

Agentic IDE Workflow Simulation

Candidates complete a development workflow in a simulated agentic IDE, implementing a feature through:

Planning and Task Decomposition: Define product requirements and technical architecture, break down tasks, and set milestones. Create documentation artifacts that will be used in implementation stages.

Prompt Crafting and Iteration: Craft and refine prompts for code, tests, or debugging.

Code Implementation and AI Collaboration: Generate and enhance AI-assisted code.

Progress Tracking: Monitor milestones using documents to plan and update status of tasks.

Regression Prevention and Validation: Leverage AI to generate automated tests, refining prompts for comprehensive coverage. Critically evaluate and enhance tests, complementing with static analysis or manual reviews. Validate test effectiveness.

Debugging: Resolve bugs with AI and independent judgment.

Documentation: Produce clear, AI-assisted documentation for maintainability.

AI-Assisted Programming Knowledge Assessment

Candidates answer open-ended questions on agentic IDE best practices (e.g., “How do you plan before implementing functionality?”). Evaluation assesses proper use of a Product Requirements Document, progress tracking, regression mitigation, etc.

Scenario-Based Tool Choice

Candidates justify agentic IDE usage for a scenario, balancing AI reliance with reliability. Evaluation assesses decision-making and tool applicability.

Process-Oriented Assessment

Evaluation tracks:

Prompt crafting and iteration.

Strategic AI tool use.

Critical validation of outputs.

Criteria include efficiency, collaboration, and validation rigor.

Scoring Rubrics

Rubrics prioritize:

Critical evaluation of AI outputs.

Strategic and tactical use of agentic IDEs.

Process quality in human-AI workflows.

This ensures focus on judgment, expertise, and effective AI integration.

Research Directions for Assessment: Developing robust, validated, and scalable assessment methods for AI fluency across diverse roles remains a key research area. This includes creating standardized task libraries, developing reliable scoring rubrics for performance tasks (especially evaluating the process of human-AI interaction, not just the output), exploring simulation-based assessments, and ensuring assessments are fair and minimize bias. Furthermore, research is needed on how best to assess the crucial "Evaluate" competency – particularly the ability to recognize and mitigate AI unreliability and ethical risks in practical scenarios.

Human AI-fluency frameworks converge on the importance of effective usage (prompting, tool selection), critical oversight (evaluation of limitations, verification), and ethical considerations. These competencies map closely to desired agent capabilities (communication, routing, self-monitoring, alignment), presenting an opportunity. Aligning human AI literacy training with agent design principles, and evolving agent benchmarks to measure characteristics crucial for human evaluation (like safety, fairness, explainability), can create a positive feedback loop, fostering more effective and responsible human-AI systems.

Recommendations and Research Agenda

Complementing the recommendations for improving agent benchmarks in Part 1, this set focuses on assessing human AI fluency and integrating human factors into the overall evaluation picture:

Develop and Standardize Human AI-Fluency Assessments:

Create standardized, role-specific AI fluency tests for humans, moving beyond self-reporting towards practical, performance-based assessments.

These tests should involve AI-augmented tasks (e.g., using AI for research, analysis, creation) and evaluate key competencies: effective prompting, appropriate tool selection, critical verification of AI outputs (recognizing unreliability), handling AI errors, and applying ethical considerations.

Foster collaboration between industry, academia, and certification bodies to establish recognized assessment methods and benchmarks for human skills.

Create and Utilize Human-AI Interaction Benchmarks:

Develop "cross-over" benchmarks where humans and AI agents collaborate directly on meaningful tasks.

Evaluate these interactions holistically, measuring not only task outcome but also the process: AI usability, adaptability, clarity of communication, alongside human effectiveness in delegation, trust calibration (avoiding over/under-reliance), and critical oversight.

Use these benchmarks to test the practical reliability and usability of AI systems in realistic collaborative settings and identify friction points in human-AI teaming.
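Trust calibration in such a benchmark could be quantified from logged decisions. As a rough sketch under assumed definitions (the `Decision` record and both rate definitions are hypothetical, not drawn from an existing benchmark), over-reliance is counted when a participant accepts an incorrect AI output, and under-reliance when they reject a correct one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    ai_correct: bool      # was the AI output actually correct?
    human_accepted: bool  # did the participant accept it?


def reliance_rates(decisions: list[Decision]) -> dict[str, float]:
    """Compute over- and under-reliance rates from logged decisions."""
    wrong = [d for d in decisions if not d.ai_correct]
    right = [d for d in decisions if d.ai_correct]
    # Over-reliance: share of incorrect AI outputs the human accepted anyway.
    over = sum(d.human_accepted for d in wrong) / len(wrong) if wrong else 0.0
    # Under-reliance: share of correct AI outputs the human rejected.
    under = sum(not d.human_accepted for d in right) / len(right) if right else 0.0
    return {"over_reliance": over, "under_reliance": under}


# Hypothetical session log for one participant.
log = [
    Decision(ai_correct=True, human_accepted=True),
    Decision(ai_correct=True, human_accepted=False),
    Decision(ai_correct=False, human_accepted=True),
    Decision(ai_correct=False, human_accepted=False),
    Decision(ai_correct=False, human_accepted=False),
    Decision(ai_correct=True, human_accepted=True),
]
rates = reliance_rates(log)
print(rates)
```

Tracking the two rates separately matters because a participant can look accurate overall while still accepting most of the AI's errors.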

Align Agent Benchmark Design with Human Evaluation Needs:

Ensure agent benchmarks generate data relevant to human AI literacy. Specifically, incorporate and report metrics on agent reliability, consistency, uncertainty estimation, explainability, fairness, and safety guarantees, allowing humans to practice and validate their 'Evaluate' competencies.

Benchmark transparency should allow users to understand why an agent succeeded or failed, informing their mental models and interaction strategies.
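To make the distinction between capability and consistent reliability concrete, a benchmark report could include both a mean success rate and the share of tasks solved on every repeated run. A minimal sketch, assuming a hypothetical results layout (a task id mapped to a list of pass/fail outcomes); the function name `summarize_runs` and the metric names are illustrative:

```python
def summarize_runs(results: dict[str, list[bool]]) -> dict[str, float]:
    """Summarize repeated-run outcomes per task.

    mean_success: average pass rate over all runs (a capability view).
    all_runs_pass: fraction of tasks passed on every run (a consistency view).
    """
    if not results or any(len(runs) == 0 for runs in results.values()):
        raise ValueError("every task needs at least one run")
    total_runs = sum(len(runs) for runs in results.values())
    total_passes = sum(sum(runs) for runs in results.values())
    consistent = sum(all(runs) for runs in results.values())
    return {
        "mean_success": total_passes / total_runs,
        "all_runs_pass": consistent / len(results),
    }


# Hypothetical outcomes: three tasks, four runs each.
results = {
    "task_a": [True, True, True, True],
    "task_b": [True, False, True, True],
    "task_c": [True, True, False, True],
}
summary = summarize_runs(results)
print(summary)
```

In this made-up example the agent passes most individual runs, yet only one of three tasks is solved every time, which is the kind of gap a human reviewer needs to see when deciding how closely to check outputs.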

Promote Education and Transparency Rooted in Evaluation Insights:

Disseminate findings from both agent benchmarks and human fluency assessments accessibly. Highlight common agent failure modes (especially reliability issues) and effective human mitigation strategies.

Use evaluation results to inform best practices for AI developers (building more reliable, understandable agents) and training curricula for end-users (focusing on critical engagement and verification).

Foster an Interdisciplinary Community for Unified Evaluation:

Establish collaborations that explicitly bring together AI researchers, benchmark creators, HCI experts, educators defining AI literacy, ethicists, and social scientists.

Collaborate on updating evaluation criteria for both agents and human fluency, co-designing interaction benchmarks, and developing a shared understanding of effective and responsible human-AI systems.

Towards Effective and Responsible Human-AI Partnership

The journey towards harnessing the full potential of agentic AI requires a sophisticated understanding of both the technology and the humans who wield it. As explored across this two-part series, significant progress has been made in developing benchmarks to evaluate increasingly complex AI agents, yet critical gaps remain in assessing multi-agent coordination, reliability, cost-effectiveness, and adherence to interoperability standards crucial for real-world deployment (Part 1). Addressing these requires evolving benchmarks significantly, notably through approaches like the proposed Dual-Layer Evaluation framework.

However, technological proficiency alone is insufficient. Part 2 highlighted the parallel need to define, measure, and cultivate Human AI Fluency. Effective human interaction demands competencies in prompting, tool selection, ethical judgment, and, critically, the ability to understand and verify outputs from systems that are powerful but inherently unreliable. The synergy between these human skills and desirable agent capabilities – where robust agents support human verification and fluent humans guide agent actions effectively – is central to successful integration.

Therefore, the path forward necessitates a unified and synchronized research agenda. We must advance agent benchmarks to measure not just capability but reliable, cost-effective, and transparent performance within standardized ecosystems. Concurrently, we must develop robust assessments for human AI fluency and design human-AI interaction benchmarks that evaluate the collaborative system holistically. By fostering an interdisciplinary community committed to this integrated vision (where insights from agent limitations inform human training, and understanding human needs shapes agent design and evaluation) we can steer the development of AI towards systems that are not only technically advanced but are truly ready to function as effective, safe, and trustworthy partners for humanity.

References

Altis Technology. “Must-Have AI Skills for Today’s Job Seekers.” Altis Technology Blog, 2024.

Brodnitz, Dan. “A New Framework for AI Upskilling Across Your Organization.” LinkedIn Talent Blog, LinkedIn, 2023.

Cole, Joseph. “AI Prompt Engineering Skills.” Glider AI Blog, 31 Mar. 2025.

Gonzalez, Leonardo. “Evaluating Agent Systems and Human AI Fluency (Part 1).” Trilogy AI Center of Excellence, 22 Apr. 2025.

Lee, Keun-woo, et al. “AI Literacy: A Framework to Understand, Evaluate, and Use Emerging Technology.” Digital Promise, 18 Jun. 2024.