Executive Summary

On September 8, 2025, X (formerly Twitter) open-sourced its recommendation algorithm, bringing the public codebase in line with production and committing to biweekly updates. By November, the company aims to move to a fully AI-powered, customizable timeline.

This is the most significant transparency move we’ve seen from a major social platform. But one paradox remains:

Transparency without model weights, training data, or live signals isn’t true accountability.

The next step isn’t just waiting for platforms to disclose more. It’s building independent AI systems to audit how these algorithms behave in the wild.

The Transparency Paradox

What X released (Sept 2025):

Feed ranking architecture

Updated engagement weighting (replies, author responses, quality likes emphasized)

Biweekly updates pledged going forward

What’s still missing:

Training datasets

Model weights

Moderation and trust & safety filters

Full live configuration

This means researchers can’t exactly replicate the feed experience. The kitchen is cleaner, but the recipe is still private.

What X Actually Disclosed

X’s recommendation system follows a three-stage pipeline:

Candidate Sourcing – narrow hundreds of millions of tweets to ~1,500.

Ranking – score each candidate with a 48M-parameter neural net.

Filtering – apply visibility rules, content balance, and moderation.
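The three stages above can be pictured with a toy sketch. Everything here is an illustrative assumption rather than X’s code: the `Post` fields, the function names, the per-author cap used as a stand-in for "content balance", and the precomputed `predicted_score` that replaces the neural net.

```python
from dataclasses import dataclass
from itertools import islice
from typing import Dict, Iterable, List


@dataclass
class Post:
    post_id: int
    author: str
    predicted_score: float  # stand-in for the ranking model's output
    flagged: bool = False   # stand-in for a moderation/visibility decision


def source_candidates(pool: Iterable[Post], limit: int = 1500) -> List[Post]:
    """Stage 1: cut a large pool down to at most `limit` candidates.

    A real system would use retrieval models; this toy version just
    takes the first `limit` posts from the pool.
    """
    return list(islice(pool, limit))


def rank(candidates: List[Post]) -> List[Post]:
    """Stage 2: order candidates by score, highest first."""
    return sorted(candidates, key=lambda p: p.predicted_score, reverse=True)


def filter_posts(ranked: List[Post], max_per_author: int = 2) -> List[Post]:
    """Stage 3: drop flagged posts and cap how many posts one author gets."""
    per_author: Dict[str, int] = {}
    kept: List[Post] = []
    for post in ranked:
        if post.flagged:
            continue
        count = per_author.get(post.author, 0)
        if count >= max_per_author:
            continue
        per_author[post.author] = count + 1
        kept.append(post)
    return kept


def build_timeline(pool: Iterable[Post], limit: int = 1500,
                   max_per_author: int = 2) -> List[Post]:
    """Run the three stages in order."""
    return filter_posts(rank(source_candidates(pool, limit)), max_per_author)
```

The point of the sketch is the ordering: filtering runs after ranking, so a high-scoring post can still be removed by a visibility rule that the public code does not fully specify.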

The most striking detail remains the engagement weights, expressed here relative to a retweet at 1x:

Author reply = 75x

Reply = 13.5x

Profile click + engagement = 12x

Retweet = 1x

Like = 0.5x

Negative feedback (blocks, reports, “show less”) = heavy penalties

In the September 2025 update, X emphasized prioritizing “productive” engagement and penalizing negative signals. But the paradox holds: once these weights are public, AI systems can game them just as easily as study them.
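To make the weights listed above concrete, here is a minimal sketch that assumes the final score is a weighted sum of predicted engagement probabilities. That combination rule, the function name, and the negative-feedback weight are assumptions for illustration; the list above gives only the positive weights and describes the penalties as "heavy".

```python
from typing import Dict

# Positive weights as quoted in the article.
ENGAGEMENT_WEIGHTS: Dict[str, float] = {
    "author_reply": 75.0,
    "reply": 13.5,
    "profile_click": 12.0,
    "retweet": 1.0,
    "like": 0.5,
}

# Hypothetical placeholder: the article does not give a number for penalties.
NEGATIVE_FEEDBACK_WEIGHT = -50.0


def engagement_score(probabilities: Dict[str, float],
                     negative_weight: float = NEGATIVE_FEEDBACK_WEIGHT) -> float:
    """Weighted sum of per-signal probabilities (assumed scoring rule).

    `probabilities` maps a signal name to the predicted chance that a
    viewer performs that action. Unknown signal names raise KeyError.
    """
    score = 0.0
    for signal, probability in probabilities.items():
        if signal == "negative_feedback":
            weight = negative_weight
        else:
            weight = ENGAGEMENT_WEIGHTS[signal]
        score += weight * probability
    return score
```

Under these assumptions, a post with a 2% reply chance and a 5% like chance scores about 0.295, while a post with a 0.2% reply chance and a 20% like chance scores about 0.127. A small shift toward replies outweighs a large shift toward likes, which is exactly what makes the weights attractive to game.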

AI Changes the Transparency Equation

In 2019, a partial release like this would have meant little beyond commentary.

In 2025, AI makes it actionable. Generative and agentic systems can:

Infer and probe ranking behavior

Generate controlled content variants to test hypotheses

Automate feedback loops, updating beliefs in real time

Instead of treating X’s release as the end of the transparency conversation, we can treat it as raw input for AI-driven, independent audits.
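One way to read "updating beliefs" is as a simple Bayesian update. The sketch below is an assumption about how an audit agent might track a single claim, using a Beta-Bernoulli model in which each paired comparison either supports the claim or does not. The class name and the uniform prior are illustrative choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about the probability that a claim holds in one comparison.

    alpha counts supporting outcomes plus a prior pseudo-count,
    beta counts contradicting outcomes plus a prior pseudo-count.
    The default (1, 1) is a uniform prior.
    """
    alpha: float = 1.0
    beta: float = 1.0

    def update(self, supporting: int, contradicting: int) -> "BetaBelief":
        """Return a new belief after observing a batch of comparisons."""
        if supporting < 0 or contradicting < 0:
            raise ValueError("counts must be non-negative")
        return BetaBelief(self.alpha + supporting, self.beta + contradicting)

    @property
    def mean(self) -> float:
        """Posterior mean of the probability that the claim holds."""
        return self.alpha / (self.alpha + self.beta)
```

Starting from the uniform prior, seven supporting and three contradicting comparisons move the posterior mean from 0.5 to 8/12, roughly 0.67. Each new batch nudges the belief further, which is the feedback loop in miniature.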

Where AI Fits In

Here’s how AI makes this shift concrete:

Variant generation: LLMs can create semantically equivalent posts with controlled structural differences (reply-seeking vs like-seeking).

Hypothesis-driven probes: Encode specific claims (e.g., “author replies boost visibility”) as testable experiments.

Automated loops: Agents can post, observe, collect metrics, and update hypotheses iteratively.

Cross-model validation: Different AI systems can evaluate content effects independently, reducing bias.

AI makes it possible to treat X’s transparency drops as inputs into a continuous, reproducible audit cycle.
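As a rough illustration of "encoding a claim as a testable experiment", the sketch below stores a hypothesis together with the single factor being varied, the metric to compare, and the variants. All names, and the short list of observable metrics, are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List

# Assumed set of publicly countable metrics; the names are illustrative.
OBSERVABLE_METRICS = {"replies", "reposts", "likes"}


@dataclass(frozen=True)
class Variant:
    label: str  # short name for the condition, e.g. "reply_seeking"
    text: str   # the post text for that condition


@dataclass
class Hypothesis:
    claim: str   # plain-language statement being tested
    factor: str  # the one thing that differs between variants
    metric: str  # the outcome that will be compared
    variants: List[Variant] = field(default_factory=list)

    def problems(self) -> List[str]:
        """List the reasons this hypothesis cannot be tested yet."""
        issues: List[str] = []
        if self.metric not in OBSERVABLE_METRICS:
            issues.append(f"metric '{self.metric}' is not observable")
        if len(self.variants) < 2:
            issues.append("need at least two variants to compare")
        labels = [v.label for v in self.variants]
        if len(set(labels)) != len(labels):
            issues.append("variant labels must be unique")
        return issues

    def is_testable(self) -> bool:
        return not self.problems()
```

A hypothesis such as "reply-seeking phrasing draws more replies than like-seeking phrasing" would carry two variants and the metric `replies`. One that depends on a signal outside the observable set is rejected before any probe is designed around it.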

How an AI Audit Framework Could Work

Pipeline concept:

Hypothesis definition

Example: “Posts with meaningful author replies gain disproportionately higher reach.”

Variant generation

AI generates multiple post variants that isolate a single factor (e.g., CTA wording).

Probe execution

Variants could be tested in simulation, or compared against naturally occurring posts that differ only in structure. Automated posting at scale would violate X’s terms of service, but the framework illustrates how AI could support independent auditing if safe and sanctioned pathways were provided.

Metrics collection

Observable signals (replies, reposts, likes, negative feedback, profile clicks).

Evaluation

Compare variant outcomes, compute effect sizes, log into reproducible reports.

Iteration

Hypotheses are updated, retired, or confirmed as new data arrives.
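The evaluation step in the pipeline above can be sketched with a standard effect-size measure. This example uses Cohen’s d with a pooled standard deviation, which is one common choice and not something the pipeline prescribes. The function names and the report fields are illustrative assumptions.

```python
from math import sqrt
from statistics import mean, variance
from typing import Dict, Sequence


def cohens_d(treatment: Sequence[float], control: Sequence[float]) -> float:
    """Standardized mean difference using the pooled sample variance."""
    n1, n2 = len(treatment), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    if pooled_var == 0:
        raise ValueError("no variation in the data; effect size undefined")
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def evaluate(metric: str, treatment: Sequence[float],
             control: Sequence[float]) -> Dict[str, object]:
    """Build a small, loggable report for one variant comparison."""
    return {
        "metric": metric,
        "n_treatment": len(treatment),
        "n_control": len(control),
        "mean_difference": mean(treatment) - mean(control),
        "cohens_d": cohens_d(treatment, control),
    }
```

For reply counts of 2, 4, 6 in one group and 1, 3, 5 in the other, the mean difference is 1 and d is 0.5. With samples this small the estimate is very noisy, so a real audit would also report an interval or a significance test before confirming or retiring a hypothesis.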

Guardrails for Responsible Auditing

Such a system would need strong boundaries:

No brigading, sock-puppets, or manipulative campaigns.

Only benign, non-controversial content.

Transparent disclosure of assumptions and methods.

Kill-switches if tests degrade user experience.

The goal is measurement, not manipulation.
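A kill-switch can be as simple as a running check on negative feedback. The sketch below is one hypothetical form of that guardrail: the thresholds, field names, and the choice of negative-feedback rate as the trigger are all assumptions, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class ProbeGuard:
    """Halts a probe once the negative-feedback rate crosses a threshold."""
    max_negative_rate: float = 0.01  # hypothetical threshold
    min_impressions: int = 100       # wait for this much data before judging
    impressions: int = 0
    negative_feedback: int = 0
    halted: bool = False

    def record(self, impressions: int, negative_feedback: int) -> bool:
        """Add a batch of observations; return True if the probe may continue."""
        if self.halted:
            return False  # once tripped, the switch stays off
        self.impressions += impressions
        self.negative_feedback += negative_feedback
        if self.impressions >= self.min_impressions:
            rate = self.negative_feedback / self.impressions
            if rate > self.max_negative_rate:
                self.halted = True
        return not self.halted
```

The switch is deliberately one-way: once a probe is halted, later clean batches do not restart it, so resuming requires a human decision.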

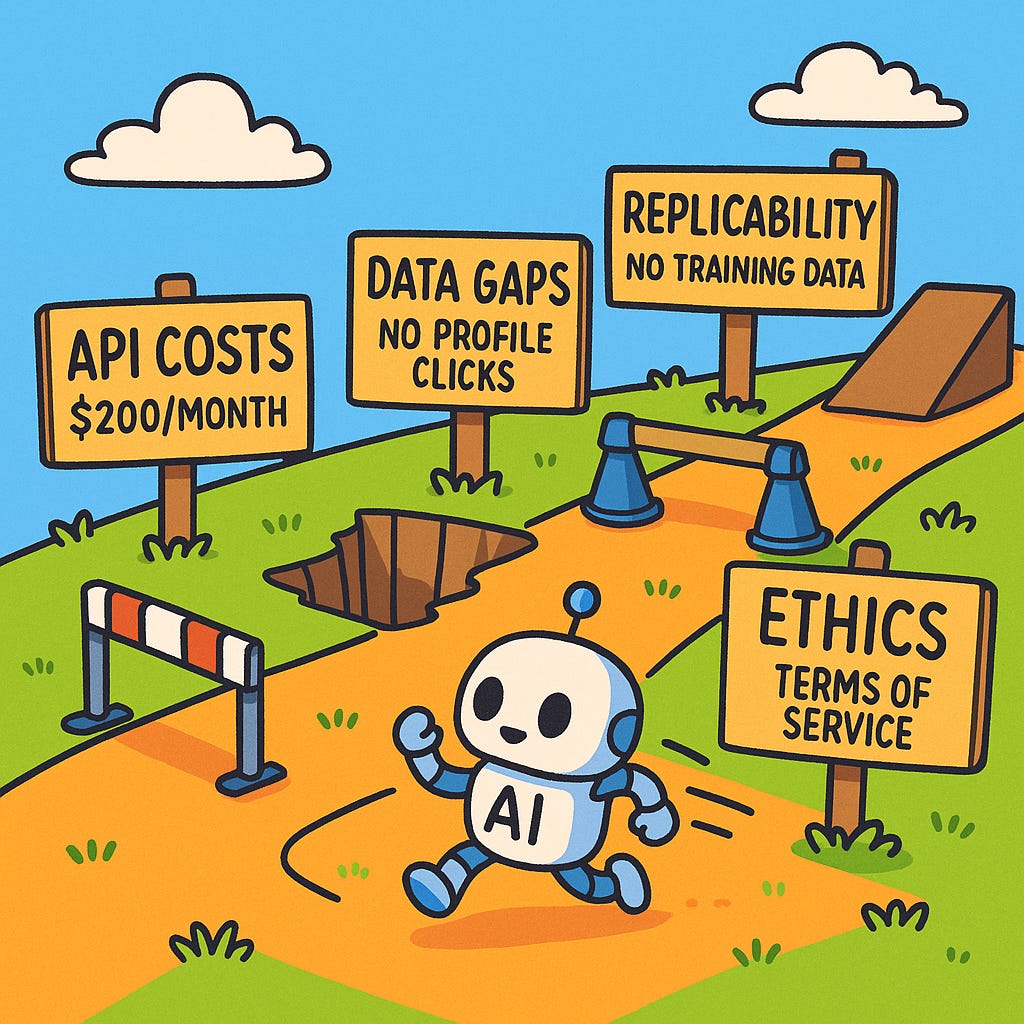

There Are Some Blockers

As promising as this framework sounds, several real-world challenges stand in the way:

API access costs: Even X’s $200/month basic API tier provides very limited access to historical or large-scale engagement data. Running systematic probes would quickly exceed those limits.

Data availability: Some signals (e.g., profile clicks, “show less” actions) aren’t exposed through APIs at all. Alternative methods like RSS feeds or scraping can capture content, but they don’t provide the deeper analytics needed for robust auditing.

Replicability gaps: Without model weights or training data, audits can only approximate outcomes rather than reproduce the exact behavior of the live feed.

Ethical and policy boundaries: Automated posting for probes would violate X’s terms of service, making sanctioned, simulation-first methods the only responsible path forward.

These obstacles don’t make auditing impossible. They underline why true algorithmic accountability will require cooperation between independent researchers, platform providers, and policymakers.

Why This Matters

For researchers: Moves beyond static code releases to dynamic, reproducible tests.

For platforms: Independent audits provide external validation and highlight unintended consequences.

For the public: Builds algorithmic literacy, helping users understand how small differences in structure impact visibility.

Conclusion: From Theater to Accountability

X’s September 2025 release is the most substantial transparency move we’ve seen. But without independent validation, it’s still performance.

AI offers a way forward. With the right guardrails, agentic systems can probe, approximate, and evaluate how algorithms shape discourse in real time.

This is the paradox: platforms stage transparency theater, but AI can turn it into accountability. The question is whether we, as researchers, technologists, and citizens, will build the tools to do it.