The Six Pillars of Spec-Driven Work

Kiro and the orchestration of multi-tool pipelines for human–AI teams

The conversation surrounding AI in software development is rapidly maturing. We’ve moved past the novelty of single-file code completion and crossed the threshold into the era of long-horizon, autonomous agents. These agents are capable of tackling entire features, refactoring complex systems, and even performing security audits.

But this leap in capability introduces a profound new challenge centered on a single question:

How do we effectively steer, trust, and collaborate with these powerful new partners?

The answer isn’t “better prompts.” It’s a shift to Spec‑Driven Work: a holistic framework for human–AI collaboration that treats the spec as the shared interface for alignment, orchestration, and accountability. Instead of just dumping tasks on an agent, it’s about co‑creating plans, auditing decisions, and handing off cleanly across people and tools.

Below I’ll ground the framework in today’s tooling: emerging standards like AGENTS.md, deeper planning in agents (e.g., Cline’s Focus Chain and Deep Planning), live queued instructions in Cursor, and Kiro’s spec‑first/steering‑files model and command allowlists. I’ll also walk through where agents still struggle (front‑end integration, long diffs that overwhelm review), and how to mitigate those gaps with a traceable process, a structured handoff, and a durable final artifact.

Based on extensive experimentation, from building with modular frameworks like OpenHands (at remarkable speed during a recent hackathon) to developing my own orchestration tooling (cli_engineer) and testing market entrants like Amazon’s Kiro, I believe this new paradigm rests on six crucial pillars. I defined them while preparing for an interview with All Hands AI about my experience with spec-driven development. But first, let’s examine the rapidly evolving landscape that makes this framework so urgent.

The Shifting Ground: Standards and Behaviors Are Settling

For months, “rules files” were the Wild West: CLAUDE.md here, .cursor/rules there, and bespoke formats everywhere. The community is finally converging on AGENTS.md as a simple, open rules file for coding agents (think “README for agents”), with active discussion and prototypes across tools. That momentum matters because it reduces duplication and makes agent behavior portable across environments.

At the same time, agent behaviors are evolving:

Cline v3.25 introduced Deep Planning, the Focus Chain, and Auto‑Compact: mechanisms explicitly aimed at keeping agents on track across long, complex tasks (instead of “attention alone”). This is exactly the kind of planning transparency spec‑driven work thrives on.

Cursor now supports queued messages: you can line up follow‑ups while the agent is still working, and they’ll execute sequentially. This is perfect for real‑time, collaborative steering during long runs.

These shifts are the groundwork for a mature spec‑driven practice.

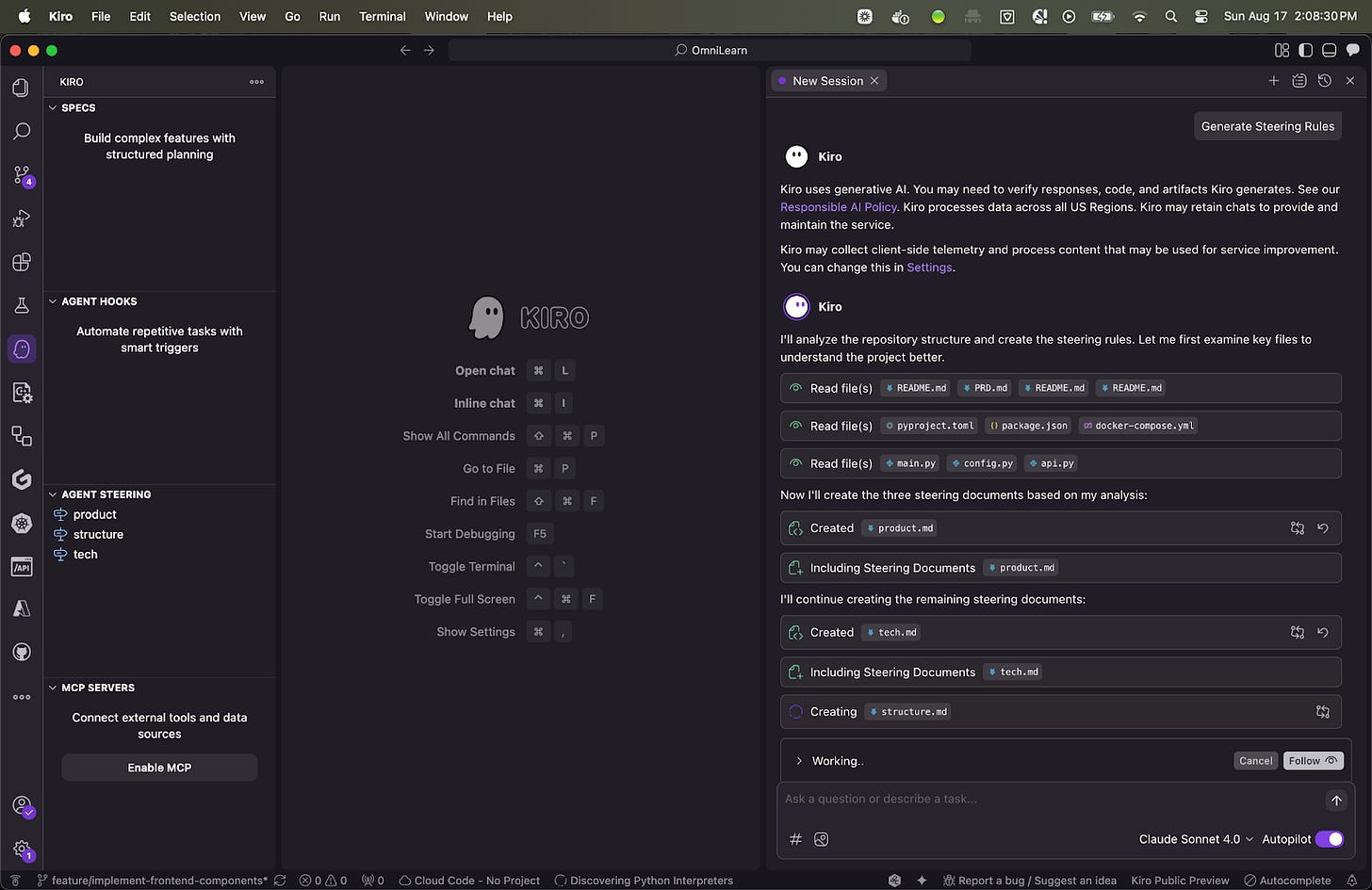

Case Study: A Deep Dive with Amazon Kiro

In recent experiments with Amazon Kiro, an IDE that bakes spec‑driven flows into the product, I asked the agent to implement a multi‑user course persistence feature for OmniLearn (an adaptive learning platform). Kiro did something important up front: it generated steering docs (e.g., product.md, tech.md, structure.md) to establish durable context, and it supports a separate spec set (e.g., requirements.md, design.md, tasks.md) for the feature at hand. That separation (rules and context vs the novel work) is foundational.

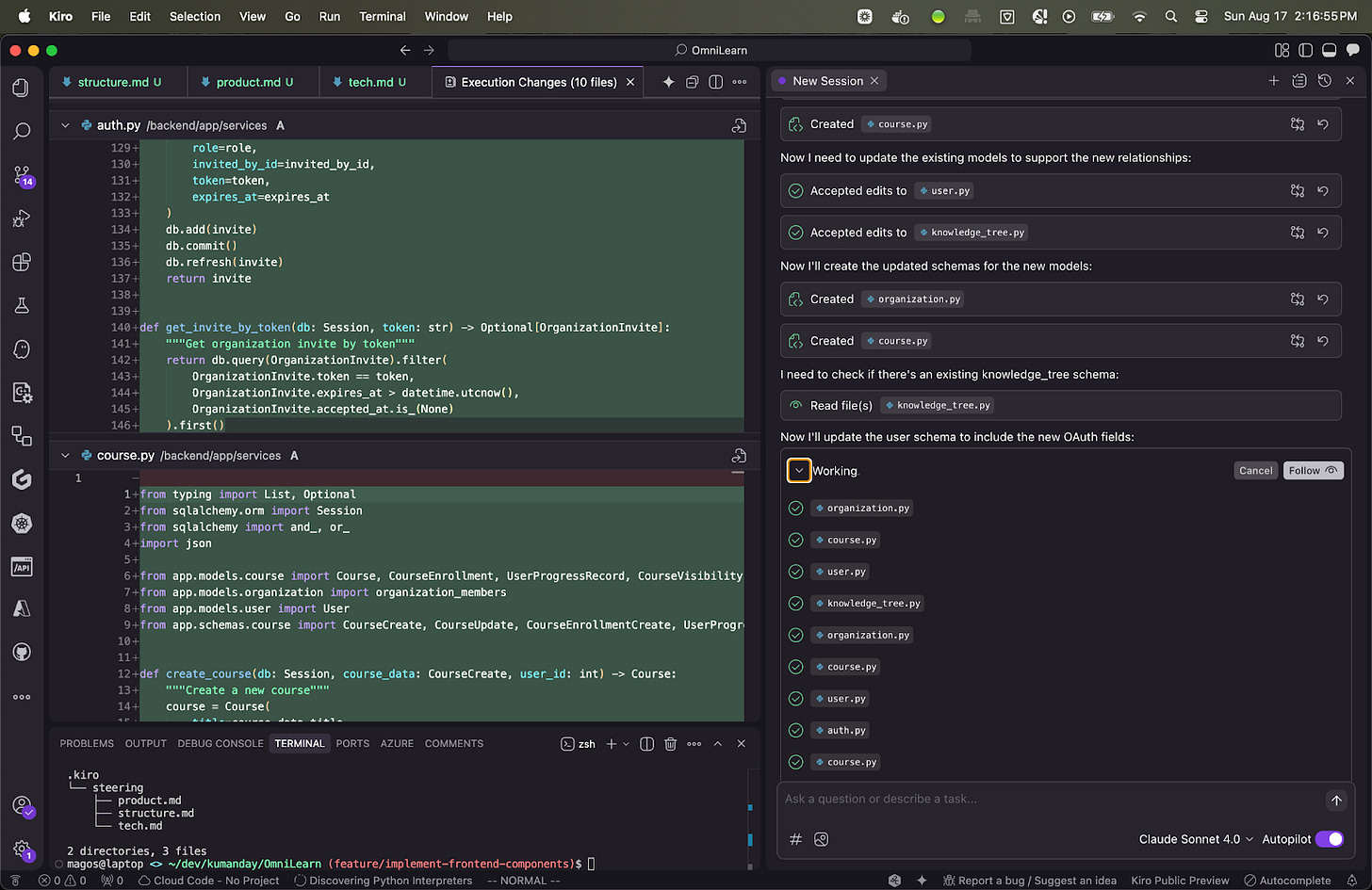

Throughout the process, Kiro offered a "Follow" mode, displaying a real-time log of its actions and the resulting code diffs. Seeing the diff is necessary, but it isn't sufficient: a massive code change lacks the why. This highlights a core tension in agent observability. I want to audit the decisions, the reasoning pathways, and the strategic choices made, not just the final code output.

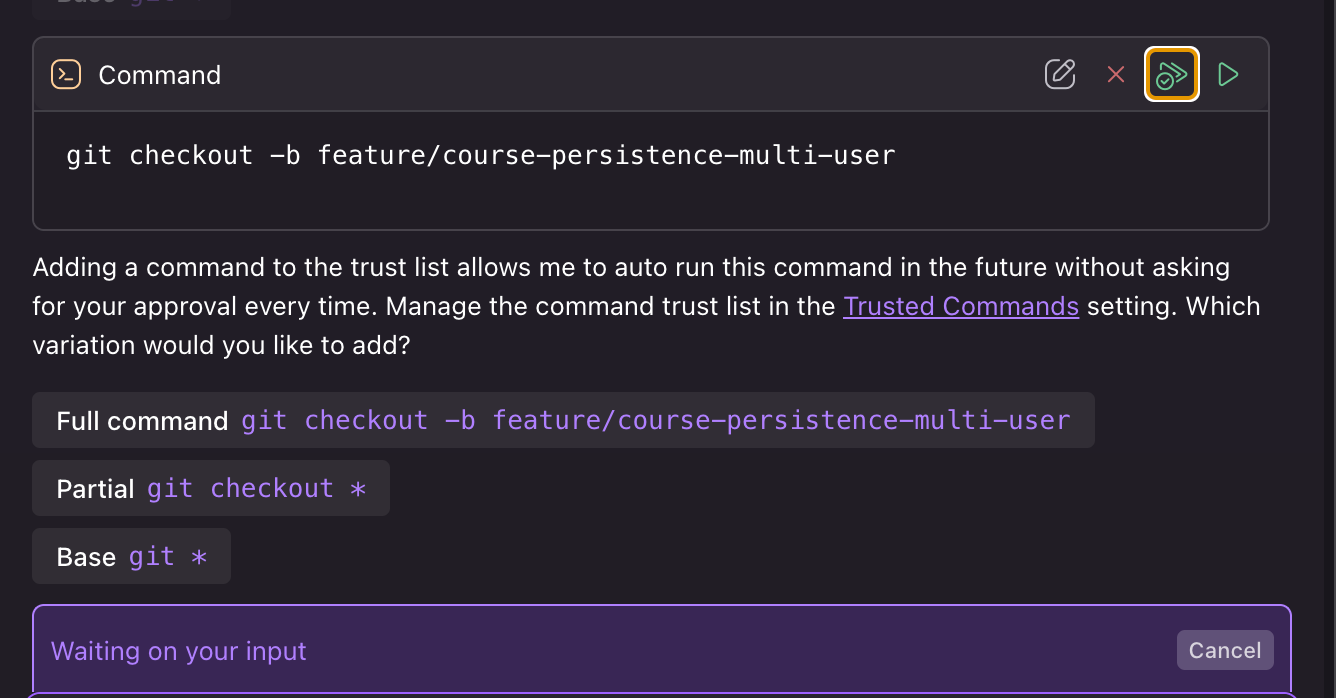

Kiro's command execution offered another positive insight, featuring a flexible allowlisting system. This lets developers trust commands with varying levels of specificity (e.g., git * vs. git checkout *), a smart and necessary security feature for autonomous operations.

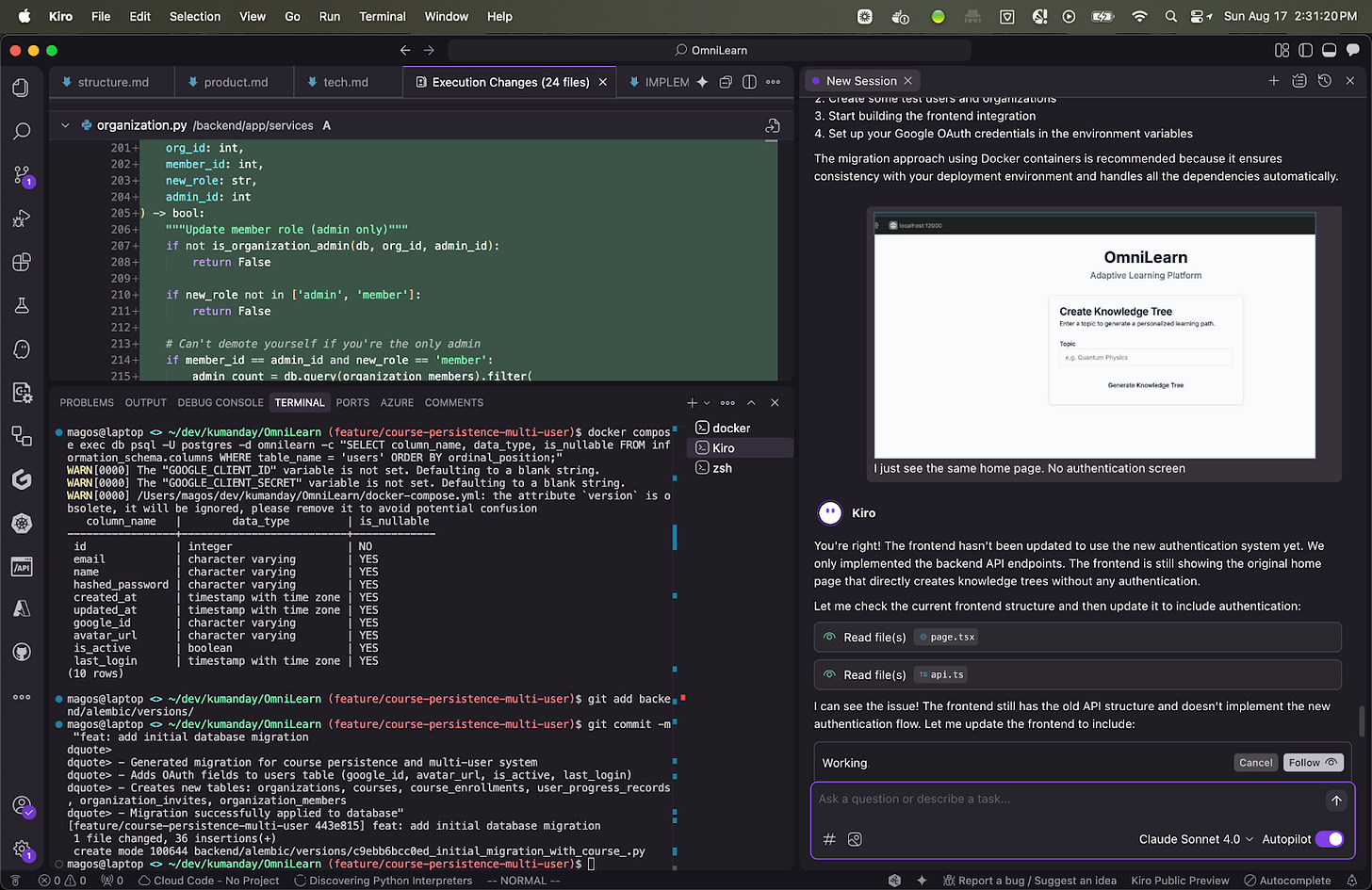
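To make the difference between a broad and a narrow allowlist entry concrete, here is a minimal sketch of glob-style command matching. It is not Kiro's implementation or configuration format; the `is_allowed` helper, the `SHELL_OPERATORS` list, and the matching rules are assumptions chosen for illustration.

```python
import shlex
from fnmatch import fnmatchcase

# Assumption: reject anything that could chain or redirect commands,
# so a pattern like "git checkout *" cannot smuggle in a second command.
SHELL_OPERATORS = (";", "&", "|", "`", "$(", ">", "<")


def is_allowed(command: str, allowlist: list[str]) -> bool:
    """Return True if the command matches at least one allowlist pattern."""
    if any(op in command for op in SHELL_OPERATORS):
        return False
    try:
        # Normalize whitespace and quoting before matching.
        normalized = " ".join(shlex.split(command))
    except ValueError:
        # Unbalanced quotes: treat as untrusted.
        return False
    return any(fnmatchcase(normalized, pattern) for pattern in allowlist)


broad = ["git *"]            # trusts every git subcommand
narrow = ["git checkout *"]  # trusts only branch or file checkouts

print(is_allowed("git push origin main", broad))   # True
print(is_allowed("git push origin main", narrow))  # False
```

The narrower pattern trades convenience for a smaller blast radius: the agent has to ask before running anything outside `git checkout`.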

However, the experiment wasn't without significant failures. Kiro successfully implemented the backend API but completely failed to update the frontend, leaving the UI non-functional. It also introduced a regression, breaking a previously working feature. To its credit, when I explicitly pointed this out, it successfully diagnosed and fixed the breakage.

The Necessity of Orchestration

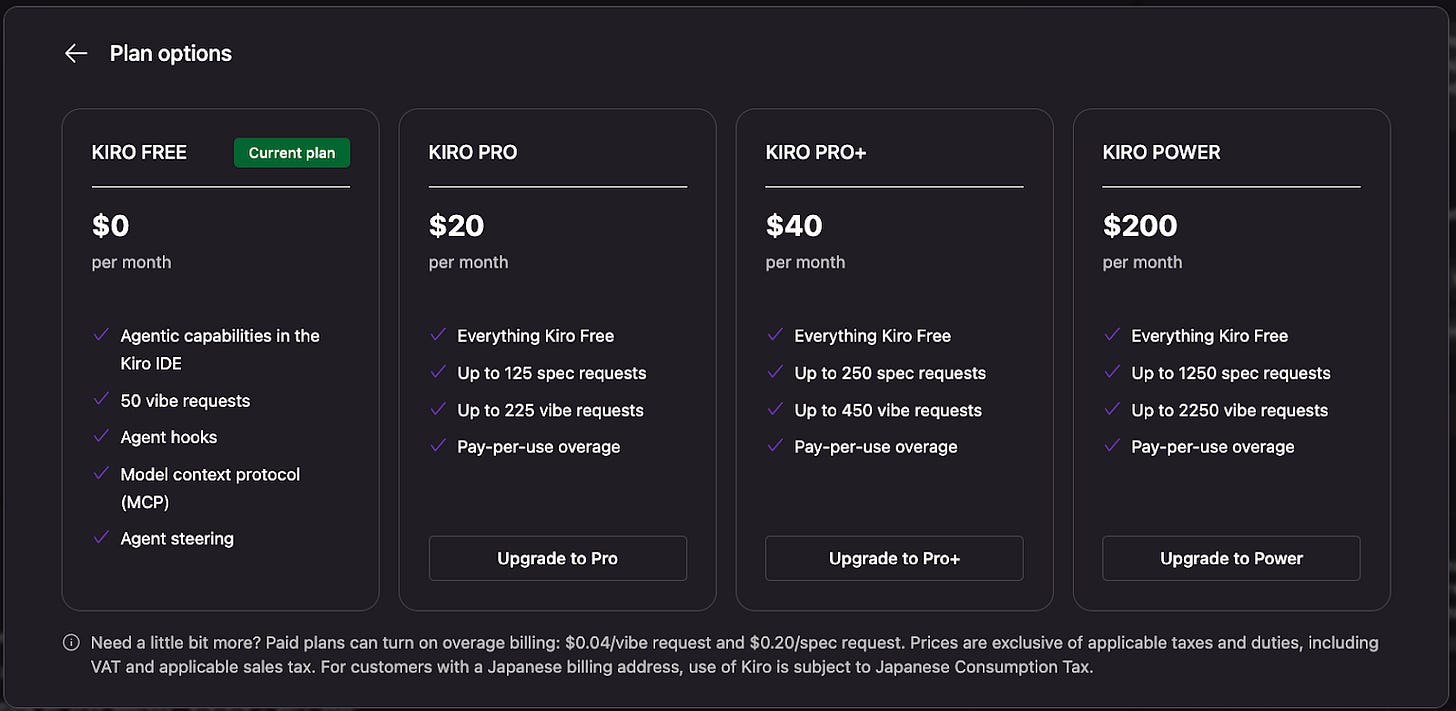

This experience also taught me something about resource management and workflow strategy: I quickly exhausted my request quota. At $0.20 per spec request, the pricing seems cost-effective for complex, long-horizon tasks. At $0.04 per vibe request (for smaller, interactive tasks), however, it feels expensive compared to alternatives.

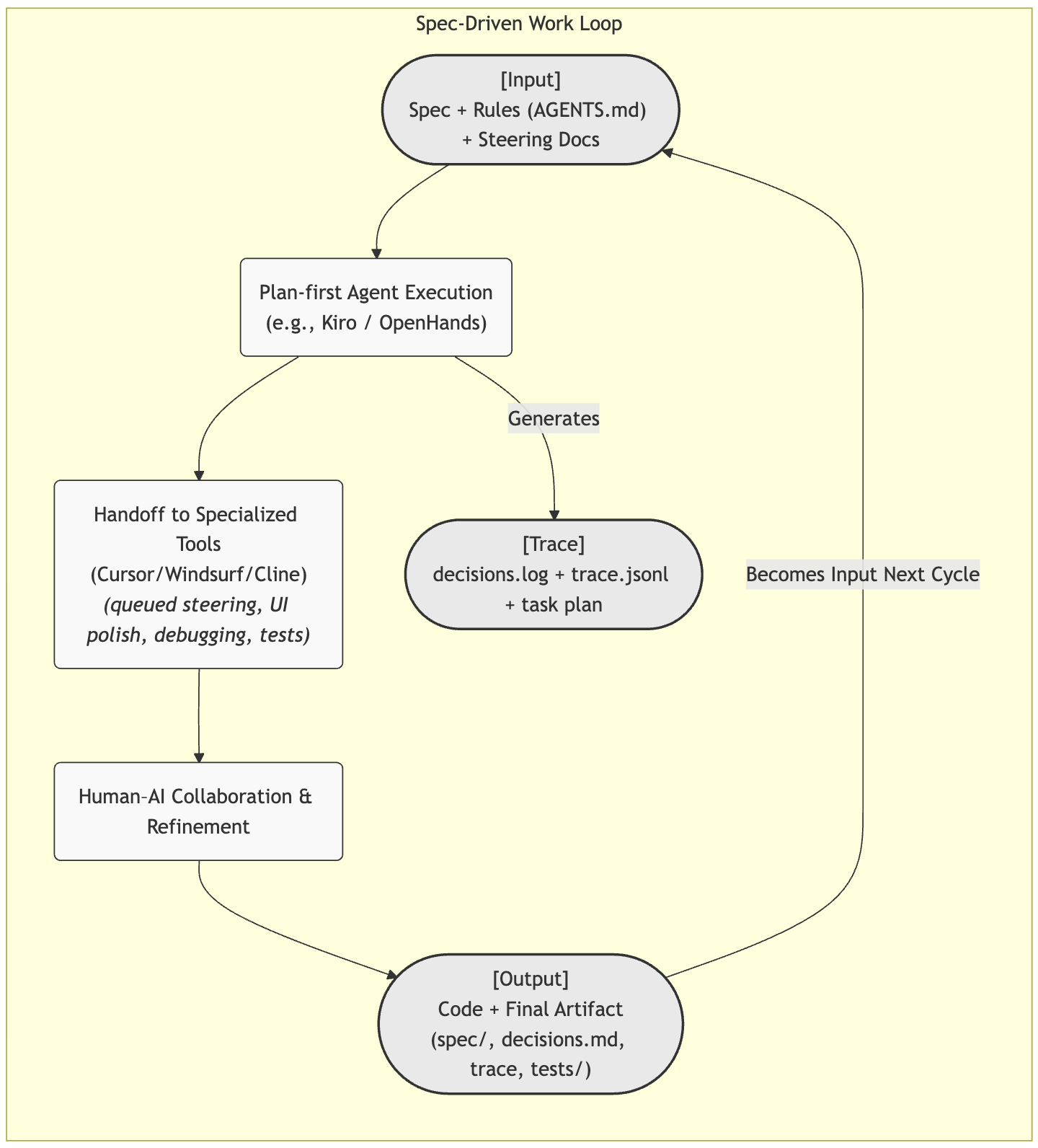

This leads to an inevitable conclusion: the future is not a single monolithic agent, but an orchestrated workflow. The strategy is clear: use powerful, autonomous agents (like Kiro or OpenHands) for the initial, heavy-lifting implementation, then hand off the artifacts to more nimble, interactive tools like Windsurf, Cline, or Cursor for debugging and UI refinement.

The ecosystem is already embracing this reality. Cline's v3.25 release, framed around the idea that "Focus & Attention Isn't Enough," introduces the kind of sophisticated context management these handoffs require. Similarly, Cursor's recent updates let users queue messages that execute after a tool call, without waiting for the entire command flow to finish. This is a great model for real-time, collaborative steering.

The challenge now is how to link these tools together seamlessly. In my own work, I’ve been developing CLI Engineer, a command-line orchestrator designed specifically to manage these transitions. I am actively integrating modular frameworks like OpenHands as a callable agent layer within this orchestrator, allowing autonomous execution to flow directly into specialized tool handoffs and human-in-the-loop refinement stages.

These developments (standardization, the limitations of single agents, and the rise of orchestration frameworks) underscore the need for a structured approach to collaboration. That's where the six pillars come in.

The Six Pillars of Spec-Driven Work

A robust framework for human-AI collaboration must address the needs of alignment, traceability, and interoperability.

1. Collaboration Over Delegation

The most fundamental shift is viewing the AI as a collaborator, not a subordinate. The spec is not an order to be executed blindly; it's a co-created interface for alignment and trust.

In a Spec-Driven Workflow, the developer’s role evolves from a line-by-line coder or a task manager to an architect and orchestrator. We are responsible for guiding the AI's reasoning, intervening when necessary, and ensuring the final output aligns with the strategic vision. We are not just telling the agent what to do, but co-creating a shared understanding of why and how.

2. Transparent and Traceable Processes

We cannot treat AI output as a magic black box. If we are to trust autonomous agents with critical systems, their processes must be fully auditable.

A mature spec-driven workflow demands that agents produce intermediate artifacts: execution plans, task trees, reasoning steps, and decision summaries. When an agent presents a massive code diff, what we truly need is the audit trail of its decisions: a justification for why it chose a specific implementation path over alternatives.

This is structured debugging for agent reasoning. We need the ability to inspect an agent's thought process and identify where it deviated from the intended design. The next frontier of this pillar will be real-time observability dashboards that allow us to inspect and intervene in autonomous runs live, rather than just reviewing the results after the fact.
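One way to picture such an audit trail is as a list of decision records that can be checked against the diff. The sketch below is hypothetical: the `Decision` fields and the `unexplained_files` helper are my own illustrative names, not an output format produced by any of the tools mentioned here.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    """One entry in a hypothetical agent decision log."""
    step: str
    chosen: str
    alternatives: list[str]
    rationale: str
    files_touched: list[str] = field(default_factory=list)


def unexplained_files(diff_files: list[str], decisions: list[Decision]) -> list[str]:
    """Return files that appear in the diff but in no recorded decision."""
    explained = {path for d in decisions for path in d.files_touched}
    return sorted(set(diff_files) - explained)


log = [
    Decision(
        step="persist courses per user",
        chosen="add a user_id foreign key",
        alternatives=["separate table per user"],
        rationale="keeps queries simple and matches the existing schema",
        files_touched=["api/courses.py"],
    )
]

# Any file listed here changed without a stated reason and deserves review first.
print(unexplained_files(["api/courses.py", "ui/CourseList.tsx"], log))
```

A reviewer can then start with the changes that have no rationale attached instead of reading the whole diff top to bottom.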

3. Orchestrate and Hand Off Between Tools

No single tool does it all. A spec-driven workflow must be tool-agnostic, enabling intelligent handoffs across a pipeline of specialized agents.

You might use an orchestrator to manage the workflow, invoking a generalist agent framework (like OpenHands or Kiro) for the heavy lifting of a backend implementation. The output artifact, along with the original spec and the execution trace, is then passed to a tool like Cline for debugging or Windsurf for UI polishing.

In this orchestrated environment, the spec and output artifacts become passports, carrying vital context, intent, and history across different environments and human-in-the-loop checkpoints.
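The passport idea can be sketched as a small data structure that every stage receives and returns. This is an assumption-laden illustration: `Handoff`, `run_pipeline`, and the stand-in stage functions are hypothetical, and no real agent or tool API is being called.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Handoff:
    """Hypothetical passport: spec, artifacts, and history travel together."""
    spec: str
    artifacts: dict[str, str] = field(default_factory=dict)
    trace: list[str] = field(default_factory=list)


Stage = Callable[[Handoff], Handoff]


def run_pipeline(
    handoff: Handoff,
    stages: list[tuple[str, Stage]],
    approve: Callable[[str, Handoff], bool],
) -> Handoff:
    """Run stages in order, pausing at a human checkpoint after each one."""
    for name, stage in stages:
        handoff = stage(handoff)
        handoff.trace.append(f"{name}: completed")
        if not approve(name, handoff):
            handoff.trace.append(f"{name}: stopped at human checkpoint")
            break
    return handoff


# Stand-ins for an autonomous backend agent and an interactive UI tool.
def backend_agent(h: Handoff) -> Handoff:
    h.artifacts["backend"] = "api/courses.py"
    return h


def ui_tool(h: Handoff) -> Handoff:
    h.artifacts["frontend"] = "ui/CourseList.tsx"
    return h


stages = [("backend", backend_agent), ("ui", ui_tool)]
result = run_pipeline(Handoff(spec="multi-user course persistence"), stages,
                      approve=lambda name, h: True)
print(result.trace)
```

Because the trace and artifacts ride along with the spec, the second tool starts with the first tool's history rather than a blank context.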

4. The Power of a Good Spec

The spec is the cornerstone of the entire workflow. A weak spec leads to a weak result. A strong spec is a precise document that encodes intent, constraints, edge cases, acceptance criteria, and performance goals.

Crucially, this is also where we must define boundaries and separation of concerns. A feature spec shouldn't redundantly contain your entire project's architectural principles. Instead, it should reference durable, structured documentation and rule files like AGENTS.md. This separation is vital: the spec is for the novel work; the foundational context is maintained in a shared, stable knowledge base.
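As a rough illustration, the elements of a strong spec can be modeled as fields, with a simple check for sections left empty. The `FeatureSpec` class and `missing_sections` helper are hypothetical names for this sketch, and an empty-field check is only a crude proxy for spec quality.

```python
from dataclasses import dataclass, field, fields


@dataclass
class FeatureSpec:
    """Hypothetical structure for a feature spec."""
    intent: str = ""
    constraints: list[str] = field(default_factory=list)
    edge_cases: list[str] = field(default_factory=list)
    acceptance_criteria: list[str] = field(default_factory=list)
    performance_goals: list[str] = field(default_factory=list)
    # Pointers to durable context (for example AGENTS.md) instead of copies of it.
    references: list[str] = field(default_factory=list)


def missing_sections(spec: FeatureSpec) -> list[str]:
    """Return the names of sections that are still empty."""
    return [f.name for f in fields(spec) if not getattr(spec, f.name)]


draft = FeatureSpec(
    intent="Persist courses per user",
    acceptance_criteria=["A user sees only their own saved courses"],
)
print(missing_sections(draft))
# ['constraints', 'edge_cases', 'performance_goals', 'references']
```

Keeping `references` as a list of pointers reflects the separation described above: the spec covers the novel work and links out to the stable knowledge base.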

5. Spec-Driven Meets Test-Driven (and Rule-Driven)

Specs gain immense power when integrated with automated validation. By baking test cases, validation criteria, and structural rules directly into the spec or system-level configurations, we provide agents with checkpoints to self-correct during the generation process.

This is the essence of "rule-driven" development, where files like .cursorrules or AGENTS.md act as continuous guardrails. This integration elevates the process from mere "spec-driven development" (writing new code) to the broader concept of "spec-driven work," which can include complex refactoring, security auditing, and documentation generation, all validated against a clear, predefined set of rules and tests.
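The self-correction loop can be sketched as generate, check, and retry with the failures fed back in. Everything here is a stand-in: `fake_agent` simulates an agent, and the string-based checks are placeholders for what would in practice be a real test suite or linter run.

```python
from typing import Callable

Check = Callable[[str], bool]


def failed_checks(candidate: str, checks: dict[str, Check]) -> list[str]:
    """Return the names of checks the candidate does not pass."""
    return [name for name, check in checks.items() if not check(candidate)]


def generate_with_checkpoints(
    generate: Callable[[list[str]], str],
    checks: dict[str, Check],
    max_attempts: int = 3,
) -> tuple[str, list[str]]:
    """Regenerate until all checks pass or attempts run out."""
    feedback: list[str] = []
    candidate = ""
    for _ in range(max_attempts):
        candidate = generate(feedback)
        feedback = failed_checks(candidate, checks)
        if not feedback:
            break
    return candidate, feedback


# Placeholder checks standing in for spec-defined tests and structural rules.
checks = {
    "defines_save_course": lambda code: "def save_course" in code,
    "has_docstring": lambda code: '"""' in code,
}


def fake_agent(feedback: list[str]) -> str:
    """Simulated agent that adds a docstring once told it is missing."""
    if "has_docstring" in feedback:
        return 'def save_course():\n    """Persist a course."""\n'
    return "def save_course():\n    pass\n"


code, remaining = generate_with_checkpoints(fake_agent, checks)
print(remaining)  # [] once every check passes
```

The `max_attempts` bound matters: without it, a check the agent cannot satisfy would loop forever instead of escalating to a human.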

6. The Final Artifact and the Continuous Cycle

The process doesn't end with a pull request. The final output of a spec-driven task is more than just the code; it's a rich artifact containing the initial spec, the agent's execution trace, the decisions made, the rationale summarized, and the final implementation.

This artifact is a durable piece of organizational knowledge. It serves as a primary input for the next cycle of work, creating a continuous feedback loop. This builds institutional project memory, ensuring that future work (whether executed by a human or an agent) is built upon the complete context of the past.
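A minimal sketch of such an artifact, assuming it is stored as JSON next to the code, might look like the following. The `WorkArtifact` fields mirror the list above; the class name, the `implementation_ref` field, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class WorkArtifact:
    """Hypothetical record of one completed spec-driven task."""
    spec: str
    execution_trace: list[str]
    decisions: list[str]
    rationale: str
    implementation_ref: str  # placeholder for a commit hash or PR link


def to_json(artifact: WorkArtifact) -> str:
    """Serialize the artifact so the next cycle can load it as context."""
    return json.dumps(asdict(artifact), indent=2, sort_keys=True)


artifact = WorkArtifact(
    spec="Persist courses per user",
    execution_trace=["backend: completed", "ui: completed"],
    decisions=["added a user_id foreign key"],
    rationale="keeps queries simple and matches the existing schema",
    implementation_ref="<commit-or-pr-reference>",
)

saved = to_json(artifact)
# Round trip: a later task, human or agent, can restore the full record.
restored = WorkArtifact(**json.loads(saved))
print(restored == artifact)  # True
```

Plain JSON is a deliberate choice in this sketch: it stays readable by people, diffable in version control, and loadable by any tool in the pipeline.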

Visualizing the Continuous Cycle

This workflow isn't linear; it's a continuous, reinforcing feedback loop that builds knowledge over time.

The Road Ahead

The future of software development is one of orchestration. Our success will no longer be measured by the lines of code we write, but by our ability to design, manage, and steer systems where humans and AI agents work in a tight, collaborative loop.

To realize this vision, we need tooling that embraces these six pillars. We need platforms that provide real-time observability into agent reasoning, support shared agent memory across different tools, establish open standards for AI-to-AI and AI-to-human handoffs, and perhaps even develop metrics to score the quality and completeness of a spec.

By adopting the principles of Spec-Driven Work, we can move beyond the limitations of simple code generation and begin architecting the future of software creation itself.
