The Autonomous Developer

A Guide to Tools, Trust, and Transparency in AI Coding

The field of software development is rapidly being reshaped by the emergence of sophisticated AI-powered coding agents. These tools are moving beyond simple code completion to automate complex aspects of the development lifecycle, including writing, debugging, and even managing entire projects. This report offers a detailed analysis and comparison of prominent autonomous coding agents, examining their code execution environments, agentic capabilities such as tool use and reasoning, flexibility in Large Language Model (LLM) providers, their open-source or proprietary nature, levels of autonomy, and the scope of tasks they can undertake. Understanding these nuances is crucial for developers, engineering leads, and organizations aiming to leverage AI for enhanced productivity and innovation. The agents under review include Devin, OpenHands, Claude Code, OpenAI Codex (and its CLI variant), Cline, Cursor (specifically its background agents), Aider, ZenCoder, Augment Code Remote Agents, Goose, and cli_engineer.

A Call for Developer Autonomy: The Genesis of an Open-Source Agent

Before diving into a comparative analysis, it's important to frame this exploration with the catalyst that led to the creation of one of these agents: cli_engineer. The impetus was a stark wake-up call for the developer community: the story of the AI IDE Windsurf, which had its access to Anthropic's Claude API abruptly terminated, deeply affecting its core product. This event highlighted a critical vulnerability at the heart of the AI revolution: the profound risk of building innovative tools on closed-source, proprietary platforms where access can be revoked without warning.

This dependency creates a single point of failure, forcing developers to cede control over their own tools and futures to large corporations. The incident served as a powerful reminder that true innovation requires autonomy. In response to this challenge, I intensified the development of cli_engineer. The project was founded on a simple but crucial principle: to provide a powerful, effective, and completely open-source AI agent that frees developers from vendor lock-in. By supporting a wide array of LLMs, from proprietary APIs to locally run open-source models, cli_engineer is a deliberate step towards a more resilient, transparent, and developer-controlled ecosystem.

This analysis, therefore, is not just an academic exercise. It is an exploration of a dynamic and contested landscape, viewed through the lens of a developer who believes that the future of AI in software engineering must be open, flexible, and firmly in the hands of the creators themselves.

The Evolving Role of AI in Software Development

Artificial intelligence has progressively expanded its influence in software development. Initially, AI tools concentrated on tasks such as code completion (e.g., early GitHub Copilot powered by OpenAI Codex), syntax checking, and basic bug detection. These early tools functioned primarily as assistants, augmenting developer capabilities but requiring continuous human guidance. The advent of more powerful LLMs and agentic frameworks has spurred a shift towards AI systems capable of performing more intricate, multi-step tasks with greater autonomy. This new generation of "autonomous coding agents" aims to understand developer intent from natural language, plan and execute coding tasks, interact with development environments (files, terminals, browsers), integrate with version control, and learn from feedback. The ambition ranges from automating routine chores to tackling significant software project segments, potentially reshaping developer workflows. This evolution presents immense opportunities for productivity gains alongside new challenges concerning trust, security, code quality, and the changing role of human developers.

Detailed Analysis of Selected Coding Agents

This section provides an in-depth examination of each selected coding agent, focusing on their core functionalities, technical underpinnings, and operational characteristics.

Devin

Overview: Introduced as the "world's first AI software engineer," designed for autonomous end-to-end complex software engineering tasks.

Code Execution Environment: Operates in a sandboxed cloud environment with a Linux shell, a VS Code-like code editor, and a web browser. Enterprise clients are offered VPC deployment (the Dev Box runs in the customer's VPC and communicates securely with the Devin backend). Docker containers are reportedly used for isolation. Professional-grade cloud sandboxing.

Agentic Capabilities:

Tool Integration: Uses integrated shell, editor, browser for online resources. GitHub integration for PRs, Slack interaction. Devin 2.0 has "Devin Search" for codebase exploration and "Devin Wiki" for automated documentation indexing.

Reasoning: Complex reasoning, planning, problem-solving. Formulates step-by-step plans, executes, debugs, updates plan. Long-term memory, adaptive reasoning (retains context across thousands of steps). Devin 2.0's "Interactive Planning": researches codebase, develops detailed plan for user review before autonomous work. Learns from feedback, corrects own mistakes from test outcomes.
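The plan, execute, debug, update cycle described above can be illustrated with a short Python sketch. It is not Devin's implementation, which is not public: the `run_with_replanning` function, the toy executor, and the rule of queuing a "debug" step before retrying a failed step are hypothetical choices made only to show how a plan can be revised while it runs.

```python
from collections import deque
from typing import Callable


def run_with_replanning(plan: list[str],
                        run_step: Callable[[str], bool],
                        max_steps: int = 20) -> list[str]:
    """Execute plan steps in order. When a step fails, update the plan by
    queuing a debug step followed by a retry of the failed step.
    Returns a log of outcomes; max_steps bounds the total work."""
    queue = deque(plan)
    log: list[str] = []
    steps_taken = 0
    while queue and steps_taken < max_steps:
        step = queue.popleft()
        steps_taken += 1
        if run_step(step):
            log.append(f"ok: {step}")
        else:
            log.append(f"failed: {step}")
            queue.appendleft(step)             # retry the step after debugging
            queue.appendleft(f"debug {step}")  # investigate the failure first
    return log


# Toy executor (hypothetical): tests fail once, then pass after a debug step.
attempts = {"run tests": 0}


def toy_run_step(step: str) -> bool:
    if step == "run tests":
        attempts["run tests"] += 1
        return attempts["run tests"] > 1
    return True


print(run_with_replanning(["write code", "run tests", "open PR"], toy_run_step))
```

The `max_steps` bound matters: without it, a step that never succeeds would keep spawning debug steps indefinitely, the kind of loop that agents without good backtracking can fall into.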

LLM Provider: Compound AI system using LLMs (akin to GPT-4/Turbo) augmented with custom planning/reasoning algorithms. Does not support third-party LLM API keys.

Licensing: Proprietary, commercial SaaS product with plans involving Agent Compute Units (ACUs).

Autonomy and Human Oversight: Initially marketed with a strong emphasis on full autonomy (from task to completed PR). Devin 2.0 shifts toward more collaboration, bringing the user in as needed and allowing review and editing in the Devin IDE. Very high autonomy.

Task Scope: Building full-stack apps, finding/fixing bugs, learning/applying new technologies, fine-tuning ML models. Reported 13.86% unassisted SWE-Bench resolution.

Unique Features: Integrated dev environment (shell, editor, browser) in sandbox; end-to-end task autonomy goal; Devin 2.0 features (Interactive Planning, Devin Search, Devin Wiki); MultiDevin concept (manager Devin coordinating worker Devins).
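The MultiDevin manager/worker concept is essentially a fan-out/fan-in pattern. The sketch below assumes, purely for illustration, that the manager splits a task into one subtask per line and that each worker is a stub function; Python's `concurrent.futures.ThreadPoolExecutor` stands in for whatever orchestration Devin actually uses.

```python
from concurrent.futures import ThreadPoolExecutor


def worker(subtask: str) -> str:
    # Hypothetical worker: a real agent would edit code and run tests here.
    return f"done: {subtask}"


def manager(task: str, max_workers: int = 4) -> dict[str, str]:
    """Split a task into subtasks (one per non-empty line, an assumption),
    run them on parallel workers, and collect results keyed by subtask."""
    subtasks = [line.strip() for line in task.splitlines() if line.strip()]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as the inputs
        results = list(pool.map(worker, subtasks))
    return dict(zip(subtasks, results))


report = manager("fix lint errors in api/\nadd tests for utils/\n")
print(report)
```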

Strengths: High ambition for autonomy; comprehensive integrated toolset; strong initial benchmark claims.

Limitations: High cost; mixed independent reviews (struggling with real-world tasks, taking excessive time, failing to complete demoed tasks); "Upwork task controversy." Users cannot choose underlying LLM. Workflow can be opaque.

OpenHands (formerly OpenDevin, by All Hands AI)

Overview: An open-source platform for developing AI agents that interact like human software developers (writing code, using the CLI, web browsing). It aims to replicate and enhance autonomous AI software engineers and is community-driven.

Code Execution Environment: Allows agents to execute commands in a sandboxed environment, often a Docker container, to isolate actions. Users running locally via Docker are advised to use hardened configurations for security. Primarily for single-user local workstations, lacking built-in multi-tenant security. Daytona infrastructure can provide isolated sandbox management.

Agentic Capabilities:

Tool Integration: Agents can write/edit code, run shell commands, browse the web, and call APIs. Features an extensible agent skills library and the Agent-Computer Interface (ACI) package for code editing, linting, and shell command execution.

Reasoning: Supports multi-agent collaboration through event streaming for delegating subtasks. Uses an event stream architecture for agent actions and observations. Includes "Microagents" for specific tasks.
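To make the event-stream idea concrete, here is a minimal Python sketch in which agent actions and environment observations are appended to one ordered log and subscribers react to each new event. The `Action`, `Observation`, and `EventStream` classes and the toy runtime are hypothetical simplifications, not the OpenHands API.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Action:
    source: str   # which agent emitted the action
    command: str  # e.g. a shell command to execute


@dataclass
class Observation:
    cause: int    # index of the Action this observation responds to
    content: str


Event = Union[Action, Observation]
Subscriber = Callable[[int, Event], None]


class EventStream:
    """Append-only, ordered log of events; subscribers are notified of each one."""

    def __init__(self) -> None:
        self.events: list[Event] = []
        self.subscribers: list[Subscriber] = []

    def subscribe(self, callback: Subscriber) -> None:
        self.subscribers.append(callback)

    def add(self, event: Event) -> int:
        self.events.append(event)
        index = len(self.events) - 1
        for callback in list(self.subscribers):
            callback(index, event)
        return index


def make_runtime(stream: EventStream) -> Subscriber:
    """Toy runtime: answers every Action with an Observation (no real execution)."""
    def on_event(index: int, event: Event) -> None:
        if isinstance(event, Action):
            stream.add(Observation(cause=index, content=f"ran: {event.command}"))
    return on_event


stream = EventStream()
stream.subscribe(make_runtime(stream))
stream.add(Action(source="coder", command="pytest -q"))
for event in stream.events:
    print(event)
```

Because every action and observation lands in one ordered log, delegating to a sub-agent can be modeled as another subscriber that reads the stream and adds its own actions, and the full history doubles as a replayable trace.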

LLM Provider: LLM-agnostic; supports various LLMs. Documentation suggests Anthropic's Claude Sonnet 4 works best, but many other options, including locally hosted models, are supported.

Licensing: Released under the MIT license.

Autonomy and Human Oversight: Aims for high autonomy, with agents performing tasks like human developers. The agent typically decides if human input is needed. It supports both autonomous operation and collaborative modes.

Task Scope: Designed for complex software engineering tasks (SWE-Bench) and web browsing (WebArena, MiniWoB++). Can handle modifying code, running commands, web browsing, and API calls.

Unique Features: Open-source, community-driven nature, AgentHub for sharing agent architectures, event stream architecture, multi-agent collaboration, and built-in evaluation framework.

Strengths: Open-source model fostering collaboration and transparency; active and welcoming community; comprehensive agent capabilities; robust evaluation framework; LLM-agnostic; extensible agent skills library.

Limitations: Complexities of managing security for a locally run agent; designed for single-user local workstations without further customization; lacks sophisticated backtracking, potentially leading to loops without user intervention. Lacks built-in enterprise features like centralized authentication or fine-grained access control without custom setup. Several of these limitations are addressed in their commercial cloud offering.

Claude Code

Overview: Developed by Anthropic, Claude Code is an agentic coding tool designed for the user's terminal, integrating with popular IDEs to accelerate coding by understanding the codebase and assisting through natural language commands. It aims to be a "code's new collaborator."

Code Execution Environment: Claude Code runs locally in the user's terminal and communicates directly with model APIs without an intermediary backend server or remote code index. It does not use a separate sandbox environment but works directly in the user's system with guardrails. File modifications require explicit user approval. Security relies heavily on user-granted permissions and the recommendation for users to implement external isolation like Docker Dev Containers.

Agentic Capabilities:

Tool Integration: Integrates with user's command-line tools, VS Code, and JetBrains IDEs. It can use existing test suites, build systems, and Git for commits and PRs. It also uses web search for documentation. It supports multi-agent workflows and integrates deeply with the shell, Git, GitHub CLI, and MCP servers.

Reasoning: Employs "agentic search" for codebase understanding, mapping project structure and dependencies. Optimized for code understanding and generation, it can make coordinated multi-file changes. It offers tiered "thinking" budgets for varying reasoning depth.

LLM Provider: Powered by Anthropic's models (Claude Opus 4, Sonnet 4, Haiku 3.5). Enterprise deployments can connect to Amazon Bedrock or Google Cloud Vertex AI. It is effectively locked into the Anthropic ecosystem.

Licensing: A proprietary product from Anthropic, available via Max subscription plans or token-based pricing. It's released under a restrictive Business Source License (BUSL 1.1).

Autonomy and Human Oversight: Designed as a collaborator requiring explicit user approval for file modifications, positioning it as an advanced assistant rather than fully autonomous. It has medium-high autonomy, able to independently tackle tasks with minimal guidance but needs confirmation for file changes/commands.

Task Scope: Handles code architecture questions, file editing, bug fixing, test execution and fixing, linting, Git operations, and web documentation browsing. Suited for code onboarding and multi-file edits.

Unique Features: Direct terminal operation, agentic search for codebase awareness, enterprise integration with Bedrock/Vertex AI, and IDE integrations for diff viewing and context sharing.

Strengths: Strong integration with Anthropic's models, local execution offering data privacy (feedback transcripts stored for 30 days, not used for training generative models), enterprise-friendly deployment. Superior code understanding due to large context windows.

Limitations: Reliance on the Anthropic model ecosystem; explicit approval for modifications can slow workflows. Can be expensive for heavy use. The core agent executable is not open-source. Its source code was at one point leaked and forked in an attempt to add model flexibility, but the fork was disabled by a DMCA takedown, another demonstration of Anthropic's stance on developers versus its corporate interests.

OpenAI Codex and Codex CLI

Codex Cloud Agent (New, May 2025): A cloud-based agent in ChatGPT, powered by a fine-tuned o3 model (codex-1), for autonomous feature building, bug fixing, and handling development workflows.

Execution Environment: Each task runs in its own secure, isolated Docker container in the cloud; network access disabled after initial setup scripts.

Agentic Capabilities: Runs terminal commands, writes code, runs tests, interacts with Git (PRs), uses AGENTS.md files for instructions. Interprets natural language, handles multi-step instructions.

LLM Provider: Powered by codex-1 (a specialized o3 model).

Licensing: Proprietary, part of ChatGPT (Pro, Team, Enterprise).

Autonomy: Designed for autonomous task handling (delegation model) from prompt to PR, with real-time monitoring. Two modes: "ask" (read-only) and "code" (modifies code).

Task Scope: Bug fixing, code review, adding tests, refactoring, feature implementation, optimized for small, self-contained tasks.

Strengths: Strong security model for cloud execution; AGENTS.md allows deep customization.

Limitations: Proprietary, fixed LLM choice; requires trust in OpenAI's cloud; no mid-task guidance; outdated knowledge base if not updated. When tasked with more complex projects, it’s prone to implementing stub or placeholder code despite instructions to the contrary.

Codex CLI: An open-source command-line tool bringing reasoning models (default o4-mini) to the terminal as a local coding agent. Built on Node.js.

Execution Environment: Runs locally. For "Full Auto" mode, uses sandbox-exec on macOS and recommends Docker on Linux (with restricted network access). Windows support is experimental (WSL).

Agentic Capabilities: Reads/writes files (via patches), executes sandboxed shell commands, interacts with Git. Can use multimodal inputs. Iterates based on results and user feedback.

LLM Provider: Defaults to o4-mini; can use any OpenAI Responses API model; via LiteLLM, can use 100+ LLMs including Claude and Gemini.

Licensing: Open-source.

Autonomy: Configurable via approval modes: Suggest (default), Auto Edit, Full Auto (sandboxed, network-disabled).
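Read as a policy, the three approval modes map each kind of action to either "run automatically" or "ask the user first." The Python sketch below encodes that reading, assuming (as the mode names suggest) that Suggest auto-approves only reads and Auto Edit additionally auto-applies file edits. The enum and function names are hypothetical, and the sketch models only the approval decision, not Codex CLI's sandboxing or its real configuration.

```python
from enum import Enum


class Mode(Enum):
    SUGGEST = "suggest"
    AUTO_EDIT = "auto-edit"
    FULL_AUTO = "full-auto"


class ActionKind(Enum):
    READ_FILE = "read"
    WRITE_FILE = "write"
    SHELL = "shell"


# Actions listed for a mode run without asking; anything else needs approval.
AUTO_APPROVED: dict[Mode, set[ActionKind]] = {
    Mode.SUGGEST: {ActionKind.READ_FILE},
    Mode.AUTO_EDIT: {ActionKind.READ_FILE, ActionKind.WRITE_FILE},
    # Full Auto approves everything, which is why it is paired with a
    # sandbox that has networking disabled.
    Mode.FULL_AUTO: {ActionKind.READ_FILE, ActionKind.WRITE_FILE, ActionKind.SHELL},
}


def needs_approval(mode: Mode, kind: ActionKind) -> bool:
    return kind not in AUTO_APPROVED[mode]


for mode in Mode:
    asks = [k.value for k in ActionKind if needs_approval(mode, k)]
    print(f"{mode.value}: asks before {asks or 'nothing'}")
```

Keeping the policy as data rather than scattered `if` statements makes the trade-off between speed and oversight explicit and easy to audit.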

Task Scope: Refactoring, debugging, writing tests, migrations, batch file operations, code explanation.

Strengths: Open-source, high LLM flexibility (especially via LiteLLM); local execution control; robust sandboxing options; Git integration; lower cost potential.

Limitations: Windows support experimental; Full Auto mode carries inherent risks; network disabled in sandbox can affect tests requiring external services; early-stage performance lagged some rivals; Node.js dependency.

Cline

Overview: An open-source AI autonomous coding agent designed as a VS Code extension, aiming to be a collaborative AI partner.

Code Execution Environment: Operates within the user's local development environment via VS Code. Employs a human-in-the-loop GUI for approving file changes and terminal commands. Can leverage Shakudo's infrastructure for secure operations if integrated. Relies on user approval more than an automated sandbox.

Agentic Capabilities:

Tool Integration: Creates/edits files, executes terminal commands, uses a browser (with permission). Integrates with Model Context Protocol (MCP) servers for external databases, GitHub, web services, etc. Features an "MCP Marketplace" for tools.

Reasoning: Features "Plan & Act" modes for collaborative planning before action. Analyzes file structure and ASTs. Can autonomously detect issues and propose fixes.

Computer Use Automation: Cline can launch a browser, click elements, type, scroll, and capture screenshots or console logs for interactive debugging, end-to-end testing, and web use.

LLM Provider: Model agnostic with BYOK API model. Supports Anthropic Claude 3.7 Sonnet, DeepSeek Chat, Google Gemini 2.0 Flash, OpenRouter, OpenAI, AWS Bedrock, Azure, GCP Vertex, and local models (LM Studio/Ollama).

Licensing: Open-source (stated as Apache 2.0 by some aggregators).

Autonomy and Human Oversight: Described as an autonomous agent but emphasizes partnership. Requires user permission for actions via GUI. "Plan & Act" involves collaborative planning.

Task Scope: Handles entire repositories, builds/maintains complex software, generates/edits files, executes terminal commands, debugs with headless browser. Can convert mockups from images, fix bugs with screenshots.

Unique Features: Open-source VS Code integration, "Plan & Act" modes, extensive LLM flexibility (BYOK), MCP Marketplace, .clinerules for project-specific instructions.

Strengths: Robust tool integration via MCP; high LLM flexibility; open-source transparency; collaborative planning features; human-in-the-loop for safety. In-editor UX.

Limitations: The permission-based, step-by-step approval system can be tedious for highly iterative tasks. VS Code only. Potential system-access risks, mitigated by user approval.

Cursor with Background Agents

Overview: An AI-first code editor (fork of VS Code) designed for AI-powered development. "Background Agents" allow spawning asynchronous agents that edit and run code in a remote environment.

Code Execution Environment: Background Agents operate in remote environments, typically Cursor's AWS infrastructure. Setup via .cursor/environment.json (specifying base image or Dockerfile). The agent clones the repo from GitHub. Local terminal commands invoked through Cursor UI execute directly on the user's system without internal sandboxing, relying on "Cursor Rules" for approval.

Agentic Capabilities:

Tool Integration: Background agents edit files, run code, and execute commands remotely. Integrate with GitHub for cloning and pushing changes. Regular agent mode has tools for searching, editing, file creation, terminal commands, and MCP tools.

Reasoning: Background agents tackle tasks asynchronously. Regular agent mode features multi-step planning and contextual project understanding. Enabling "Thinking" restricts the choice of models to those that support step-by-step reasoning.

LLM Provider: Background Agents use Max Mode-compatible models. Cursor supports models from OpenAI, Anthropic, Google, xAI. Users can bring own API keys for some. Key agentic features may work best or exclusively with natively supported LLMs.

Licensing: Proprietary, commercial product. Cursor is built on a fork of the open-source VS Code codebase, but the Cursor editor itself, including its agent features, is proprietary.

Autonomy and Human Oversight: Background Agents run asynchronously and in parallel; users can view status, send instructions, or take over. Initial remote machine setup can be complex. Regular agent mode can operate autonomously but allows review, especially with auto-run. Very high autonomy for background agents.

Task Scope: Background Agents for parallelizing work, fixing "nits," investigations, first drafts of medium PRs. Regular agent for complex refactors, multi-file edits.

Unique Features: Parallel, asynchronous execution in configurable remote environments. Deep IDE integration, flexible context management (documentation indexing), Max Mode, native terminal emulation.

Strengths: Offloading tasks to remote environments; parallel execution; integration with Cursor's AI capabilities; integrated development experience; strong AI capability; predictable cost for heavy users.

Limitations: Background Agents are still in preview; they require Privacy Mode to be turned off; remote environment setup is complex; pricing is based on tokens plus potential compute charges; the attack surface is larger because agents hold GitHub read/write privileges and code resides on Cursor's AWS infrastructure; closed-source and model-locked; editor lock-in; click-intensive approval in agent mode unless YOLO mode is enabled; no web access for the agent.

Aider

Overview: An open-source AI pair programming tool running in the user's terminal, allowing chat with LLMs to edit code in local git repositories.

Code Execution Environment: Operates directly in the user's local terminal and interacts with local files. /run <command> allows shell command execution in the user's local environment. No explicit built-in sandboxing; relies on user's environment security or external tools like CodeGate.

Agentic Capabilities:

Tool Integration: Strong Git integration, auto-committing changes. Can execute shell commands (/run). Can process images and web page URLs provided in chat as context. Automatically runs linters and test suites after modifications.

Reasoning: Can be given a map of the entire git repo for understanding large codebases. Supports multi-file edits. Different chat modes ("code," "architect," "ask"). Can fix problems detected by linters/tests.
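To illustrate what a "repo map" provides, here is a simplified sketch that summarizes each Python file by its top-level classes and functions, yielding compact context an LLM can read instead of whole files. It uses Python's standard `ast` module for illustration only; Aider's actual repo map is built differently (per its documentation it parses many languages and ranks the most relevant symbols), so treat this as an approximation of the concept, with hypothetical function names.

```python
import ast
from pathlib import Path


def summarize_source(source: str) -> list[str]:
    """Return one line per top-level function/class, plus method names for classes."""
    tree = ast.parse(source)
    lines: list[str] = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = ", ".join(a.arg for a in node.args.args)
            lines.append(f"def {node.name}({args})")
        elif isinstance(node, ast.ClassDef):
            lines.append(f"class {node.name}")
            for item in node.body:
                if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef)):
                    lines.append(f"    def {item.name}")
    return lines


def repo_map(root: str) -> str:
    """Build a text map of every .py file under root, skipping unparsable files."""
    base = Path(root)
    out: list[str] = []
    for path in sorted(base.rglob("*.py")):
        try:
            summary = summarize_source(path.read_text(encoding="utf-8"))
        except (SyntaxError, UnicodeDecodeError, ValueError):
            continue
        out.append(f"{path.relative_to(base)}:")
        out.extend(f"  {line}" for line in summary)
    return "\n".join(out)


example = '''
class Cart:
    def add(self, item): ...
    def total(self): ...

def checkout(cart, user): ...
'''
print("\n".join(summarize_source(example)))
```

A call such as `repo_map(".")` on a project would concatenate these summaries per file; the point is that signatures, not bodies, are often enough for an LLM to decide which files to open.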

LLM Provider: Highly flexible. Works best with Claude Sonnet, DeepSeek R1, OpenAI o3, and GPT-4.1. Can connect to almost any LLM, including local models (e.g., via Ollama) and models via web chat interfaces. Supports LiteLLM.

Licensing: Open-source (Apache 2.0).

Autonomy and Human Oversight: Operates as an AI pair programmer, implying a collaborative model where the human guides and reviews. Users can interrupt, undo, and edit manually. Medium autonomy, works collaboratively.

Task Scope: New features, changes, bug fixes, test cases, documentation updates, refactors. Effective in large codebases due to repo map. High scores on SWE-Bench.

Unique Features: Terminal-based, strong Git integration, repo map, voice-to-code, image/URL context, flexible LLM support, in-chat commands (/run, /add, /drop, /undo), prompt caching.

Strengths: Open-source; powerful Git integration; LLM flexibility; strong contextual understanding of large repos; robust CLI; good balance of automation and control; simplicity.

Limitations: UX for those preferring IDEs (though usable in IDE terminals); security of /run command depends on local environment/caution unless paired with tools; less user-friendly for some than IDE plugins; not fully autonomous, user must break down tasks; won't search web independently.

ZenCoder

Overview: An AI coding agent platform focused on embedding AI agents into developer workflows and IDEs to handle routine tasks. Founded by Andrew Filev.

Code Execution Environment: Integrates into IDEs. "Autonomous Zen Agents for CI/CD" run within the customer's CI infrastructure (e.g., Jenkins, GitHub Actions), not Zencoder's servers. This emphasizes security for enterprise. "Coffee Mode" allows autonomous execution of "safe" terminal commands in the IDE. Emphasizes enterprise-grade security (ISO 27001, GDPR, CCPA) with integrated sandboxing.

Agentic Capabilities:

Tool Integration: Connects with 20+ developer tools (Jira, Sentry, GitHub, etc.). Agents resolve issues, create PRs, automate ticket responses. Supports MCP as a client.

Reasoning: "Agentic Chat" for human-AI collaboration. "Agentic Pipeline" orchestrates specialized AI agents for code generation, validation (static analysis, syntax, dependencies, conventions), and iterative repair until quality thresholds are met. "Repo Grokking™" deeply analyzes entire repository for contextual understanding.
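The generate, validate, repair pattern behind such a pipeline can be sketched as a loop that runs a list of checks and hands any findings to a repair step until none remain or an iteration budget runs out. In this sketch the checks (Python syntax via the built-in `compile`, and a hypothetical "no `print()`" convention) and the string-based `repair` function are placeholders for the specialized LLM agents described above; this is not Zencoder's pipeline.

```python
from typing import Callable

Check = Callable[[str], list[str]]


def syntax_check(code: str) -> list[str]:
    try:
        compile(code, "<candidate>", "exec")
        return []
    except SyntaxError as err:
        return [f"syntax: {err.msg} (line {err.lineno})"]


def convention_check(code: str) -> list[str]:
    # Hypothetical team convention: no bare print() calls in library code.
    return ["convention: avoid print()"] if "print(" in code else []


def repair(code: str, issues: list[str]) -> str:
    # Placeholder for an LLM repair call; here only the convention issue is fixed.
    if any(issue.startswith("convention") for issue in issues):
        code = code.replace("print(", "logger.info(")
    return code


def pipeline(code: str, checks: list[Check], max_rounds: int = 3) -> tuple[str, list[str]]:
    """Validate and repair until no findings remain (the quality threshold here)."""
    for _ in range(max_rounds):
        issues = [issue for check in checks for issue in check(code)]
        if not issues:
            return code, []
        code = repair(code, issues)
    # Budget exhausted: report whatever findings are left.
    return code, [issue for check in checks for issue in check(code)]


fixed, remaining = pipeline("def f(x):\n    print(x)\n", [syntax_check, convention_check])
print(remaining, repr(fixed))
```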

LLM Provider: Uses a mix of multiple proprietary and open-source LLMs. Supports MCP. Does not seem to offer direct end-user selection of arbitrary base LLMs, focuses on its compound AI system. Documentation states users can connect own API keys from supported LLM providers and switch between them.

Licensing: Commercial, proprietary platform with free, business, and enterprise tiers. Offers an open-source marketplace for "Zen Agents" and tools. Recent official documentation indicates Zencoder is open source with permissive licenses, contradicting older views.

Autonomy and Human Oversight: Varying levels. "Coffee Mode" for autonomous safe commands and background work. Autonomous Zen Agents for CI/CD operate independently in CI, submitting PRs for human review. Agentic Chat implies collaboration. Medium-High autonomy.

Task Scope: Bug fixing, refactoring, new feature development, code/test generation, documentation, code repair, real-time optimization. CI agents automate bug resolution, security patching, docs maintenance. Can generate complete projects. Reports strong SWE-Bench performance (e.g., 60%+ on SWE-Bench-Verified, ~30%+ on SWE-Bench-Multimodal, 70% on SWE-Bench overall).

Unique Features: Repo Grokking™, Agentic Pipeline/Repair™, Coffee Mode, Autonomous Zen Agents for CI/CD, 20+ DevOps tool integrations, open-source Zen Agents Marketplace, strong enterprise security focus.

Strengths: Deep codebase understanding; advanced AI pipelines for code quality/repair; strong enterprise integrations (CI/CD, DevOps); robust security/compliance; Zen Agents Marketplace fosters extensibility.

Limitations: Core platform historically proprietary (recent claims of open-source need reconciliation); LLM backend curated, less direct choice for users if not using own API keys.

Augment Code Remote Agents

Overview: Cloud-based version of Augment's IDE-bound agent, for asynchronous and parallel tasks. Augment Code focuses on leveraging team's collective knowledge.

Code Execution Environment: Each Remote Agent in its own secure, independent cloud environment with workspace, repo copy, and virtualized OS, managed by Augment. Arrakis (open-source MicroVM sandboxing) mentioned as a similar robust sandboxing type.

Agentic Capabilities:

Tool Integration: Access to terminal, MCP servers, external integrations (GitHub, Linear, Jira, Supabase). Can create, edit, delete code.

Reasoning: Breaks requests into functional plans. Powered by "Context Engine" and LLM architecture. Long-term memory ("Memories") and MCP tool scheduling. Can learn user habits. Iterates based on test/execution output. Leverages large context (200k tokens) and "Repo Grokking" for deep understanding.

LLM Provider: Deeply integrated with model combinations like Claude Sonnet 4 and o3. Curated, optimized (and sometimes opaque) model stack rather than BYOK for end-users.

Licensing: Proprietary, commercial service. Has open-sourced an SWE-bench agent implementation as a baseline. SOC 2 Type II certified.

Autonomy and Human Oversight: "Normal" mode (pauses for approval) or "auto" mode (acts independently). Users monitor via VS Code dashboard, can SSH into agent's environment, provide follow-ups, or instruct PR creation. Checkpoints for rollbacks. High autonomy.
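Checkpoints can be thought of as snapshots of the workspace taken before agent steps, so a bad step can be rolled back. The source does not describe how Augment implements them; this minimal in-memory sketch uses a hypothetical `Workspace` class that maps file paths to contents, purely to illustrate the idea.

```python
class Workspace:
    """Hypothetical in-memory workspace: file path -> file contents."""

    def __init__(self, files: dict[str, str]) -> None:
        self.files = dict(files)
        self.checkpoints: list[dict[str, str]] = []

    def checkpoint(self) -> int:
        """Snapshot the current files and return the checkpoint id."""
        # Strings are immutable, so a shallow copy of the dict is a full snapshot.
        self.checkpoints.append(dict(self.files))
        return len(self.checkpoints) - 1

    def rollback(self, checkpoint_id: int) -> None:
        """Restore the snapshot and discard any checkpoints taken after it."""
        self.files = dict(self.checkpoints[checkpoint_id])
        del self.checkpoints[checkpoint_id + 1:]


ws = Workspace({"app.py": "print('v1')\n"})
before_edit = ws.checkpoint()
ws.files["app.py"] = "print('broken')\n"  # an agent edit we want to undo
ws.files["tmp.txt"] = "scratch"
ws.checkpoint()                           # a later checkpoint, discarded by the rollback
ws.rollback(before_edit)
print(ws.files)
```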

Task Scope: Feature implementation, dependency upgrades, writing PRs, clearing engineering backlogs (flaky tests, stale docs, small bugs), refactoring, test coverage, bulk config migrations. Best for small, well-scoped tasks ("army of eager interns"). Can explore alternatives via parallel agents.

Unique Features: Parallel, asynchronous execution in secure, managed cloud environments. "Context Engine" and "Memories" for deep understanding and personalization. Checkpoints for rollbacks.

Strengths: Powerful remote execution for backlogs and parallel development; sophisticated context management; learns user preferences; tasks don't tie up local resources.

Limitations: Lack of user-configurable LLM providers; need to trust third-party cloud with codebase (though enterprise security like customer-managed keys offered).

Goose

Overview: Developed by Block, Inc., Goose is a free, open-source AI agent framework designed to automate developer workflows. It operates locally on the user's machine (via desktop app or CLI) and moves beyond simple code suggestions to function as a versatile assistant that can execute multi-step tasks, interact with local tools, and be customized for specific projects through an open protocol.

Code Execution Environment: Goose is a local-first application that runs directly on the user's machine. It interacts directly with the local file system, terminal, and development environment without routing code through a Goose-operated server; code leaves the machine only as context sent to the LLM provider the user has configured (or not at all when a local model is used), keeping user data private and under the user's control.

Agentic Capabilities:

Tool Integration: Integrates with IDEs like VS Code and JetBrains. Its core extensibility comes from MCP, which allows Goose to connect with a wide array of tools like GitHub, Google Drive, Slack, and any command-line utility.

Reasoning: Capable of autonomous, multi-step task execution. It can break down high-level requests into a sequence of actions (read file, run test, apply patch), analyze outputs, and proceed accordingly. It features a "Lead/Worker Model Pattern," using a powerful LLM for planning and a faster, cheaper model for execution to optimize performance and cost.
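The Lead/Worker pattern amounts to routing each model call by its role. In the sketch below, the model names and the `call_model` stub are hypothetical, and routing on a simple "plan" versus "execute" role label is an assumption made for illustration; it is not Goose's configuration or API.

```python
from dataclasses import dataclass, field


def call_model(model: str, prompt: str) -> str:
    # Stub standing in for a real LLM API call.
    return f"[{model}] {prompt}"


@dataclass
class LeadWorkerRouter:
    lead_model: str = "large-planner-model"  # hypothetical model names
    worker_model: str = "small-fast-model"
    calls: list[tuple[str, str]] = field(default_factory=list)

    def choose(self, role: str) -> str:
        # Planning goes to the stronger model; routine execution to the cheaper one.
        return self.lead_model if role == "plan" else self.worker_model

    def run(self, role: str, prompt: str) -> str:
        model = self.choose(role)
        self.calls.append((role, model))  # record routing decisions for inspection
        return call_model(model, prompt)


router = LeadWorkerRouter()
plan = router.run("plan", "Migrate utils.rb to Kotlin")
for step in ["read utils.rb", "write Utils.kt", "run tests"]:
    router.run("execute", step)
print(router.calls)
```

The expensive model is consulted only once, for the plan, while the three execution steps go to the cheaper model, which is the cost and performance rationale the pattern is meant to capture.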

LLM Provider: A key feature is its model-agnosticism. Goose is designed to work with a variety of LLMs from different providers, including Anthropic (Claude), OpenAI (GPT), and local models run through services like Ollama. This gives users complete flexibility and avoids vendor lock-in.

Licensing: Goose is a fully open-source project released under the permissive Apache 2.0 license, allowing for free use, modification, and distribution, even for commercial purposes.

Autonomy and Human Oversight: Goose is a user-directed agent. While it can autonomously execute complex, multi-step workflows (called "recipes"), it operates based on explicit commands from the user. It has medium autonomy, independently handling the intermediate steps of a defined workflow but requiring human initiation. Complex tasks require iterative encouragement for it to continue with implementation.

Task Scope: Handles complex tasks including code migrations (e.g., Ruby to Kotlin), generating and running unit tests, refactoring across multiple files, API scaffolding, debugging, and automating build processes. It is also used internally at Block for non-engineering tasks like incident response and data analysis.

Unique Features: Local-first operation for privacy, complete LLM-agnosticism, free and open-source (Apache 2.0), and profound extensibility via the Model Context Protocol (MCP).

Strengths: Maximum flexibility, privacy, and control due to its open-source, model-agnostic, and local-first architecture. It is highly cost-effective as the framework itself is free.

Limitations: Its effectiveness depends heavily on the quality of the connected LLM and on the user's ability to write effective prompts or "recipes." It sometimes shows or suggests changes instead of applying them, or insists on changing only a couple of files at a time, applying changes iteratively and requiring confirmation at each step rather than all at once, as illustrated below.

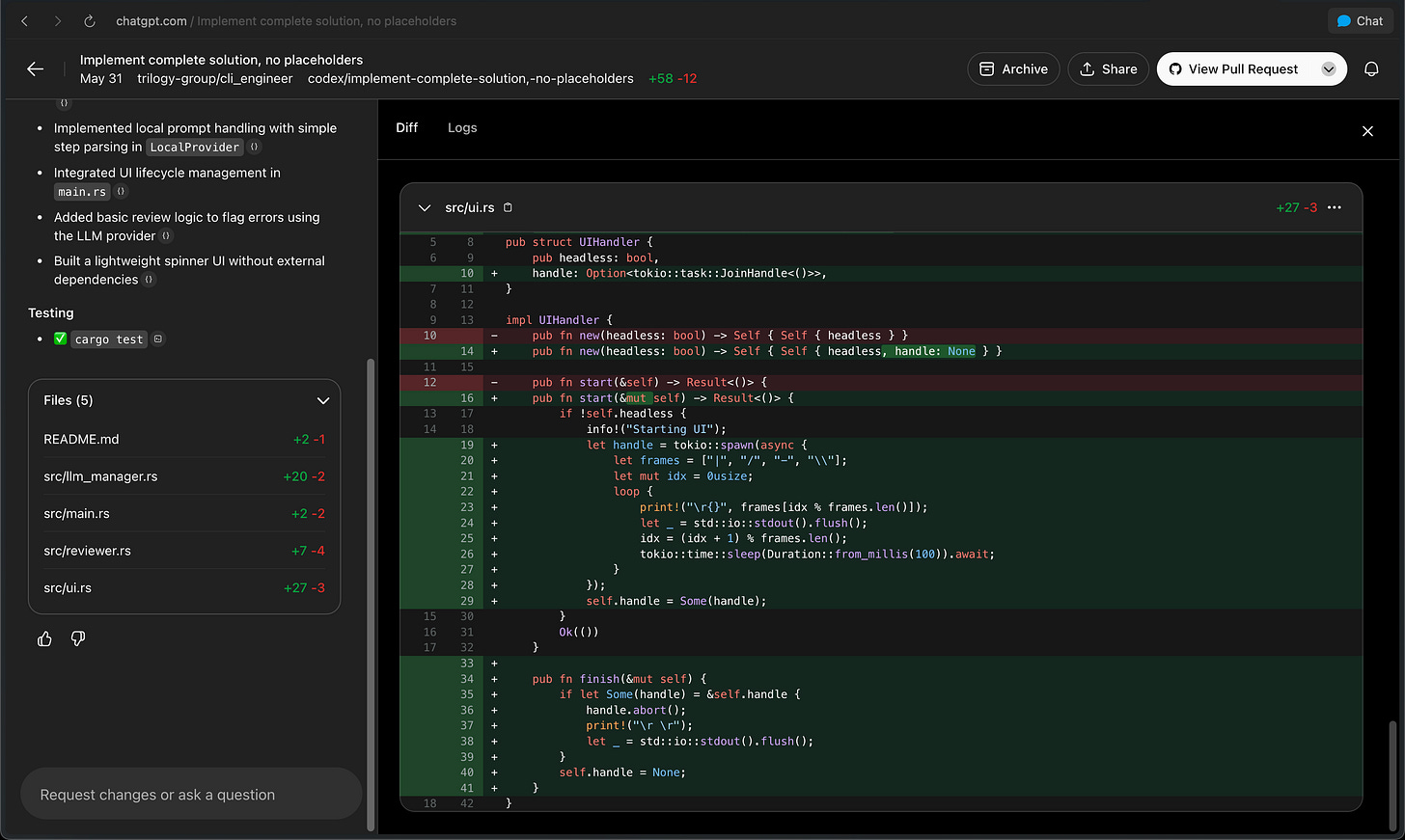

cli_engineer

Overview: cli_engineer is an open-source, autonomous coding agent introduced in 2025 by Leonardo Gonzalez (Trilogy AI Center of Excellence). Written in Rust, it's a command-line tool designed to automate software development tasks. It functions as an "agentic CLI for software engineering automation" by integrating task interpretation, planning, execution, and review into a closed-loop system. The project is MIT-licensed and saw over 1000 downloads in its first week.

Code Execution Environment: The agent runs as a local CLI application, built in Rust for performance and safety. It provides a terminal-based dashboard with real-time logs by default, along with a plain-text mode for scripting. Its internal architecture is event-driven and modular, featuring pluggable LLM providers. A configuration system scopes actions like file reads and writes to maintain codebase safety. The future roadmap includes plans for secure sandboxed code execution.

Agentic Capabilities:

Reasoning: The agent operates on an "agentic loop," an iterative cycle of reasoning and execution. It plans actions, calls AI models, and refines the output until the given task is complete, logging its thought process along the way.

Task Scope: cli_engineer handles various software engineering tasks based on high-level instructions. Key commands support code generation, code review, documentation drafting, security analysis, and refactoring. For instance, it can generate a full-stack application from a description or analyze a codebase for specific issues like error handling, outputting the results to predefined targets like new source files or a Markdown report.

Tools: Built-in tools handle prompt construction, file changes, code scanning, and context management. Integration with MCP server tools is planned for the near future.

LLM Provider: A key differentiator is its vendor-agnostic, multi-LLM backend support. It can connect to cloud models from OpenAI and Anthropic (with Google integration in progress) and to aggregator APIs such as OpenRouter. It also supports local open-source models like LLaMA and DeepSeek via Ollama for offline or private use. Teams can therefore switch providers through a config file to balance cost, speed, and privacy.
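The agentic loop described under Reasoning can be illustrated with a minimal Python sketch. It is not cli_engineer's Rust implementation: `AgentLoop`, the `call_model` callable, the `PLAN`/`EXECUTE`/`REVIEW` prompt prefixes, the `DONE` completion signal, and the iteration cap are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentLoop:
    """Hypothetical plan -> execute -> review loop; not cli_engineer's actual code."""
    call_model: Callable[[str], str]  # stand-in for any LLM provider call
    max_iterations: int = 5           # hard cap so the loop always terminates
    log: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        plan = self.call_model(f"PLAN: {task}")
        self.log.append(f"plan: {plan}")
        output = ""
        for step in range(1, self.max_iterations + 1):
            # Execute: produce a new draft, feeding back the previous one.
            output = self.call_model(f"EXECUTE: {plan}\nPREVIOUS: {output}")
            self.log.append(f"step {step}: {output}")
            # Review: ask whether the task is complete; stop on "DONE".
            verdict = self.call_model(f"REVIEW: {task}\nOUTPUT: {output}")
            self.log.append(f"review {step}: {verdict}")
            if verdict.strip().upper().startswith("DONE"):
                break
        return output


# Usage with a scripted stand-in model that approves the second draft.
drafts = {"count": 0}


def fake_model(prompt: str) -> str:
    if prompt.startswith("PLAN"):
        return "write the helper, then refine it"
    if prompt.startswith("EXECUTE"):
        drafts["count"] += 1
        return f"draft v{drafts['count']}"
    return "DONE" if drafts["count"] >= 2 else "REVISE"


agent = AgentLoop(call_model=fake_model)
result = agent.run("add a helper function")
print(result)     # draft v2
print(agent.log)  # full trace, analogous to a saved session log
```

The iteration cap is a design choice worth keeping in any such loop: a model that never declares the task finished would otherwise run indefinitely.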

Licensing: The project is open-source and MIT-licensed.

Autonomy and Human Oversight: The agent operates with a high degree of autonomy, interpreting a request and working in a loop until it considers the task finished. The interactive dashboard allows developers to monitor progress in real-time. Oversight is provided by scoping actions to the project directory and to predefined operations for each command; for example, the review command will not alter source code. Full session logs can be saved for auditing.
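The scoping described here can be sketched as a pre-flight check on every file action. This is a hypothetical Python illustration, not cli_engineer's code: the `ALLOWED_OPS` table, the command names other than `review`, and `check_action` are assumptions. It confines paths to the project directory and enforces per-command operations, but it is not a sandbox, since executed code is not isolated.

```python
from pathlib import Path

# Hypothetical per-command permissions: "review" may read but never write.
ALLOWED_OPS = {
    "review": {"read"},
    "docs": {"read", "write"},
    "refactor": {"read", "write"},
}


class ScopeError(PermissionError):
    """Raised when an action falls outside the invoked command's scope."""


def check_action(project_root: str, command: str, op: str, target: str) -> Path:
    """Return the resolved target if `command` may perform `op` on it; raise otherwise."""
    if op not in ALLOWED_OPS.get(command, set()):
        raise ScopeError(f"command '{command}' may not perform '{op}'")
    root = Path(project_root).resolve()
    # resolve() collapses ".." segments (and follows existing symlinks), so
    # targets such as "../secrets.txt" or "/etc/passwd" land outside root.
    path = (root / target).resolve()
    if not path.is_relative_to(root):  # Python 3.9+
        raise ScopeError(f"'{target}' is outside the project directory")
    return path


# Usage: a refactor may write inside the project; a review may not write at all.
print(check_action("/srv/project", "refactor", "write", "src/main.rs"))
for args in [("review", "write", "src/main.rs"), ("refactor", "read", "../secrets.txt")]:
    try:
        check_action("/srv/project", *args)
    except ScopeError as err:
        print("blocked:", err)
```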

Unique Features: Its command-line-first approach focuses on large-scale project automation. The vendor-agnostic design and local model support were conceived as a response to closed-source assistants, aiming for transparency, reliability, and security. Its Rust implementation provides a lightweight, fast, and memory-safe runtime.

Strengths: Provides a robust, self-directed coding agent with a focus on flexibility and an open-source ethos. It gives control back to developers, avoiding vendor lock-in and forced data sharing.

Limitations: It currently lacks secure, sandboxed code execution, though this is on the project's roadmap. Its full capabilities depend on planned features like a VS Code extension and a plugin system.

Cross-Agent Comparative Insights

Sandboxing Strategies: Security and Isolation Approaches

Coding agents secure code execution in different ways, reflecting different philosophies on trust, control, and where execution takes place.

Direct Local Execution (No Explicit Sandbox):

Goose: Operates as a local-first application running directly in the user's environment. It relies on the security of the user's local system rather than a built-in, isolated sandbox.

cli_engineer: Runs directly in the local environment, with codebase safety managed by scoping file I/O operations. A full sandbox is on the roadmap.

Aider: No explicit built-in sandboxing; relies on the security of the user's environment or supplementary tools.

Local Sandboxing:

Codex CLI: Robust local sandboxing using OS-specific mechanisms (sandbox-exec on macOS, Docker on Linux with firewall rules).

OpenHands: Typically uses Docker locally, with recommendations for hardened configurations.

IDE-Integrated / Extension-Based Execution with User Permissions:

Claude Code: Requires explicit approval for all file modifications.

Cline: Uses a human-in-the-loop GUI for approving file changes and terminal commands.

Cursor: For local command execution, relies on "Cursor Rules" for user approval rather than an isolated execution sandbox.

Cloud-Based Sandboxing:

Codex Cloud Agent: Runs tasks in isolated Docker containers in OpenAI's cloud with strict network control.

Cursor (Background Agents): Execute in remote environments on Cursor's AWS infrastructure, configurable via files.

Augment Code Remote Agents: Each agent gets a secure, independent cloud environment with a virtualized OS.

Devin: Operates in a comprehensive sandboxed cloud environment (shell, editor, browser), with optional VPC deployment.

ZenCoder (CI Agents): Runs within the customer's CI environment, keeping code and execution within trusted infrastructure. Other operations use integrated sandboxing.

OpenHands (Daytona Runtime): An optional runtime providing dynamic workspace management, enterprise-grade infrastructure, and resource optimization.

This diversity reflects different balances between user control, convenience, and trust. Local, user-configured sandboxes offer high control but place the security burden on the user, while managed cloud sandboxes offer convenience but require trust in the vendor.

Agentic Prowess: Tooling, Reasoning, and Planning Maturity

An agent's effectiveness is largely determined by the richness of its toolset, the sophistication of its reasoning and planning, and its understanding of the codebase.

Tool Richness:

Extensive: OpenHands (coding, CLI, web, API, tool creation); Cline (MCP tool ecosystem, files, terminal, browser); Zencoder (20+ DevOps tools, Jira, Sentry, CI/CD, MCP client); Devin (integrated shell, editor, browser, web search); Goose (extensible via MCP).

Moderate: Codex (Cloud & CLI: shell, file patching, Git, tests); Cursor (terminal, files, GitHub, MCP tools); Augment Code Remote Agents (terminal, MCP, GitHub, Linear).

Focused: Claude Code (file ops, Git, tests, web search); Aider (Git, shell for tests/linters, image/web context); cli_engineer (prompt construction, file changes, code scanning, context management).

Reasoning & Planning Sophistication:

Explicit Planning: Devin (shows plan, "Interactive Planning" in 2.0); Cline ("Plan & Act" modes); Augment Code (breaks requests into functional plans); Zencoder ("Agentic Pipeline"); Goose (Features a "Lead/Worker Model Pattern": powerful LLM for planning and faster model for task execution); cli_engineer (operates on an "agentic loop" of iterative planning, execution, and refinement).


Deep Codebase Understanding: Zencoder ("Repo Grokking"); Aider (repository map); Augment Code ("Context Engine," "Memories"); Claude Code (agentic search); Devin ("Devin Search," "Devin Wiki"); Cursor (codebase indexing).

Self-Correction/Iteration: Devin (corrects based on test errors); Zencoder ("Agentic Repair™"); Aider (fixes linter/test issues); Cursor (attempts linter error fixes).
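The Lead/Worker Model Pattern attributed to Goose above can be illustrated in a few lines. This is a simplified, hypothetical sketch rather than Goose's implementation: `lead_worker`, the prompt wording, and the rule that a failed worker step is escalated back to the lead model are assumptions made for the example.

```python
from typing import Callable

Model = Callable[[str], str]


def lead_worker(task: str, lead: Model, worker: Model) -> list[str]:
    """Hypothetical lead/worker split: a stronger model plans, a cheaper one executes."""
    # One call to the lead model turns the task into newline-separated steps.
    plan = lead(f"Break into steps: {task}")
    steps = [line.strip() for line in plan.splitlines() if line.strip()]
    results = []
    for step in steps:
        out = worker(f"Do: {step}")
        # Assumed escalation rule: a step the worker fails is retried by the lead.
        if out.startswith("ERROR"):
            out = lead(f"Do: {step}")
        results.append(out)
    return results


# Usage with scripted stand-ins; the worker "fails" on the parser step.
lead_prompts = []


def lead(prompt: str) -> str:
    lead_prompts.append(prompt)
    if prompt.startswith("Break"):
        return "write tests\nimplement parser"
    return "lead did " + prompt.removeprefix("Do: ")


def worker(prompt: str) -> str:
    if "parser" in prompt:
        return "ERROR: out of depth"
    return "worker did " + prompt.removeprefix("Do: ")


results = lead_worker("build a CSV parser", lead, worker)
print(results)  # ['worker did write tests', 'lead did implement parser']
```

The cost argument for the pattern is visible in the call counts: the expensive lead model is called once for planning plus once per escalation, while routine steps go to the cheaper worker.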

Model Context Protocol (MCP): Emerging as a standard for tool extensibility, MCP is supported or planned by Cline, Zencoder (as a client), Codex CLI, OpenHands, Augment Code, and Goose, and cli_engineer plans to add it soon. MCP standardizes interaction with external tools and data sources, potentially creating an interoperable ecosystem.
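To make the standardization concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server's tool with a `tools/call` request whose result carries a list of content blocks. The sketch below only builds and parses those messages; the transport (stdio or HTTP) and the initialization handshake are omitted, and the `search_issues` tool is hypothetical.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need unique ids within a session


def tools_call_request(tool: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def tool_result_text(response: str) -> str:
    """Join the text content blocks of a `tools/call` response; raise on errors."""
    msg = json.loads(response)
    if "error" in msg:  # protocol-level JSON-RPC error
        raise RuntimeError(msg["error"]["message"])
    result = msg["result"]
    texts = [b["text"] for b in result.get("content", []) if b.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool error: " + " ".join(texts))
    return "\n".join(texts)


# Usage: "search_issues" is a hypothetical tool exposed by some MCP server.
request = tools_call_request("search_issues", {"query": "login bug"})
reply = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "3 open issues"}], "isError": False},
})
print(request)
print(tool_result_text(reply))  # 3 open issues
```

Because every MCP server speaks this same message shape, an agent that implements it once can use any compliant tool server, which is the interoperability the paragraph above describes.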

LLM Flexibility and Model Choice Impact

The choice of LLM affects performance, cost, and capabilities, and agents vary in how much flexibility they offer.

High Flexibility:

Aider: Broadly compatible (Claude, DeepSeek, OpenAI) and supports almost any LLM, including local models.

Cline: Extensive support (OpenRouter, Anthropic, DeepSeek, Gemini, OpenAI, Bedrock, Azure, Vertex, local models) via BYOK.

Codex CLI: Defaults to o4-mini; supports OpenAI API models and 100+ others via LiteLLM (Claude, Gemini).

OpenHands: Model-agnostic, supports OpenAI, Anthropic, local models. Allows user selection from a curated list.

Goose: Fully model-agnostic, supporting a wide variety of providers (Anthropic, OpenAI) and local models via services like Ollama to avoid vendor lock-in.

cli_engineer: Vendor-agnostic, with support for OpenAI, Anthropic, OpenRouter, and local models via Ollama; Google integration is in progress.

Moderate Flexibility (Platform/Ecosystem Constrained):

Claude Code: Primarily Anthropic models; enterprise users can also access them through Amazon Bedrock.

Cursor: Curated list (OpenAI, Anthropic, Google, xAI), allows BYOK for several. However, custom endpoints may disable key agentic features.

ZenCoder: Recent documentation indicates that users can connect their own API keys from supported LLM providers and switch models; it also supports the MCP client standard. It was historically seen as using a curated model mix.

Low/No Flexibility (Specific/Proprietary Models):

Codex Cloud Agent: Powered by OpenAI's codex-1.

Augment Code: Deeply integrated with a specific model combination ("Claude 3.7 + o3"); curated stack.

Devin: Uses LLMs like GPT-4 augmented with custom algorithms; no third-party API key support.

Broad LLM choice empowers users to balance cost, performance, and privacy, while tightly integrated specific models may offer more optimized experiences.
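Configuration-driven provider switching, which several of the high-flexibility agents offer, usually rests on a common provider interface plus a registry. A minimal sketch, assuming a hypothetical JSON config with `provider` and `model` keys; the backends are stubs that echo their input instead of calling real APIs, and none of this reflects a specific agent's configuration format or code.

```python
import json
from dataclasses import dataclass
from typing import Protocol


class LLMProvider(Protocol):
    """Interface every backend implements (hypothetical)."""
    name: str
    model: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class CloudProvider:
    name: str
    model: str

    def complete(self, prompt: str) -> str:
        # A real backend would send an HTTPS request to the vendor's API here.
        return f"[{self.name}:{self.model}] {prompt}"


@dataclass
class LocalProvider:
    name: str
    model: str

    def complete(self, prompt: str) -> str:
        # A real backend would call a local server (for example, Ollama) here.
        return f"[local:{self.model}] {prompt}"


# Config names mapped to backend classes; adding a backend means adding an entry.
REGISTRY = {
    "openai": CloudProvider,
    "anthropic": CloudProvider,
    "openrouter": CloudProvider,
    "ollama": LocalProvider,
}


def load_provider(config_text: str) -> LLMProvider:
    """Build the backend named by a JSON config like {"provider": ..., "model": ...}."""
    cfg = json.loads(config_text)
    name = cfg["provider"]
    if name not in REGISTRY:
        raise ValueError(f"unknown provider: {name}")
    return REGISTRY[name](name=name, model=cfg["model"])


# Usage: switching providers only requires changing the config text.
local = load_provider('{"provider": "ollama", "model": "llama3"}')
cloud = load_provider('{"provider": "anthropic", "model": "example-model"}')
print(local.complete("explain this diff"))  # [local:llama3] explain this diff
print(cloud.complete("explain this diff"))  # [anthropic:example-model] explain this diff
```

Keeping the rest of the agent coded against the shared interface is what lets a team trade a cloud model for a local one (or vice versa) for cost or privacy reasons without touching agent logic.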

The Open-Source vs. Proprietary Divide

This choice impacts transparency, cost, customization, support, and data control.

Open-Source Agents:

OpenHands (MIT)

Codex CLI (Apache 2.0)

Cline (Apache 2.0)

Aider (Apache 2.0)

ZenCoder (recent official documentation describes it as open source under permissive licenses, a departure from earlier accounts)

Goose (Apache 2.0)

cli_engineer (MIT)

Advantages: Transparency, customization, community support, often lower direct costs (BYOK for LLM), greater data control potential.

Disadvantages: May need more technical setup/maintenance, variable community support, potentially less polished UX or feature parity.

Proprietary Agents:

Claude Code

Codex Cloud Agent

Cursor

Augment Code Remote Agents

Devin

Advantages: Often polished UX, dedicated customer support, potentially more advanced/integrated functionalities, enterprise-grade security/compliance.

Disadvantages: Costs (subscriptions, usage fees), vendor lock-in risk, reduced transparency, potential data privacy concerns with cloud processing.

Hybrid Models/Open Core: Some proprietary solutions open-source components or foster marketplaces. ZenCoder offers an open-source marketplace for "Zen Agents." Augment Code open-sourced an SWE-bench agent implementation. This strategy can stimulate community engagement while maintaining core platform control.

Autonomy Spectrum: Assistant to Autonomous Developer

Agents operate across a wide autonomy spectrum.

High Human Oversight (Assistants/Pair Programmers): Prioritize developer control and explicit approval.

Claude Code (requires approval for file mods)

Aider (pair programming, user directs and reviews)

Cline (human-in-the-loop GUI for permission)

Codex CLI (Suggest/Auto Edit modes require approval)

Configurable/Moderate Autonomy: Balance automation with oversight.

Codex CLI (Full Auto mode in sandbox)

Cursor (Agent Mode/Background Agents can operate autonomously, user can intervene)

Augment Code Remote Agents ("auto mode" for independent plan implementation)

ZenCoder ("Coffee Mode" for safe autonomous commands; CI Agents operate autonomously submitting PRs for review)

Goose operates with medium-high autonomy. It is user-directed but can autonomously execute complex, multi-step workflows (called "recipes") after receiving an initial command. In my experience, however, it needed repeated prompting before it finished the work it said remained.

High Autonomy (Aspiring Autonomous Developers): Aim to function as autonomous software engineers.

Devin (initially branded for end-to-end completion; 2.0 more collaborative but still high autonomy)

OpenHands (aims for generalist agents similar to human developers)

Augment Code Remote Agents (high autonomy for independent work)

ZenCoder (strong task-specific autonomy, CI/CD agents)

cli_engineer (full autonomy once a task is invoked; runs its reasoning loop until it considers the goal achieved)

User trust depends on reliability, safety mechanisms, transparency, and verifiability. Lower-autonomy agents often face lower adoption barriers, while highly autonomous agents demand strong safety and review processes.
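In practice, the difference between these autonomy levels often comes down to which actions run without asking. The following sketch loosely models the three Codex CLI modes named above (Suggest, Auto Edit, Full Auto) as an approval table; the `Mode` and `Action` names and the `gate` helper are hypothetical and not taken from any agent's source.

```python
from enum import Enum
from typing import Callable


class Mode(Enum):
    SUGGEST = "suggest"      # every edit and command needs approval
    AUTO_EDIT = "auto-edit"  # file edits apply automatically; commands need approval
    FULL_AUTO = "full-auto"  # edits and commands run unprompted (ideally sandboxed)


class Action(Enum):
    READ = "read"
    EDIT = "edit"
    COMMAND = "command"


# Actions each mode allows without asking the user.
AUTO_APPROVED = {
    Mode.SUGGEST: {Action.READ},
    Mode.AUTO_EDIT: {Action.READ, Action.EDIT},
    Mode.FULL_AUTO: {Action.READ, Action.EDIT, Action.COMMAND},
}


def gate(mode: Mode, action: Action, description: str,
         ask_user: Callable[[str], bool]) -> bool:
    """Return True if the action may run, prompting only when the mode requires it."""
    if action in AUTO_APPROVED[mode]:
        return True
    return ask_user(f"Allow {action.value}: {description}?")


# Usage: a user who declines every prompt.
def decline(question: str) -> bool:
    return False


print(gate(Mode.AUTO_EDIT, Action.EDIT, "update utils.py", decline))   # True
print(gate(Mode.AUTO_EDIT, Action.COMMAND, "run the tests", decline))  # False
print(gate(Mode.FULL_AUTO, Action.COMMAND, "run the tests", decline))  # True
```

Expressing autonomy as data rather than scattered checks makes the policy easy to audit, which matters for the trust and verifiability concerns raised above.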

Task Complexity and Project Scalability

Agent suitability depends on task complexity and project scale.

Simple Edits & Boilerplate: Most agents (Aider, Claude Code, basic Cursor/Cline modes) are proficient.

Multi-File Refactoring & Feature Implementation: Aider, Claude Code, Cline, Cursor (Agent Mode), ZenCoder, Augment Code Remote Agents, Devin, Goose.

Large Codebase Understanding:

Aider (repository map)

ZenCoder ("Repo Grokking")

Augment Code ("Context Engine," "Memories," 200k context)

Devin 2.0 ("Devin Search," "Devin Wiki")

Claude Code (agentic search, large context window)

Cursor (codebase indexing)

End-to-End Project Development:

Devin (makes claims in this area, though reviews suggest it performs better on smaller segments of work)

ZenCoder (can generate complete projects)

OpenHands (aims for full-capability software development)

cli_engineer (can generate a full project's code from a description)

CI/CD Integration & Automation:

ZenCoder ("Autonomous Zen Agents for CI/CD")

GitHub Copilot (new agent capabilities in GitHub Actions)

SWE-Bench Performance (Self-Reported/Observed):

Devin: 13.86% unassisted.

ZenCoder: 60%+ (SWE-Bench-Verified), >30% (SWE-Bench-Multimodal), 70% (SWE-Bench overall).

Aider: "Top scores."

OpenHands: 53% resolve rate (CodeAct with Claude Sonnet); leading scores in Multi-SWE-Bench.

Augment Code: Claims a 70% win rate over GitHub Copilot and a record SWE-Bench score.

Note: SWE-Bench has limitations (Python focus, potential data contamination).

Emerging Trends and Outlook in Autonomous Coding

The rapid development of autonomous coding agents indicates significant trends:

From Code Generation to Task Automation: A clear move from snippet generation to agents handling complete development tasks and complex workflows. Zencoder's CI agents and Devin's end-to-end project ambitions exemplify this.

Primacy of Deep Codebase Understanding: Effective contribution to large projects requires sophisticated context gathering. Technologies like Zencoder's "Repo Grokking", Aider's repository maps, Augment Code's "Context Engine", and Devin 2.0's "Devin Search" and "Devin Wiki" are key.

Multi-Agent Systems and Collaboration: For complex tasks, collaborative multi-agent systems are emerging. OpenHands supports multi-agent delegation, and Cognition Labs' "MultiDevin" concept coordinates multiple Devin instances.

Standardization of Tool Use (e.g., MCP): Protocols like Model Context Protocol (MCP) standardize agent interaction with external tools.

Human-in-the-Loop and Collaborative Autonomy: Significant human oversight and collaboration will likely continue. Even highly autonomous agents like Devin are evolving towards more interactive models. The "AI partner" model acknowledges indispensable human expertise.

Enhanced Security and Safety: More sophisticated sandboxing, permission systems, and approval mechanisms are being developed to balance power with safety.

Local vs. Cloud Deployment Trade-offs: Tension persists between local control/privacy and cloud power/scalability.

Ethical Considerations and Job Market Impact: Discussions about developer roles, skill evolution, and job market impact are ongoing, with "augmentation" often emphasized over "replacement."

Specialization appears key, with tools optimizing for different aspects of the software development lifecycle. The "human-agent interface" is a critical design frontier.

The Choice Before the Autonomous Developer

The autonomous coding agents reviewed in this guide represent more than just a technological leap; they signify a fundamental fork in the road for software development. On one path lie polished, proprietary systems offering immense power at the cost of control, tethering developers to closed ecosystems where a single corporate decision can disrupt entire workflows. On the other path are open, transparent, and flexible tools that prioritize developer freedom, demanding more from the user but offering true ownership in return.

This brings us to the core of the matter, encapsulated in the title of this analysis: the rise of the Autonomous Developer. While we seek to build autonomous agents, the ultimate goal must be to preserve and enhance the autonomy of the human developer. An engineer who is free to choose, inspect, modify, and rely on their tools without fear of vendor lock-in is one who can truly innovate. Trust and transparency are not just features; they are the bedrock of a healthy and resilient development culture.

The creation of cli_engineer was a direct response to the fragility of a locked-in ecosystem. It, along with the other open-source agents in this guide, stands as a testament to the belief that the most powerful tools are those that empower, not entangle. They are built on the premise that developers should control their own means of production.

As we move forward, the most critical decision will not be which agent is marginally better at a given task, but which aligns with the future we want to build. The ultimate paradigm is not the replacement of the human, but the powerful synergy between a skilled developer and an AI partner they can trust, configure, and command. The future of our craft depends on the choices we make today to champion the tools that make us all truly autonomous.

"AI Engineer World’s Fair 2025 - Day 2 Keynotes & SWE Agents track" is a great recent 9-hour deep dive with presentations from major players in the industry: https://www.youtube.com/live/U-fMsbY-kHY