The Algorithm that Stopped Counting: When X’s AI Decided I Wasn’t Human

How X’s AI-driven moderation quietly reclassified my human voice as machine output and erased 95% of my replies from analytics.

The Day The Algorithm Decided I Wasn’t Human

On November 4th, everything worked.

My project, thealgorithm.live, drove nearly 400,000 impressions in a single day. Each reply was human-reviewed, edited, rewritten, curated, and approved by me.

Ten days later? 571 impressions.

A collapse of 99.85%.
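For anyone who wants to check the arithmetic, here is a minimal sketch. The baseline is only given as "nearly 400,000", so the round value of 400,000 below is an assumption, and the function name is purely illustrative.

```python
def percent_drop(before: float, after: float) -> float:
    """Return the percentage decline from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0

# Assumption: the November 4th baseline is rounded to exactly 400,000 impressions.
drop = percent_drop(400_000, 571)
print(f"{drop:.2f}%")
```

With a baseline of exactly 400,000 the result is about 99.86%, within a hundredth of a point of the figure above. The small gap is what you would expect from a baseline a little under 400,000.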

But the collapse wasn’t the revelation.

The revelation was this:

X didn’t stop showing my replies.

X stopped counting them.

Replies that were public, engaged with, exported to CSV, and visible in impressions simply weren’t counted as replies in analytics.

This wasn’t a glitch, rate limiting, or a shadowban.

This was algorithmic reclassification.

The algorithm didn’t silence me.

It simply stopped believing I was human.

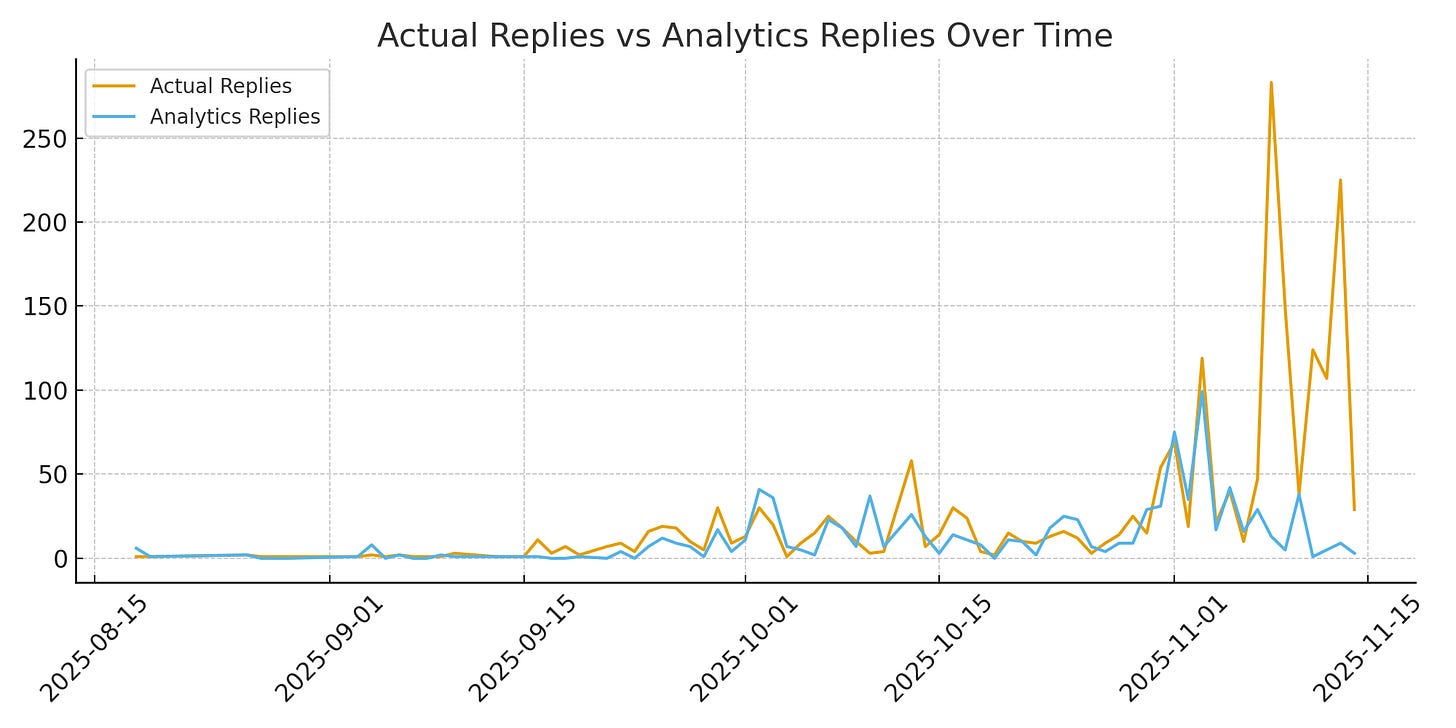

Actual Replies vs. Analytics Replies

Here’s the graph that exposed everything:

Day after day, X analytics reported only a fraction of the replies I posted:

225 replies → 9 counted

160 replies → 42 counted

261 replies → 16 counted

107 replies → 5 counted

124 replies → 1 counted

Analytics wasn’t delayed.

It was discarding.

“The platform didn’t stop showing my posts.

It stopped believing I was human.”

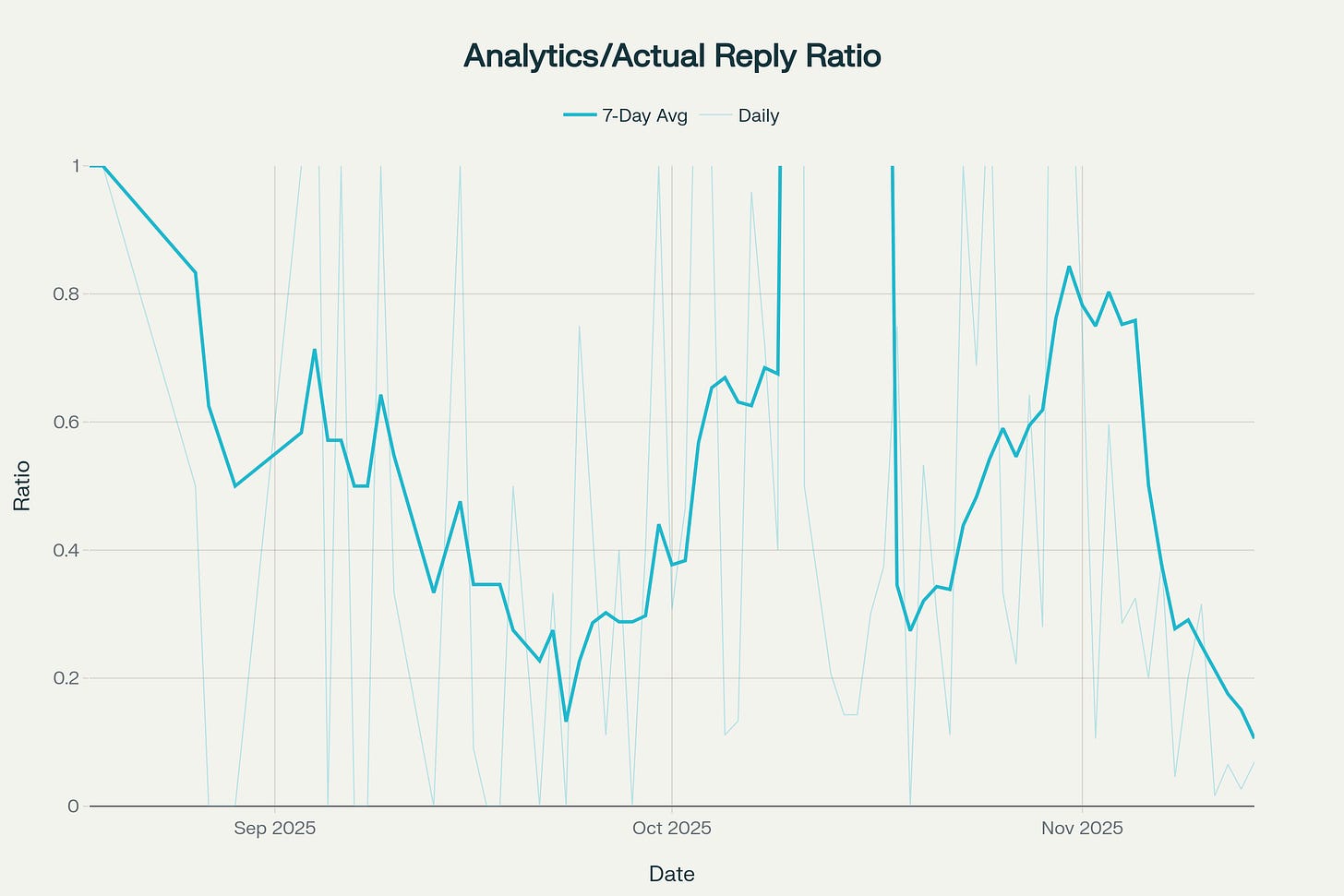

The Proportion X Recognized

If analytics were accurate, the ratio of counted/actual replies would hover near 1.0.

Mine? It swung between 0.20, 0.10, 0.05, 0.03, and sometimes 0.00.

That means 80–100% of my replies were treated as non-human events.
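The ratio is easy to recompute from the five days listed earlier. This is a minimal sketch that uses only those five pairs; the names are illustrative, and the longer series behind the charts is not reproduced here.

```python
# (replies posted, replies counted by analytics) for the five days listed above
days = [(225, 9), (160, 42), (261, 16), (107, 5), (124, 1)]

def counted_ratio(posted: int, counted: int) -> float:
    """Fraction of posted replies that analytics recognized."""
    if posted <= 0:
        raise ValueError("posted must be positive")
    return counted / posted

for posted, counted in days:
    ratio = counted_ratio(posted, counted)
    print(f"{posted:>3} posted, {counted:>2} counted: "
          f"ratio {ratio:.2f}, uncounted {(1 - ratio) * 100:.0f}%")

# Pool the five days to get a single overall figure.
total_posted = sum(p for p, _ in days)
total_counted = sum(c for _, c in days)
overall = counted_ratio(total_posted, total_counted)
print(f"overall: ratio {overall:.2f}, uncounted {(1 - overall) * 100:.0f}%")
```

On these five days alone the ratio runs from about 0.01 to 0.26, and pooled together roughly 92% of the 877 replies went uncounted.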

This didn’t begin overnight.

The undercounts started months ago, grew in October, and went vertical in November.

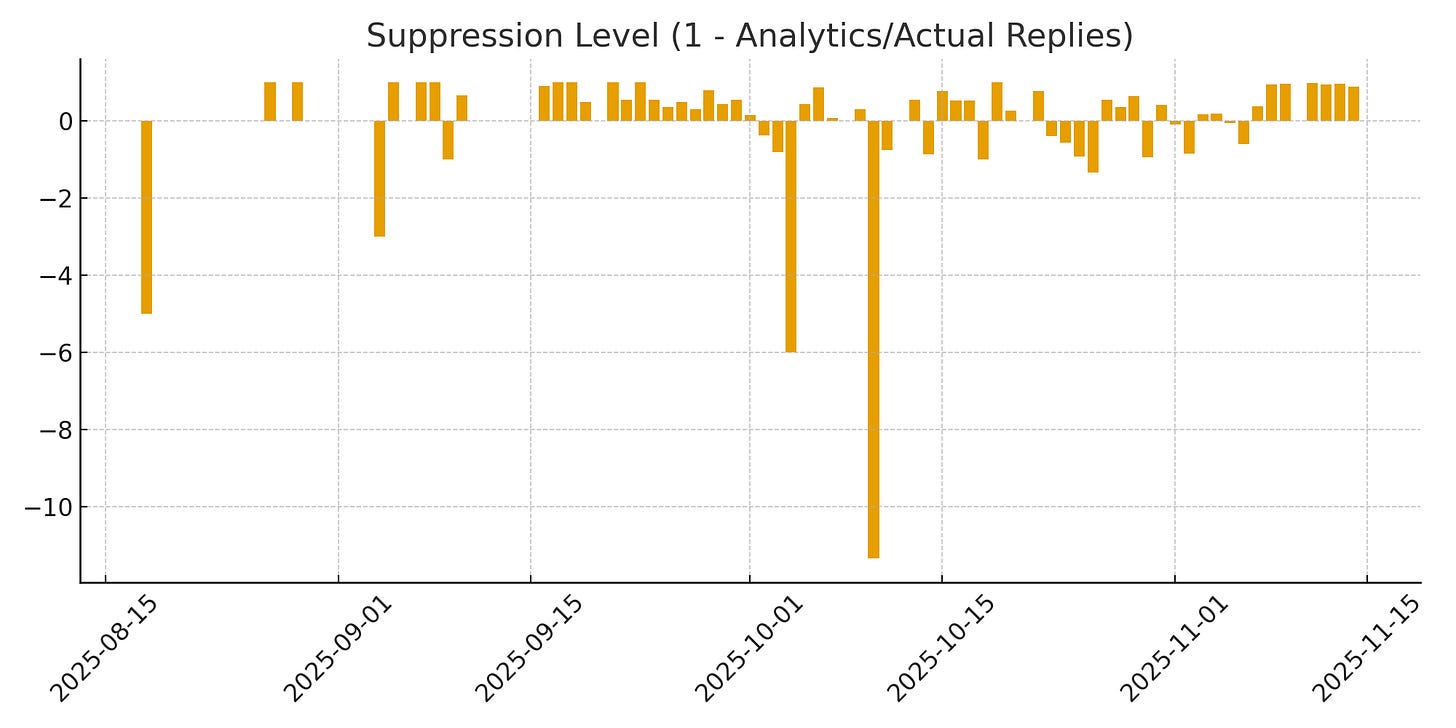

Suppression As a Percentage

Suppression heatmap visualization:

This isn’t random, occasional, or “just fluctuations.”

It’s a pattern, and the escalation aligns almost perfectly with X’s AI-driven Grok feed rollout.

X’s AI: “You’re Human. Analytics Says You’re Not.”

When the collapse hit and analytics ignored most of my activity, I tried something simple:

I asked Grok, X’s own AI, if I was being suppressed.

It told me:

“Your drop sounds anomalous… check for flagged replies.”

Exactly what my data reveals:

Replies that exist, receive impressions, appear in exports, but are erased from analytics.

Two days later, Grok said:

“X’s bot detection can be overzealous sometimes, but you’re clearly human.”

This is the contradiction:

Grok says I’m human.

Analytics says I’m a bot.

Support says nothing.

The only entity at X that responded to me … was the bot insisting I’m not a bot.

225 replies → 9 counted

261 replies → 16 counted

124 replies → 1 counted

This isn’t a shadowban.

It’s two AI systems at X disagreeing about my identity, with no transparency, no warnings, and no appeal.

“Their bot says I’m not a bot,

but their analytics says I am.”

Why Did It Misclassify Me?

My wife posts hundreds of replies manually.

She is never flagged.

Her activity:

Variable grammar

Typos

Irregular timing

Inconsistent length

Emotional variance

Natural entropy

My replies:

Consistent tone

Consistent structure

Clean punctuation

Regular pacing

Curated clarity

Manually reviewed and edited

Despite hours spent editing, selecting, rewriting, and approving each reply…

my behavioral patterns are clean enough to look synthetic.

Humans generate entropy.

AI-assisted humans generate consistency.

The classifier rewards chaos.

It penalizes clarity.
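X has not published how its detection works, so what follows only illustrates the intuition and does not describe the platform’s classifier. It is a minimal sketch that scores how regular a series of reply timestamps is, using the coefficient of variation of the gaps between them. The sample timestamps, the function name, and the idea that any platform uses this particular metric are all assumptions.

```python
from statistics import mean, pstdev
from typing import Sequence

def gap_variation(timestamps: Sequence[float]) -> float:
    """Coefficient of variation of the gaps between consecutive timestamps.

    Values near 0 mean metronome-like pacing; larger values mean irregular pacing.
    """
    if len(timestamps) < 3:
        raise ValueError("need at least three timestamps")
    ordered = sorted(timestamps)
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0
    return pstdev(gaps) / avg

# Hypothetical data (seconds since the first reply), invented for illustration.
regular = [0, 60, 121, 180, 241, 300]
irregular = [0, 15, 240, 260, 900, 910]

print(f"regular pacing:   {gap_variation(regular):.2f}")
print(f"irregular pacing: {gap_variation(irregular):.2f}")
```

The evenly paced series scores close to zero and the bursty one scores well above one. A detector that leaned on a signal like this would treat tidy pacing as suspicious, whoever wrote the replies.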

Do I Need Typos To Prove I’m Human?

To avoid synthetic flags, do I need to:

Add typos?

Inject punctuation errors?

Vary grammar on purpose?

Simulate fatigue?

Disrupt cadence?

Intentionally derail my own patterns?

Because in 2025:

Artificial chaos is easier to automate than artificial clarity.

If platforms require “chaotic humanity signals,”

they’re not detecting bots; they’re redefining humans.

“If platforms require humans to be imperfect on purpose,

are they detecting bots, or redefining humanity?”

When Platforms Say “We Don’t Suppress”… but the Data Isn’t Lying

X publicly claims:

No shadowbanning

No suppression

No downranking

“Freedom of speech, not reach”

But in reality:

Grok tells me: “Check for flagged replies”

Analytics erases the majority

Feed visibility collapses

Logs and analytics contradict

Support is silent

The AI says I’m human

Moderation says I’m not

This isn’t about political targeting; I post about tech, AI, and workflow.

This is systemic.

The Only Question That Matters

When your visibility, identity, and legitimacy are determined by machine-learning classifiers:

What happens when the classifier is wrong?

If the system decides your behavior “looks synthetic,” even if it’s 100% human-authored:

Replies stop being counted

Analytics becomes fiction

Impressions collapse

Reach dies

You can speak.

But if you’re not counted, you’re not visible.

Counting is existence.

What This Means for Creators

We’re entering a world where:

AI-generated content is suppressed

AI-assisted content is misclassified

Highly structured humans look “too good”

High-efficiency workflows trigger bot flags

Consistent voice = synthetic

Messy humans = authentic

Authenticity no longer means “real voice.”

It means:

“messy enough to fool the classifier.”

“The algorithm didn’t stop showing my posts.

It stopped counting them.”

Where I Go Next

I’m not stepping back.

I’m stepping forward, and documenting everything.

The next version of thealgorithm.live will:

Intentionally vary cadence

Mix tone and sentence structure

Blend manual + assisted replies

Introduce timing variance

Break patterns

Produce less optimized output

Not because I want to,

but because the platform’s moderation systems require it.

Until platforms offer “safe harbor” for AI-assisted human creation,

creators must tune their output to satisfy AI detectors…

not their audience.

The Ending the AI Didn’t Predict

My content didn’t change.

My intent didn’t change.

My audience didn’t change.

Only one thing changed:

The AI that decides what counts as human.

The graphs prove it.

What would you have done differently if you had seen this coming?