Beyond Adoption: Defining Real AI Impact at Trilogy

Trilogy’s 73% AI usage is industry-leading, but business value trails. Here’s how we’ll turn high adoption into measurable impact with standards, proven wins, and a culture of continuous learning.

Executive Summary

Trilogy has achieved what most companies dream of—73% of employee time spent in AI tools (Egglestone et al., 2025)—but high adoption without clear competency standards creates a paradox: some employees might be optimizing for time-in-tool rather than transformative outcomes. Our internal survey captured responses from the 10% who took time from their busy schedules to provide feedback. Among these respondents, 53% admit they're unsure if all of their AI use creates real value (Internal Survey: Meaningful AI Learning, 2025).

This isn't a motivation problem—it's a clarity problem. A VP of Operations initially highlighted this critical gap between AI usage and business value, prompting this deeper investigation into what meaningful AI learning actually requires. My analysis reveals the solution requires a dual approach: implement specific demonstrations of value while fundamentally changing how we think about AI competency and career progression.

Strong Adoption, Unclear Value

Trilogy leads the industry with 73% AI tool usage (Egglestone et al., 2025). Engineering reaches nearly 80% (Egglestone et al., 2025), far exceeding industry benchmarks where only 6% of firms have begun AI upskilling ‘in a meaningful way’ (Loh et al., 2024). We use over 30 AI tools on a day-to-day basis across the organization. By any adoption metric, we're succeeding. By any value metric, we're guessing. This aligns with global patterns where AI literacy remains at "moderately able" levels, 2.98 out of 5 (Mansoor et al., 2024). Individual wins do exist: a VP of SaaS reports a 35% speed-up in DevOps analysis using AI-enabled terminals (Warp.ai, Windsurf, Cursor agentic mode), directly accelerating infrastructure troubleshooting and cost optimization.

Our 10% survey response rate represents those who cared enough to share their perspectives despite heavy workloads (Internal Survey: Meaningful AI Learning, 2025). These employees took time to engage, experiment, and provide feedback. Their responses serve as our early warning system:

53% of survey respondents question whether their AI use delivers real business value (Internal Survey: Meaningful AI Learning, 2025)

58% want clearer guidance on meaningful AI application (Internal Survey: Meaningful AI Learning, 2025)

79% desire deeper integration but lack concrete next steps (Internal Survey: Meaningful AI Learning, 2025)

When half of our engaged participants admit uncertainty about value creation, the hidden cost across the entire organization demands attention. Greg Foote's operational observations confirm this gap between time-in-tool and tangible business outcomes (Greg Foote, personal communication, June 2025).

What Excellence Actually Looks Like

One team shows what happens when competency meets accountability. Omar Ortiz, Senior Vice President of Growth, reports that their Business Unit dedicates 4 hours weekly to AI exploration, with measurable results:

Product Team Achievements:

Release communications automation: 8 hours → 1 hour (87.5% reduction = 7 hours saved weekly)

Service optimization: 10 people → 2 people (80% headcount reduction)

Customer intelligence: 0 → 7,000+ accounts analyzed automatically

Research acceleration: Days → Minutes (95%+ time savings)
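The percentages in this list follow from simple before-and-after arithmetic. A minimal Python sketch, using only the figures reported above (the function name is illustrative, not part of any Trilogy tooling):

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage reduction going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


# Figures as reported in the list above.
release_comms = percent_reduction(before=8, after=1)   # hours per week
service_team = percent_reduction(before=10, after=2)   # people

print(f"Release communications: {release_comms:.1f}% less time")
print(f"Service optimization: {service_team:.1f}% fewer people")
```

The research figure is left out because the list gives only "Days → Minutes". As an illustration under an assumed 8-hour working day, any result delivered in under 24 minutes would clear the 95% mark.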

These results demonstrate what I define as true AI transformation: converting time-consuming manual processes into rapid, actionable outputs that fundamentally change how work gets done.

Real AI Competency Framework: A Bullshit Detector

Here's what actual AI competency looks like

This progression from surface-level to transformative use is supported by the work of Sun et al. (2022), who found that experts provide "tacit knowledge and more nuanced input" that novices cannot. This distinction highlights that moving beyond basic use to true innovation depends on the deep, experience-based conceptual understanding that experts possess. Since everyone at Trilogy uses AI (no laggards here), the question isn't adoption—it's innovation depth. Each level should tie to department-specific business KPIs, of course, but here is a framework I would recommend:

Level 1: The Prompt Jockey (Late Majority mindset in an AI-mandatory world) Uses AI because they have to, not because they see transformative potential. Can make ChatGPT produce a mediocre first draft. Thinks they're revolutionary because they type questions into a box. Time saved: Maybe 20%. Value created: Debatable at best.

Level 2: The Workflow Engineer (Early Majority—pragmatic value seekers) Chains multiple tools to eliminate 50%+ of recurring task time. Actually documents processes others can replicate. Stops treating AI like a magic 8-ball and starts treating it like infrastructure. These are your practical adopters who see peers succeeding and systematically apply those lessons.

Level 3: The Process Killer (Innovators and Early Adopters—the 16% driving 80% of breakthroughs) Identifies "impossible to automate" work and automates it anyway. Makes entire job categories obsolete (including their own). The Growth team's 80% headcount reduction? That's Level 3. These are risk-takers and opinion leaders, experimenting with AI applications others haven't imagined yet.

AI Center of Excellence Specific KPIs

Here are some concrete metrics I propose for the AI Center of Excellence team:

Level 1: Publishes 1 well-researched actionable AI article/resource weekly addressing documented issues from at least 1 department

Level 2: Beyond weekly publications, engages business units through polls/feedback; delivers 1+ monthly content piece co-created with or requested by BUs leading to measurable improvements; participates actively in office hours

Level 3: Proactively identifies critical unmet business gaps; delivers targeted AI solutions rapidly adopted organization-wide; facilitates 1+ quarterly knowledge-sharing initiative demonstrably accelerating cross-team AI capability; leads office hours with new approaches and peer mentoring

The gap between Level 1 and Level 3 isn't skill. March's (1991) distinction between exploration and exploitation explains it: Level 1 users merely exploit existing patterns, while Level 3 users explore new possibilities. As Andrei Aiordachioaie, VP of SaaS, notes, "Picking up new AI tools is pretty slow… it's really pain-driven development." This underscores the challenge: most teams adopt only when forced by urgent need, not proactive strategy.

VP's Perspective: Key Operational Insights

Greg Foote, VP of Operations, highlights three unique insights:

The 20-60-20 Rule: The most effective AI practitioners follow a consistent pattern of 20% human setup, 60% AI processing, and 20% human refinement (Cuervo, 2024). This ratio maintains critical thinking skills while leveraging AI's strengths. It prevents over-reliance while maximizing efficiency.

The Niche-to-General Pattern: People consistently underestimate how their ‘specific’ solutions apply broadly. What seems like a niche fix for municipal code analysis often contains patterns that apply to contract review, compliance checking, or any dense document parsing. The barrier isn't the applicability—it's the belief that others won't care.

Open-Door Learning: I'm committed to dedicating a weekly 30-minute slot for anyone to book and ask questions—no agenda needed. The best insights often come from informal conversations where people feel safe to explore half-formed ideas. This standing offer reflects my belief that accessible leadership accelerates AI adoption more than any formal training program.

These observations align with and validate the technical analysis presented throughout this document. The progression from basic queries to structured problem-solving mirrors exactly what we see in our operational metrics.

I asked myself: as an engineer and an employee, what would I personally care about?

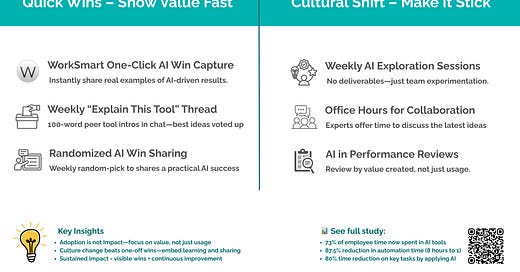

Track 1: Immediate Quick Wins

These initiatives leverage the peer effect documented by Loh et al. (2024), where seeing colleagues' AI applications accelerates adoption 2.6-fold. Their success will serve as tangible proof as we build toward deeper change:

1. AI Win Documentation System

Implement one-click WorkSmart capture button

Creates visible proof of AI value

2. Weekly ‘Explain this tool’ Thread

100-word tool explanations in General chat for a randomly picked tool

Peer voting surfaces best practices

3. Randomized Knowledge Sharing

Randomly select a person to share their latest AI win in 400 words

Surfaces hidden expertise across all levels

Track 2: Cultural Transformation Initiatives

While quick wins create momentum and proof of value for the leadership, lasting change requires systematic cultural shifts:

Weekly AI Exploration Sessions

Every department should dedicate 30-60 minutes weekly for pressure-free AI exploration. No deliverables, no metrics—just experimentation. This protected exploration time directly addresses the findings of Sun et al. (2022) by creating the conditions to move users from novice to expert. It allows them to build the capacity for the very skills—creative, generative, and nuanced contributions—that the study identified as the key differentiators between those who can merely use an AI and those who can innovate with it.

The Growth team's four-hour weekly blocks made their results possible, including the 80% headcount reduction in service optimization; even one hour weekly can start moving teams from Level 1 to Level 2. According to Andrei Aiordachioaie, a key obstacle is "dedicated time to actually build automations." While his team has begun automating, "they are not as extensive as I would like them to be." This illustrates exactly why time, structure, and institutional push are needed for widespread, meaningful adoption.

Structured Weekly Rhythm

Monday: Identify AI enhancement opportunities

Wednesday: Dedicated experimentation time

Friday: Share results and calculate impact

This rhythm, proven effective in high-performing departments, creates consistent progress without disrupting core work.

AI Center of Excellence Office Hours

Starting this week, the CoE will host weekly one-hour sessions where teams bring real challenges, share discoveries, and discuss the latest AI developments. These are not lectures but collaborative problem-solving sessions where everyone contributes insights. Book via calendar link (to be distributed).

Prompt & Strategy Library Deployment

I've built a Google Chat bot that captures, shares, and ranks our collective AI knowledge. But here's the truth: without widespread adoption, it's organizational waste. Teams should either actively contribute or document alternative approaches that achieve similar knowledge-sharing outcomes. Details in Appendix B.

Performance Review Integration

“Show me the incentive and I will show you the outcome” - Charlie Munger

AI competency progression must become a formal performance metric. Not "did you use AI?" but "what Level did you achieve?" and "what value did you create?" Until AI mastery is affecting promotions, we are slowly losing our edge.

VP's Perspective: What Actually Works

Here are some of my own observations as a VP of the AI Center of Excellence:

Clear structure beats magic: Feed AI garbage, get garbage. Feed it structure, get results.

One-day proof-of-concept rule: If I can't demo value in 8 hours, it's not worth pursuing.

Human-in-the-loop always: AI handles grunt work; humans handle judgment.

Voice-to-text captures thoughts 5x faster than typing—game-changer for idea capture. Multi-model ‘ping-pong’ between ChatGPT, Claude, and Gemini produces dramatically better outputs than single-tool loops. I built our Google Chat bot to institutionalize these discoveries—see Appendix B for technical details.

My manager's philosophy drives my approach: "If you haven't gotten comments on your AI research this week, you haven't learned." Learning from continuous feedback isn't optional in AI—it's survival.

The 90% Silent Majority: Leading Indicators

The 10% survey response rate likely reflects competing priorities and survey fatigue across our organization. However, these respondents serve as leading indicators for broader challenges. If even our most engaged employees—those who took time to complete the survey—struggle with value clarity (53% questioning their AI impact), the silent 90% likely face even greater challenges.

This pattern suggests the need for targeted follow-up: brief pulse surveys (3 questions maximum) within specific departments to validate these findings and capture perspectives from those too busy to engage initially. The 10% aren't a representative sample—they're the canaries in our coal mine, warning us of systemic issues before they become critical.
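One way to see why the 10% cannot stand in for everyone is to compute worst-case bounds on the organization-wide figure. The sketch below is illustrative arithmetic only. It assumes nothing about the non-respondents, so the lower bound treats all of them as confident in their AI value and the upper bound treats all of them as unsure. The function name is hypothetical.

```python
from typing import Tuple


def nonresponse_bounds(response_rate: float, share_among_respondents: float) -> Tuple[float, float]:
    """Worst-case bounds on an organization-wide share when non-respondents are unobserved.

    Lower bound: no non-respondent holds the view.
    Upper bound: every non-respondent holds the view.
    """
    if not (0.0 <= response_rate <= 1.0 and 0.0 <= share_among_respondents <= 1.0):
        raise ValueError("inputs must be fractions between 0 and 1")
    known = response_rate * share_among_respondents
    return known, known + (1.0 - response_rate)


# Survey figures from this document: 10% response rate, 53% unsure about value.
low, high = nonresponse_bounds(response_rate=0.10, share_among_respondents=0.53)
print(f"Share unsure about AI value, organization-wide: between {low:.1%} and {high:.1%}")
```

With the survey's figures the bounds come out at roughly 5.3% and 95.3%. An interval that wide is the practical argument for the short departmental pulse surveys: each additional response narrows it.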

From Time Tracking to Value Creation

Trilogy has solved the adoption challenge—our employees use AI constantly. Now we must solve the value challenge—ensuring that use drives measurable business impact. This shift from activity to outcome metrics addresses the core challenge identified by Mayer et al. (2025)—moving from the 92% planning AI investment to the 1% achieving true maturity.

My recommendations require parallel efforts:

Immediate: Launch visible quick wins that demonstrate AI's value potential

Fundamental: Implement the complete cultural transformation from Track 2

Ongoing: Build a culture where continuous AI learning is expected and rewarded

Research and operational evidence both confirm that visible recognition and clear advancement paths drive sustained behavioral change more effectively than monetary incentives alone.

The question for leadership: Will we commit to both tracks—quick demonstrations of value AND fundamental incentive alignment?

The AI Center of Excellence has planned the infrastructure. The Growth BU has proven the model. Now we must scale both the quick wins and the cultural transformation across the entire organization.

Special thanks to Greg Foote for initially highlighting this critical gap and providing valuable operational insights, and to Omar Ortiz, Andrei Aiordachioaie, and Stephanie Connor for their contributions to my investigation.

References

Egglestone, R., et al. (2025). Trilogy WorkSmart Analytics Report: AI tool adoption and architecture; productivity correlation (June 2025). Trilogy Engineering Team. (Ongoing research and data compilation, internal document).

Internal Survey: Meaningful AI Learning. (2025). [Internal data set]. Trilogy. Retrieved from here (access restricted to internal teams).

Cuervo, J. (2024, February 18). The 20-60-20 rule. Javier Cuervo Blog.

Loh, H.-H., Beauchene, V., Lukic, V., & Shenoy, R. (2024). Five must-haves for effective AI upskilling. Boston Consulting Group.

Mansoor, H. M. H., et al. (2024). Artificial intelligence literacy among university students: A comparative transnational survey. Frontiers in Communication, 9.

March, J. G. (1991). Exploration and exploitation in organizational learning. Organization Science, 2(1), 71–87.

Mayer, H., et al. (2025). Superagency in the workplace. McKinsey & Company.

Sun, L., Liu, Y., Joseph, G., Yu, Z., Zhu, H., & Dow, S. P. (2022). Comparing Experts and Novices for AI Data Work: Insights on Allocating Human Intelligence to Design a Conversational Agent. Proceedings of the AAAI Conference on Human Computation and Crowdsourcing, 10(1), 195-206.

Note: Personal communications (SVPs and VPs) are cited in-text only.

Appendix A: Questions and the Interview

This Google Doc (access restricted to internal teams) covers 3 sections:

Questions I emailed to VPs and SVPs

Targeted Questions for Greg Foote, VP of Operations

Gemini-generated summary of the interview with Greg

(These responses provided the basis for citations indicated as personal communications throughout the document.)

Appendix B: Technical Implementation Details

Prompt-Library Chat Bot: A Tool That Must Be Used or Killed

I built this tool. It works. Now it needs to be used by everyone or discontinued entirely.

The Reality: Tools that aren't universally adopted are organizational waste. Half-measures create confusion, duplicate effort, and fragment knowledge. This article serves as both documentation and justification for widespread adoption across all departments.

System Architecture:

Prompt-Library Chat Bot – lives directly in Google Chat; no extra log-ins required

Submit → Search → Vote – teammates add prompts, tag them, and up-/down-vote the most effective ones

Performance metrics – each prompt records token cost, latency, and qualitative uplift

Automated versioning – edits create new versions; peer votes surface the strongest iterations

Google Sheets backend – lightweight, transparent, zero new infrastructure needed

Full documentation – complete bot code and implementation guide here

Sample prompt library – I'll share my own favorite prompts here
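To make the submit, search, and vote loop concrete, here is a minimal in-memory sketch. It is not the bot's actual code, which lives in the linked documentation. All class and method names are hypothetical, a plain dictionary stands in for the Google Sheets backend, and the Google Chat integration and per-prompt metrics (token cost, latency) are left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptVersion:
    text: str
    votes: int = 0  # net score: up-votes minus down-votes


@dataclass
class PromptEntry:
    title: str
    tags: List[str]
    versions: List[PromptVersion] = field(default_factory=list)

    def best_version(self) -> PromptVersion:
        # Highest net votes wins; ties go to the most recent version.
        return max(reversed(self.versions), key=lambda v: v.votes)


class PromptLibrary:
    """Hypothetical stand-in for the sheet-backed store."""

    def __init__(self) -> None:
        self._entries: Dict[str, PromptEntry] = {}

    def submit(self, title: str, text: str, tags: List[str]) -> int:
        """Add a prompt, or a new version if the title exists. Returns the 1-based version number."""
        # Tags are taken from the first submission of a title; later edits keep them.
        entry = self._entries.setdefault(title, PromptEntry(title, [t.lower() for t in tags]))
        entry.versions.append(PromptVersion(text))
        return len(entry.versions)

    def vote(self, title: str, version: int, up: bool = True) -> None:
        self._entries[title].versions[version - 1].votes += 1 if up else -1

    def search(self, tag: str) -> List[PromptEntry]:
        """Entries carrying the tag, ranked by the score of their best version."""
        hits = [e for e in self._entries.values() if tag.lower() in e.tags]
        return sorted(hits, key=lambda e: e.best_version().votes, reverse=True)


library = PromptLibrary()
library.submit("release-notes", "Summarize these commits for customers.", ["writing"])
library.submit("release-notes", "Summarize these commits for customers in five bullets.", ["writing"])
library.vote("release-notes", version=2)
print(library.search("writing")[0].best_version().text)
```

Keeping every edit as a new version, rather than overwriting, is what lets peer votes surface the strongest iteration instead of simply the latest one.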

AI Ping-Pong: Multi-Model Workflow

Using multiple chatbots in sequence (e.g., GPT → Gemini → Claude) for drafting, validation, and polishing reliably boosts quality and reduces manual revision. My benchmarks show this ‘ping-pong’ approach achieves 98% content quality in under 20 minutes versus 76% for single-tool loops. See the full article for implementation details and results.
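The hand-off pattern can be sketched as a small pipeline. This is an illustration of the sequencing only, not the benchmarked workflow: the model callables are placeholders that tag the text, no real API is called, and the role names and `ping_pong` function are assumptions made for the example.

```python
from typing import Callable, List, Tuple

Model = Callable[[str], str]  # takes a prompt, returns text


def ping_pong(task: str, stages: List[Tuple[str, Model]]) -> Tuple[str, List[Tuple[str, str]]]:
    """Pass a piece of work through each (role, model) stage in order.

    Returns the final text plus a (role, output) log for human review.
    """
    current = task
    log: List[Tuple[str, str]] = []
    for role, model in stages:
        current = model(f"{role}:\n{current}")
        log.append((role, current))
    return current, log


def fake_model(name: str) -> Model:
    # Placeholder: a real client would send the prompt to a hosted model.
    # This one only prefixes the last prompt line so each hand-off is visible.
    return lambda prompt: f"[{name}] {prompt.splitlines()[-1]}"


final, history = ping_pong(
    "Write the release note",
    [
        ("Draft", fake_model("model-a")),
        ("Validate", fake_model("model-b")),
        ("Polish", fake_model("model-c")),
    ],
)
print(final)
```

Returning the per-stage log alongside the final text keeps a human in the loop: a reviewer can see what each model changed before anything ships.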

The Bottom Line: This infrastructure enables teams to rapidly share and iterate on successful AI approaches, turning individual discoveries into organizational capabilities. But only if it's used consistently across all teams.

This is an exceptionally thoughtful and practical breakdown of how to turn AI adoption into real organizational transformation.

The distinction between usage and impact resonates deeply. At Trilogy, we’ve already won the battle of “AI-mandatory”; now we need to win the war of AI-mastery. I especially appreciated the “Prompt Jockey → Process Killer” spectrum—it’s a sharp, intuitive framework for assessing both individual growth and team-level maturity. We’ve seen firsthand how even a single Process Killer can radically shift a department’s velocity and value creation.

To build on your Tracks 1 and 2, I’d propose introducing an AI Failure Forum: a monthly, low-stakes space where teams share what didn’t work—what broke, what confused the model, or what simply flopped. It would normalize exploration, reduce fear of failure, and accelerate collective learning. If we want AI fluency to take root culturally, we need to celebrate smart risk-taking just as much as refined outcomes.

Lastly, I want to echo the importance of performance review integration. Until AI competency meaningfully influences career progression—not just usage, but value delivered—we’re limiting the scale of our transformation. Let’s recognize not just the adopters, but the architects: those training the AI and reshaping how we work.

In short, this goes well beyond a roadmap for the CoE—it lays out a strategic path for operationalizing innovation at scale.

Excited to be a part of this evolution in how we work!