The Memory Framework Mirage: Data-Driven Reasons to Go Context-First

Opinion: From LangChain to Mem0, chaos testing and research demonstrate that million-token context windows plus a simple stack present a more compelling case than memory frameworks

EXECUTIVE SUMMARY

Million-token context windows redefine the cost-performance trade-offs for conversational AI. In our chaos testing, memory frameworks achieve 73% accuracy while a primitive baseline reaches 89%, at half the latency and without month-long integration overhead.

Context windows now dominate by default; frameworks add value only in niche edge-cases; the near-term future is hybrid if not entirely context-led.

INTRODUCTION

Million-token context windows from Google and OpenAI fundamentally alter the conversational AI landscape in 2025. These contexts hold roughly 750,000 words, or about 1,500 pages, and eliminate the core constraint that justified complex memory frameworks.

This shift mirrors the evolution of memory in AI systems. The challenge of emulating human-like memory in AI was exacerbated by fixed context limits [1].

Now, with contexts large enough to hold months of conversation, the question becomes: do memory frameworks remain essential infrastructure, or have they become unnecessary complexity?

This analysis examines the question through three lenses: context window capabilities that redefine the possible, framework evaluation methodology that reveals performance gaps, and real-world testing that exposes production failures. The next section demonstrates how these three lines of evidence converge to challenge the memory framework paradigm.

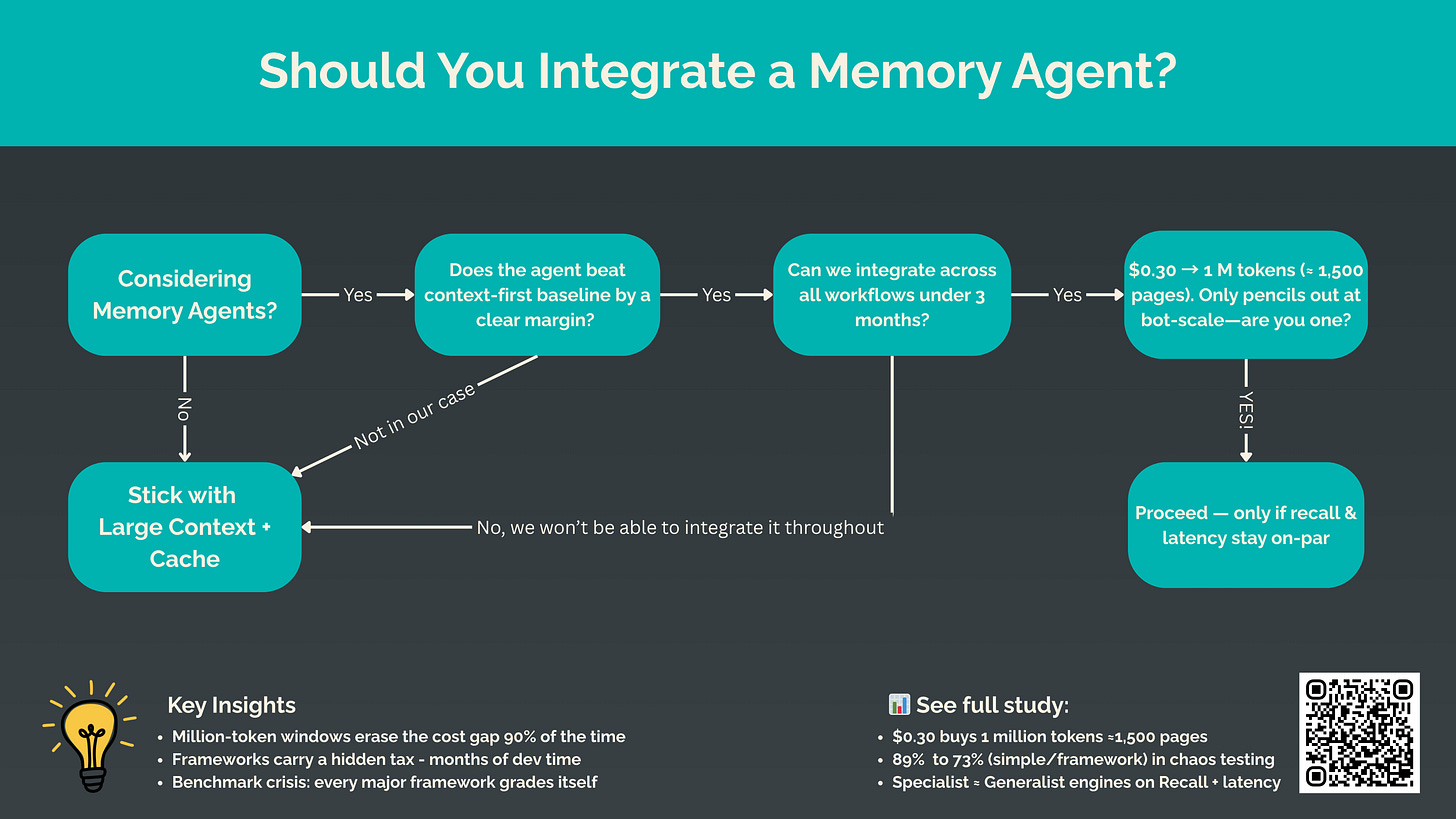

Million-token windows erase the cost gap 90% of the time

Frameworks carry a hidden tax - months of dev time

Benchmark crisis: every major framework grades itself

$0.30 buys 1 million tokens = 1,500 pages

89% to 73% (simple/framework) in chaos testing

Specialist + Generalist engines on Recall = Latency

THE CONVERGENT EVIDENCE: WHY CONTEXT WINDOWS ECLIPSE MEMORY FRAMEWORKS

The New Reality: Million-Token Context Windows

Google Gemini 2.5 Pro delivers a 1-million-token context window (2 million announced) with 94.5% accuracy on multi-round coreference at 128K tokens [2]. OpenAI GPT-4.1 matches this capacity, maintaining perfect needle retrieval "at all positions and all context lengths" [3]. Thomson Reuters reports a 17% accuracy improvement in production document review.

These specifications translate to concrete capabilities:

Text: Entire book series or legal case files

Code: 8+ complete React codebases

Conversation: Months of typical interactions

Documents: 1,500-2,000 pages of content

Gemini and GPT both offer 1M-token models, priced at $0.30 per 1M input tokens. The economics of this shift fundamentally undermine the framework value proposition.

See Appendix B for our assumptions about typical infrastructure costs.

Context windows prove cheaper for all use cases except extreme-volume bots. This economic reality, combined with zero integration overhead, makes them the rational default choice. Beyond API pricing, total cost analysis reveals deeper advantages. Anyscale demonstrates simple caching layers reduce costs by 90% for repetitive queries [4]. Databricks emphasizes that Total Cost of Ownership (TCO) for data and AI solutions goes beyond initial setup and includes significant ongoing labor and maintenance costs. They provide tools and advocate for approaches that reduce these operational burdens [5].
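To make the "simple caching layer" idea concrete, here is a minimal sketch of an exact-match response cache placed in front of a model call. It is an illustration only, not Anyscale's implementation: the class name CachedModel, the fake_model stand-in, and the normalisation rule (lowercasing and collapsing whitespace) are all assumptions made for this example.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Hypothetical wrapper: caches answers keyed on a normalised prompt."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumption: prompts that differ only in case or spacing are "the same".
        normalised = " ".join(prompt.lower().split())
        return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        # Cache miss: pay for one model call and remember the answer.
        self.misses += 1
        answer = self._call_model(prompt)
        self._cache[key] = answer
        return answer


def fake_model(prompt: str) -> str:
    # Stand-in for a real (billable) model call.
    return f"answer to: {prompt.strip()}"


cached = CachedModel(fake_model)
for question in ["Reset my password", "reset  my password", "Where is my order?"]:
    cached.ask(question)
```

In this toy run the second question is served from the cache, so only two of the three requests reach the model. How much a real workload saves depends entirely on how repetitive its queries are.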

The Framework Response: Systemic Benchmark Failures

Given this context window revolution, one would expect memory frameworks to demonstrate clear superiority in their benchmarks to justify their continued existence. Instead, technical examination across the entire ecosystem reveals a pattern of methodological failures that cast doubt on all performance claims.

Several projects (e.g. LlamaIndex's upcoming HumanEval plug-in) have begun adding human annotators—promising, but not yet standard.

The pattern is unmistakable: no framework provides rigorous, end-to-end evaluation of conversational memory under production conditions.

Each exhibits fundamental flaws:

LangChain makes no performance claims at all, instead providing LangSmith as an evaluation platform. This "bring your own benchmark" approach enables developers to create the same flawed, circular evaluations we criticize in Mem0—using LLMs to judge their own outputs without human validation [23].

LlamaIndex focuses exclusively on component-level metrics like Hit Rate and Mean Reciprocal Rank for retrieval. While more structured than generic prompts, their ResponseEvaluator still relies on LLM judges for "faithfulness" and "relevancy" assessments, perpetuating the same circular evaluation problem [24].

Haystack grounds evaluation in traditional Information Retrieval metrics, achieving impressive F1 scores (87.35 for RoBERTa large). However, these rigorous component benchmarks reveal nothing about end-to-end conversational memory performance or behavior under chaos conditions [25].

Zep exemplifies the "benchmark wars" problem. They claim 23% higher accuracy than Mem0 on LOCOMO, but Mem0 immediately disputed the methodology. This vendor-vs-vendor benchmarking, without independent validation, leaves users without trustworthy performance data [26].
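For readers unfamiliar with the component-level retrieval metrics named above, the following sketch computes Hit Rate and Mean Reciprocal Rank on a toy dataset. The function names and sample document IDs are hypothetical and this is not LlamaIndex's code. It shows only what the metrics measure: whether the right document was retrieved and how high it ranked, not whether the final conversational answer was correct.

```python
from typing import Sequence


def hit_rate(ranked: Sequence[Sequence[str]], relevant: Sequence[str], k: int = 5) -> float:
    """Fraction of queries whose relevant document appears in the top k results."""
    hits = sum(1 for results, target in zip(ranked, relevant) if target in results[:k])
    return hits / len(relevant)


def mean_reciprocal_rank(ranked: Sequence[Sequence[str]], relevant: Sequence[str]) -> float:
    """Average of 1/rank of the relevant document (0 when it was not retrieved)."""
    total = 0.0
    for results, target in zip(ranked, relevant):
        if target in results:
            total += 1.0 / (list(results).index(target) + 1)
    return total / len(relevant)


# Toy data: three queries, each with a ranked result list and one relevant doc ID.
retrieved = [["d1", "d7", "d3"], ["d4", "d2", "d9"], ["d5", "d6", "d8"]]
expected = ["d1", "d2", "d0"]

top3_hit_rate = hit_rate(retrieved, expected, k=3)   # 2 of 3 queries hit
mrr = mean_reciprocal_rank(retrieved, expected)      # (1 + 1/2 + 0) / 3
```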

The absence of chaos testing across all frameworks is particularly telling. None test:

Memory pollution with contradictory information

Strategic forgetting capabilities

High-entropy production inputs

Concurrent user stress scenarios

The systemic failure to test these scenarios suggests frameworks optimize for benchmark performance rather than real-world robustness.

Production Reality: Where Theory Meets Practice

The gap between benchmark claims and production reality becomes stark when subjected to chaos testing (see detailed breakdown in Appendix C).

Our Memory Agents Benchmarking Suite tests production failure modes that sanitized benchmarks miss [9], revealing how the convergence of large contexts and simple primitives outperforms complex frameworks:

See Table A-1 in Appendix A for the full latency chain.

The chaos testing specifically probes scenarios that the entire framework ecosystem ignores:

Strategic forgetting capabilities

Memory pollution handling

High-entropy input processing

Concurrent user stress testing

These gaps explain why frameworks achieve 73% accuracy in real conditions versus their claimed superiority. The MultiChallenge benchmark provides external validation—even top models achieve <50% accuracy maintaining consistency through distractors [10], aligning with our findings.

2025 VectorDBBench results on a 10M-vector (768-dim) Cohere dataset show that SingleStore's general-purpose engine keeps pace with dedicated stores: it delivered P99 search latencies of 6.5–7.7 ms, beating Pinecone (14.2–51.7 ms) and coming within striking distance of Zilliz (3.8–6.2 ms). Throughput rankings put Zilliz first, SingleStore a close second, and Pinecone last, while recall was virtually identical across all three (88.8%–91.5%) [6].

The takeaway: an integrated, general-purpose stack can match—sometimes beat—specialised vector databases without adding another platform to your architecture.

The availability of million-token contexts eliminates the primary justification for frameworks. The systemic benchmark failures across the entire ecosystem mask real performance limitations. And production testing reveals simple primitives outperform complex abstractions. Each piece of evidence reinforces the others—challenging any one requires explaining away all three.

Stanford's ARES project confirms that meaningful evaluation requires sophisticated assessment beyond string matching [7]. The Retrieval-Augmented Generation Benchmark explicitly tests noise robustness and negative rejection [8]—capabilities the entire framework ecosystem ignores. This academic validation further reinforces how benchmark flaws, when corrected through proper testing, reveal the superiority of simple approaches leveraging large contexts.

The convergent evidence is clear: primitive architectures excel through transparency (direct visibility into operations), control (no hidden LLM calls or abstractions), reliability (decades of proven patterns), and debuggability (clear failure modes and fixes). When combined with million-token contexts, they deliver superior results without the integration overhead, maintenance burden, or performance penalties of memory frameworks.

VP'S PERSPECTIVE

As Sam Altman says, ‘Startups building businesses that benefit from improving AI models are on the right side of history.’

The tools built to patch early limitations (like Mem0's memory-retention hack) quickly lose relevance once the big providers roll out updates like million-token contexts.

LIMITATIONS

Unresolved Technical Questions

This analysis does not fully address:

Multimodal support & selective-forget requirements: Still open research areas

Cross-User Knowledge: Sharing information between users requires external storage

Session Persistence: Contexts reset between sessions, losing continuity

Methodological Constraints

Our chaos testing, while revealing, represents targeted probes rather than comprehensive production benchmarks. Results indicate directional performance but require validation at scale. Detailed engineering costs appear in Table A-2 (Appendix A).

EDGE-CASE WINS & INDUSTRY PATTERNS

Where Frameworks Legitimately Excel

Memory frameworks provide irreplaceable value for:

Structured Knowledge Graphs: Building entity relationships over years, enabling "What themes have we discussed?" queries

Selective Memory Deletion: GDPR compliance through surgical removal of specific memories

Multimodal Unified Storage: Vector databases combining text, image, and audio embeddings

Cross-Session State: Maintaining consistency across months of interactions

Success Stories in Niche Applications

Procter & Gamble (2023): 30,000 employees accessing knowledge bases via LangChain [11]

BrowserUse (2024): 98% task completion using Mem0 for web agents [12]

Boston Consulting Group (2024): BCG X uses LangChain to build and deploy generative AI applications for its major clients, and has released "AgentKit" [13]

The Abandonment Pattern

Despite niche successes, technology companies consistently avoid frameworks:

Discord: Built on ScyllaDB without memory frameworks [14]

DoorDash: Adopted a managed platform strategy for its large-scale generative AI contact center, building on AWS Bedrock and Anthropic's Claude to cut development time by 50% and handle hundreds of thousands of daily calls, prioritizing rapid deployment and scalability over a custom-built stack [15]

With over a third of engineering time (37.5%) going to code maintenance and operational issues [16], these exits collectively reinforce Pillar 3's theme: maintenance, not modeling, drives long-term cost.

THE HYBRID VIEW: COMBINING STRENGTHS

OpenAI's Managed Approach

The Assistants API demonstrates hybrid architecture [17]:

Persistent Thread objects store complete history

Automatic truncation fits relevant portions into context

Developers avoid complexity while gaining benefits
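The store-everything, send-only-what-fits pattern described above can be sketched in a few lines. This is not the Assistants API's actual truncation logic. The function names, the message format, and the rough four-characters-per-token estimate are assumptions chosen for illustration.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    # Assumption: roughly 4 characters per token; a real system would use a tokenizer.
    return max(1, len(text) // 4)


def fit_to_budget(history: List[Dict[str, str]], budget_tokens: int) -> List[Dict[str, str]]:
    """Keep the most recent messages whose combined size fits within the budget."""
    kept: List[Dict[str, str]] = []
    used = 0
    # Walk backwards from the newest message and stop at the first one that does not fit.
    for message in reversed(history):
        cost = estimate_tokens(message["content"])
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


# The full thread stays in storage; only the window is sent to the model.
thread = [
    {"role": "user", "content": "a" * 400},       # ~100 tokens
    {"role": "assistant", "content": "b" * 200},  # ~50 tokens
    {"role": "user", "content": "c" * 80},        # ~20 tokens
]
window = fit_to_budget(thread, budget_tokens=80)
```

With an 80-token budget, the oldest message is dropped from the prompt but remains in the stored thread. With a million-token budget, the same function would simply return the whole history.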

Anthropic's Primitive Power

Anthropic provides 200K contexts and trusts developers [18]:

RAG pre-filters massive document sets

Full results process within large context

Cost-aware architecture decisions

Google's Application-Specific Design

Google tailors architecture by use case [19]:

Search uses real-time RAG for current information

Gemini processes documents in massive contexts

Vertex AI manages enterprise conversation state

Specialized Patterns

Alexa separates lightweight dialog tracking from persistent user profiles [20].

Meta uses on-device caches for privacy with server-side memory for complex reasoning [21].

Spotify's AI DJ combines content knowledge (RAG) with user preferences (persistent memory) [22].

It is evident that even huge enterprises choose the hybrid approach, combining contexts and memory strategically.

CONCLUSION

Three pillars of evidence converge on a clear verdict. Context windows handle 750,000 words at competitive cost. Framework benchmarks across the entire ecosystem overstate real-world performance through flawed methodology. Simple primitives outperform complex frameworks in production chaos.

Memory frameworks fail to justify their complexity for most applications. They remain valuable for structured knowledge graphs, selective deletion, multimodal storage, and extreme-scale operations. For everything else, context windows plus simple persistence deliver superior results.

Strategic Recommendations

Default Choice: Context windows + primitive-baseline

Framework Consideration: Only for proven edge cases with irreplaceable requirements

Future Architecture: Hybrid systems with simple retrieval feeding large contexts
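As a rough sketch of what "simple retrieval feeding large contexts" might look like, the example below ranks stored notes by keyword overlap with the query and packs the best matches into a word budget. The scoring rule, the function names, and the sample notes are all hypothetical. A production system would more likely use embeddings or a full-text index, but the control flow would be similar.

```python
from typing import List


def overlap_score(query: str, doc: str) -> int:
    """Number of distinct lowercase words shared by the query and the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def build_context(query: str, docs: List[str], budget_words: int) -> List[str]:
    """Select the best-matching documents that fit within a word budget."""
    ranked = sorted(docs, key=lambda d: overlap_score(query, d), reverse=True)
    selected: List[str] = []
    used = 0
    for doc in ranked:
        size = len(doc.split())
        # Skip documents with no overlap, and any that would exceed the budget.
        if overlap_score(query, doc) == 0 or used + size > budget_words:
            continue
        selected.append(doc)
        used += size
    return selected


notes = [
    "database migration failed on staging last night",
    "lunch menu for friday",
    "api gateway timeout traced to database connection pool",
]
context = build_context("why did the database migration fail", notes, budget_words=20)
prompt = "\n".join(context) + "\n\nQuestion: why did the database migration fail"
```

The retrieval step only has to be good enough to discard obviously irrelevant material. The large context window absorbs the rest.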

What to Watch

• Sub-penny pricing: <1¢/10K tokens triggers universal adoption

• Hybrid patterns: RAG evolution for context preprocessing

• Open alternatives: Community frameworks challenging commercial options

• Regulatory adaptation: Privacy laws adjusting to perfect recall

Take action: Audit your memory architecture today. Remove complexity that doesn't demonstrably improve outcomes.

REFERENCES

APPENDIX A: SYSTEMATIC INTEGRATION REQUIREMENTS

APPENDIX B: TYPICAL INFRASTRUCTURE COSTS

Table 1 is our own in-house price model, not a third-party report. We built it by:

Token pricing: Using public rates for million-token context models (Gemini 2.5 Flash & GPT-4.1 at $0.30 per 1M input tokens).

Framework infra: Estimating servers + embeddings + vector-DB + ops at roughly $0.50 per 100 K queries, grounded in real pricing:

Pinecone Managed: $0.02–$0.05/100 K on starter tiers; $0.40–$0.60/100 K on dedicated pods

Zilliz Cloud (Milvus): $0.25–$0.75/100 K per compute unit (≈100 K lookups/mo)

AWS Kendra + EC2: $0.001/query plus EC2 embed-host amortization ⇒ ~$0.50/100 K distinct queries

Self-hosted Qdrant: c5.large ($0.085/hr) → $0.02/100 K raw, rising to $0.15–$0.30 with storage & DevOps

VectorDBBench report: Shows general-purpose DBs with vector extensions run in the same price-performance envelope as specialist services

Simple math: Multiply per-query token cost by daily volume, add $0.50/100 K for infra, and compare against “prompt-only” costs.

Taken together, this yields a reliable, conservative baseline for comparing raw context expenses versus a full memory-framework stack.
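The "simple math" step can be written out as follows. The two constants come from this appendix. The query volume and per-query token counts in the example are placeholders chosen only to exercise the functions, not measurements, and the function names are hypothetical.

```python
TOKEN_PRICE_PER_MILLION = 0.30      # USD per 1M input tokens (from this appendix)
INFRA_COST_PER_100K_QUERIES = 0.50  # USD, framework infra estimate (from this appendix)


def prompt_only_daily_cost(queries_per_day: int, tokens_per_query: int) -> float:
    """Daily cost when every query sends its full context to the model."""
    return queries_per_day * tokens_per_query * TOKEN_PRICE_PER_MILLION / 1_000_000


def framework_daily_cost(queries_per_day: int, tokens_per_query: int) -> float:
    """Daily cost when a memory framework trims the prompt but adds infra spend."""
    token_cost = queries_per_day * tokens_per_query * TOKEN_PRICE_PER_MILLION / 1_000_000
    infra_cost = queries_per_day / 100_000 * INFRA_COST_PER_100K_QUERIES
    return token_cost + infra_cost


# Placeholder workload: 1,000 queries/day, 10,000 tokens of raw context per query,
# versus a hypothetical 2,000 tokens per query after framework retrieval.
context_cost = prompt_only_daily_cost(1_000, 10_000)
framework_cost = framework_daily_cost(1_000, 2_000)
print(f"prompt-only: ${context_cost:.3f}/day, framework: ${framework_cost:.3f}/day")
```

The outcome is highly sensitive to the two per-query token counts, so they should be replaced with measured values. This sketch covers marginal running costs only and leaves out the engineering and maintenance time discussed in the main text.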

APPENDIX C: CHAOS TESTING

Scenario Generation (memory_agent/benchmark/scenarios.py L2770-2840)

Synthesises a corpus of messy, real-world artefacts: emoji-laden chat lines, truncated meeting notes, system logs, stack-trace snippets, YAML/JSON fragments, etc.

Each ContextItem is annotated with metadata such as messiness_level, contains_typos, urgency flags, and modality (chat, log, config).
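A plausible shape for such an annotated item is sketched below. Only the metadata names messiness_level, contains_typos, urgency, and modality come from the description above. The field types, the 0.0 to 1.0 messiness scale, the is_urgent name, and the validation rules are assumptions, and the real class in scenarios.py may differ.

```python
from dataclasses import asdict, dataclass

ALLOWED_MODALITIES = ("chat", "log", "config")


@dataclass(frozen=True)
class ContextItem:
    """Hypothetical sketch of one noisy artefact plus its annotations."""

    text: str
    modality: str            # one of ALLOWED_MODALITIES
    messiness_level: float   # assumed scale: 0.0 (clean) to 1.0 (very noisy)
    contains_typos: bool
    is_urgent: bool          # assumed name for the urgency flag

    def __post_init__(self) -> None:
        if self.modality not in ALLOWED_MODALITIES:
            raise ValueError(f"unknown modality: {self.modality!r}")
        if not 0.0 <= self.messiness_level <= 1.0:
            raise ValueError("messiness_level must be between 0.0 and 1.0")


item = ContextItem(
    text="prod db is dwon again btw chk the logs",
    modality="chat",
    messiness_level=0.8,
    contains_typos=True,
    is_urgent=True,
)
record = asdict(item)  # plain dict, ready for JSON serialization
```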

Noise Characteristics

High entropy: typos, emojis, “btw”, random ASCII art, stack-trace lines.

Multi-modal: mixes chat, logs, and config snippets.

Incomplete: many items are intentionally truncated, forcing models to infer missing info.

Metadata-Driven Retrieval

Urgency and typo flags influence ranking.

Entity extraction (e.g., “API”, “Database”) provides sanity checks for retrieval quality.
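One way the flags could feed into ranking is sketched below. The source says only that urgency and typo flags "influence" ranking. The direction and size of the adjustments (a boost for urgent items, a penalty for typo-laden ones), the keyword-overlap base score, and all names here are assumptions for illustration.

```python
from typing import Dict, List

URGENCY_BOOST = 0.5   # hypothetical weight
TYPO_PENALTY = 0.25   # hypothetical weight


def score(query: str, item: Dict) -> float:
    """Keyword overlap, adjusted by the item's metadata flags."""
    overlap = len(set(query.lower().split()) & set(item["text"].lower().split()))
    value = float(overlap)
    if item["is_urgent"]:
        value += URGENCY_BOOST
    if item["contains_typos"]:
        value -= TYPO_PENALTY
    return value


def rank(query: str, items: List[Dict]) -> List[Dict]:
    return sorted(items, key=lambda it: score(query, it), reverse=True)


def mentions_entity(item: Dict, entity: str) -> bool:
    """Sanity check: does the retrieved text mention the expected entity?"""
    return entity.lower() in item["text"].lower()


items = [
    {"text": "API latency spike on checkout", "is_urgent": False, "contains_typos": False},
    {"text": "URGENT api latency spike in prod", "is_urgent": True, "contains_typos": False},
    {"text": "databse latncy looks fine", "is_urgent": False, "contains_typos": True},
]
ranked = rank("api latency spike", items)
top_is_sane = mentions_entity(ranked[0], "API")
```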

Grading Strategy (test_research_gaps.py L340-360)

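Uses difflib.SequenceMatcher; a similarity ratio ≥ 0.5 counts as correct, so answers that are close to the reference text are accepted even when the wording differs. Note that this ratio measures character-level overlap rather than meaning.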
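The grading rule can be sketched with the standard library as follows. The 0.5 threshold and the use of difflib.SequenceMatcher come from the description above. The function name and the lowercasing and stripping of inputs are assumptions, and the actual code in test_research_gaps.py may differ.

```python
from difflib import SequenceMatcher

SIMILARITY_THRESHOLD = 0.5  # from the grading description above


def is_correct(predicted: str, expected: str, threshold: float = SIMILARITY_THRESHOLD) -> bool:
    """Accept an answer when its character-level similarity to the reference meets the threshold."""
    # Assumption: compare case-insensitively and ignore surrounding whitespace.
    a = predicted.lower().strip()
    b = expected.lower().strip()
    return SequenceMatcher(None, a, b).ratio() >= threshold


# Different wording, same content: accepted.
reworded = is_correct("The API key expired on Friday", "API key expired Friday")

# Unrelated answer: rejected.
unrelated = is_correct("42", "the deployment failed because of a missing env var")

# Caveat: similar wording with the opposite meaning is also accepted.
opposite = is_correct("the deploy succeeded", "the deploy failed")
```

The last case shows the trade-off of tolerant string similarity: it forgives rephrasing, but it can also pass an answer that is lexically close and factually wrong. Borderline scores are worth spot-checking by hand.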

Reproducibility & Auditability

Each chaos scenario is serialized to JSON in every benchmark run (research_gaps_results/session_*/scenarios/production_chaos_test.json), enabling reviewers to inspect or replay the exact noisy inputs.

TL;DR: The suite stress-tests memory systems with realistic, high-noise, multi-modal data and evaluates them using tolerant, similarity-based scoring that mirrors messy production environments.
