Lights, Camera, Algorithm

Hands‑On with 2025’s AI Video Tools (and Why 8 Seconds Still Hurts)

The landscape of AI video generation has evolved dramatically in 2025, offering unprecedented creative possibilities while simultaneously presenting unique challenges that creators must navigate. From stunning visual effects to uncanny valley moments, AI video tools have become both powerful allies and sources of creative frustration.

In this article, I’m going to explore the leading platforms in this space, what differentiates them from their competitors and where I think they outperform or fall short of expectations.

But before I get too far I am going to integrate a new section into my articles that I’m going to affectionately call “Burying the Lead”. The idea here is to provide my quick analysis on what I’ve researched. If you are intrigued and want to read more then I hope you do, but at the very least you should be able to glean my insights by reading this one section alone. And without further ado…

Burying the Lead

If you read nothing else, read this!

As of August 2025, everyone is chasing Google; there’s just no arguing against it.

I don’t normally make such emphatic statements, but I don’t know how anyone could argue that there is a better video generation model than Google’s Veo 3. Prior to writing this, I would have entertained the idea that Sora was better at image generation, but after a bit of experimentation in Google’s Vertex AI Media Studio, I’m starting to think that Imagen 3 is the best image generator but since my focus for this was aimed towards video generation I’ll stop short of making that declaration. Though the combination of Imagen 3 and Veo 3 is shockingly good.

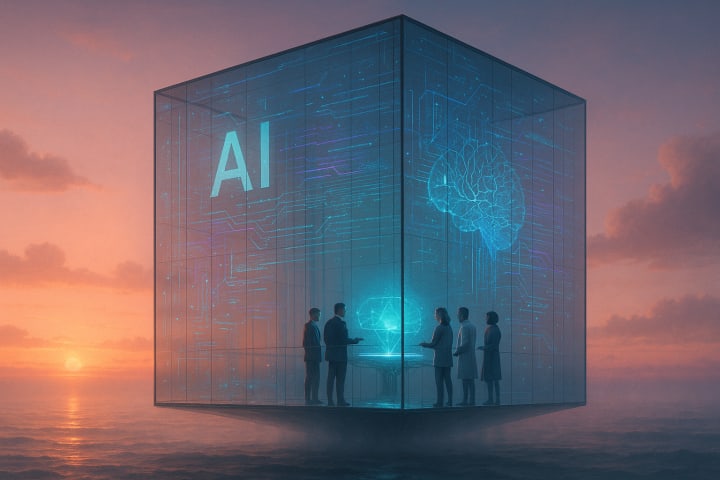

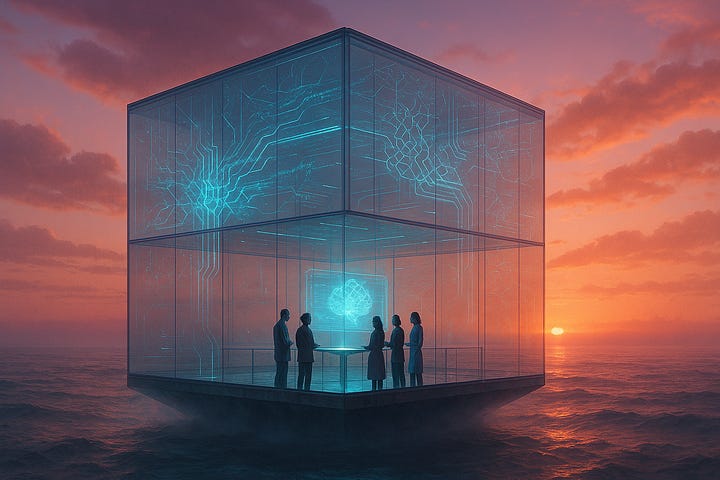

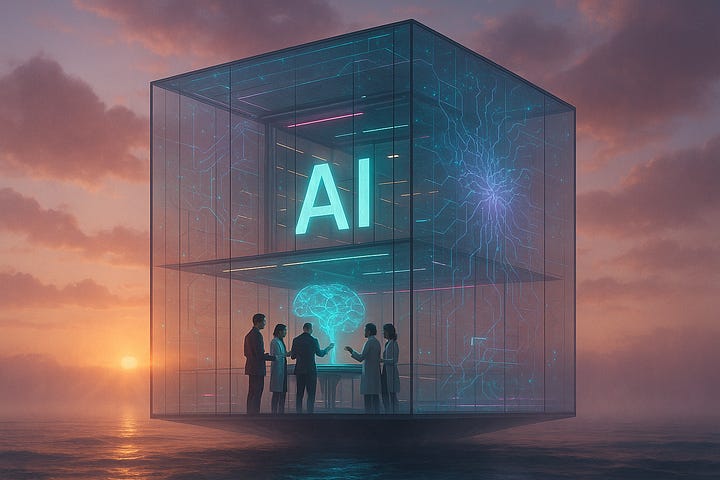

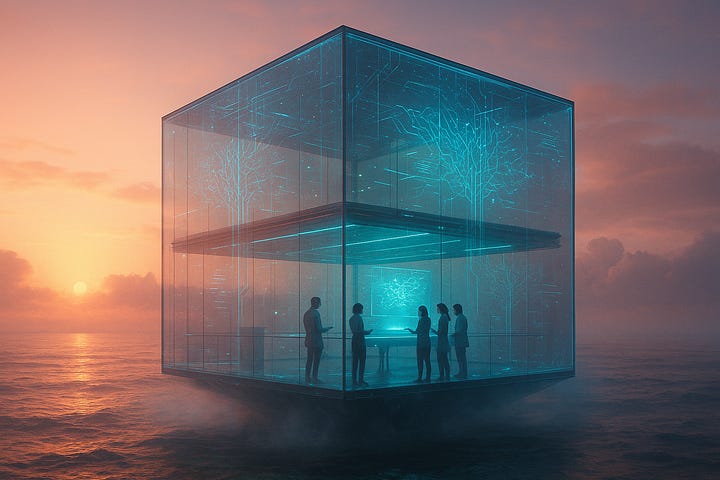

I’ll get into how I came to this conclusion, the exact prompts that were used and the results that were produced later in the article but, to provide just a little bit of context for those that aren’t going to read the entire article, my image prompt was looking for a futuristic AI research center inside a massive floating glass cube. The video prompt was looking for movement of a massive glass cube with diverse AI researchers inside.

As promised here are my results:

Google Veo 3

Winner, winner Veo 3 eats every other video generation model's dinner.

In every iteration there are people (not random blobs or other odd interpretations), and they legitimately appear to be doing something technical. There are holograms and aligned movement from the subjects. For 8 seconds, they really nailed it.

Hailuo

Not bad, but it only wants to give me 5 second clips, which is a non-starter for me. I'm already irritated by the 8-10 second limitations that a lot of the mainstream models have.

Kling AI

Kling AI generated a glowing, jail-like structure that included swirling neon lights on the bottom, not sure what that says about their AI researchers, since that was included in the prompt, but now I'm curious.

Midjourney

I want to like Midjourney, it produces some really interesting results, but they are shockingly out of context from my experience.

Apparently Midjourney thinks that AI researchers dance or do synchronized movements throughout their day. Cool perspective, just not sure it aligns with reality.

Runway

At first I didn’t see the image generation that Runway uses so I used an image from Sora and I think that Runway did a really good job making it come to life.

But, once I realized I could generate images I gave Runway the same test as the rest. For the record it gave me a 5 second video, which immediately frustrates me but, unlike Hailuo, Runway made the most of their 5 seconds. While not necessarily ground breaking in terms of interpreting the prompt, it felt like it maintained the theme.

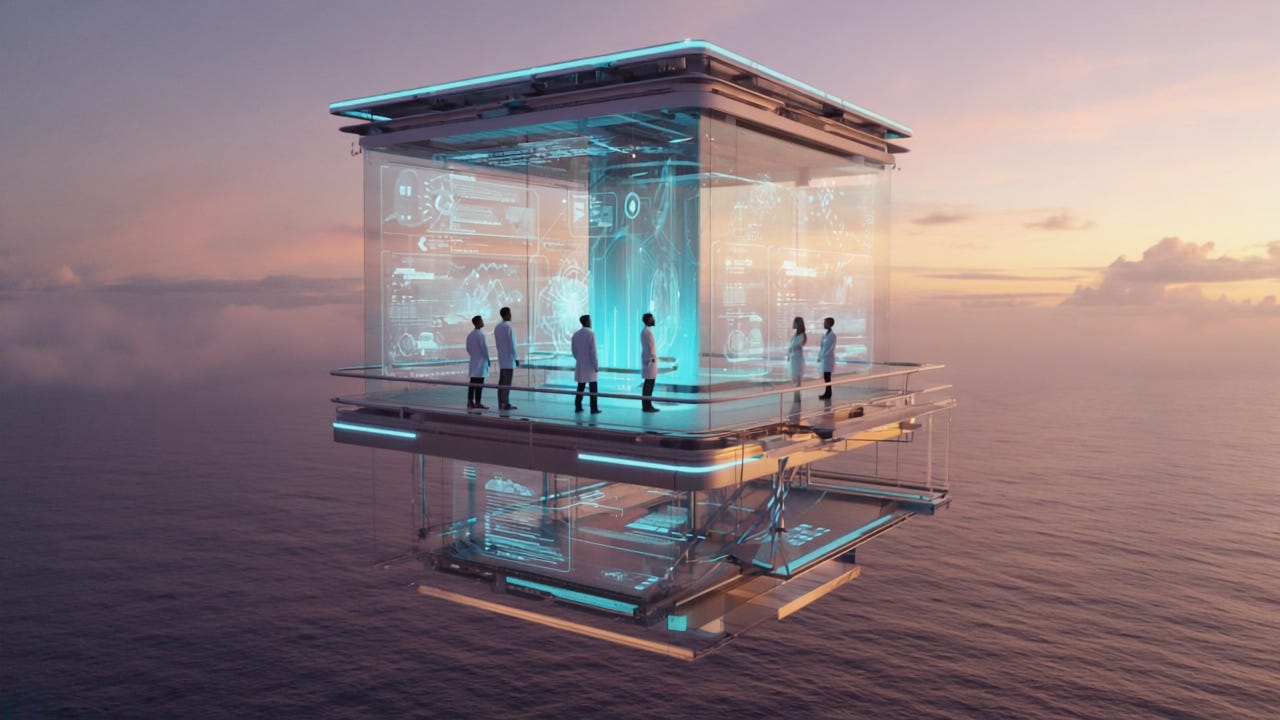

Sora

Sora gave me its typical 4 outputs and, in my opinion two of them did the whole defying physics stuff that their videos tend to do, with a spinning cube that morphs into something else while someone is standing there oblivious to the fact that they are apparently inside of a tornado. However, two of them were relevant and good. Video length was the longest and this was my initial front runner, until Veo 3 came and showed everyone how to actually do the thing.

Conclusion (of my summary)

…because the real conclusion will be at the end of the article, where conclusions go.

For those with the attention span of a gnat this concludes our journey together. For everyone else, before I dive into some of the details of my video prompt analysis I am going to go on a bit of a lip syncing tangent.

Lip Syncing

Making videos is cool. Being able to generate the content is groundbreaking. While I won’t get into my extensive background in television and film (okay, I once knew a guy who was friends with someone who was on a TV show, so I speak from a position of authority), the time that can be saved from creating stunning visual effects, actual video content, and supplemental B-roll footage is astounding. However, unless you are learning a new language, no one wants to read subtitles, and unless it’s an advertisement or a documentary no one wants to hear a voiceover. So where does that leave us? It leaves us with the missing ingredient, audio.

Google Veo 3 (you may notice I have a strong opinion about this model) can produce videos with audio, and it’s remarkable. I recently went on a journey to try and create a music video. I started by creating lyrics, and then generating video, and my hope was that I could then use lip syncing to make the videos appear to be singing, or in my video’s case, rapping, the lyrics.

It was a disaster.

However, I did learn how to navigate the journey, albeit with fun, but still questionable, results. While I came up with a few approaches to getting lip syncing to work, my absolute best was to first generate the scene with Veo 3 and include the lyrics.

My gripe with this is that I would love to be able to upload the audio of the lyrics and have that audio be the source. That would be a “game over” kind of feature. But it’s not an option, yet. However, with Veo 3 you can create a clip and include the lyrics that you want and by and large you will get a video with those exact lyrics. Cadence, tone, accent, etc.; are difficult to control precisely but it’s a great starting point, because lip syncing is all about mapping phonemes to visemes. If you start with a video that already has this mapped, albeit at a different pace or beat, then running it through lip syncing technology with the actual target audio produces much better results. But let me back up a second because I just dropped phonemes and visemes like they are words that most people know.

Phonemes

Phonemes are the smallest units of sound in a language. For example, the word “cat” is made up of three phonemes: /k/, /æ/, /t/.

In lip syncing, speech audio is broken down into its constituent phonemes. Each phoneme corresponds to a basic sound that the mouth makes during speech.

Visemes

Visemes are the visual counterparts to phonemes. They are the distinct shapes the mouth, lips, teeth, and sometimes the tongue make when producing specific groups of phonemes.

What is interesting is that the human mouth only forms a limited set of visibly distinct shapes while speaking. Therefore, multiple phonemes often map to the same viseme (e.g., sounds like /b/, and /p/ may use the same basic mouth shape).

In AI lip syncing, the system maps audio phonemes to the corresponding visemes, then animates the mouth to produce those shapes at the right time.

Lip Sync Technology

The Science of Lip Sync

AI-driven lip syncing technology works through a multi-stage process:

Analyzing audio input: Breaking down speech into phonemes (smallest units of sound)

Mapping phonemes to visemes: Matching each phoneme to corresponding mouth shape/facial expression

Rendering movements: Applying mapped movements to video frames or digital avatars

Factors Affecting Lip Sync Quality

Precision of phoneme-to-viseme mapping: More advanced systems have larger libraries of mouth shapes

Frame rate and smoothness: Higher frame rates create more fluid movements

Co-articulation handling: How surrounding sounds influence mouth shapes

Emotional expression integration: Incorporating facial expressions matching speech tone

Okay, so what works?

Here are my quick thoughts on lip syncing, based off of hours of trying to get things to work.

First, it kind of depends on what you are trying to make. I leveraged an AI avatar in my A2A and MCP demonstration that I featured in Payloads, Promises & Protocols: The MCP/A2A Tightrope. The lip syncing was easy, spot-on and delivered exactly what I was looking for. I could list a handful of tools that offer similar features, Tavus.io, Synthesia, Descript (this is what I used), and HeyGen are a few that I have worked with, and they all produce consistent results when it comes to AI generated avatar lip syncing. It works. It’s not perfect, but it’s close enough. Many of those tools also offer personal avatars. I haven’t spent quite as much time on this specific topic, probably because I’m selfish and never wanted a twin so why spend the time creating one, but in the limited experience I have had, the results were good, not perfect, but really good. I think that has much more to do with the sample source than anything else. Back to the point, if you want to generate a talking head and lip sync your own scripted audio this is relatively easy to do with any of those tools.

But what if you want to use a video source that wasn’t generated by Veo 3 with a similar set of viseme’s already embedded? This is where I am going to share my highly scientific approach.

I call it RRITASIA.

Phonetically, that is “Ar-Skwair-ih-TAY-zhuh”.

That has a catchy ring to it that I’m sure will take off in the generative content community. RRITASIA stands for “Rinse, Repeat, Iterate, Try-Another-Software, Iterate Again” (…or, alternately Rage, Retry, Iterate, Tinker Aimlessly, Scream Internally, Attempt again). Okay, that may be a little tongue and cheek, but it is legitimately what I’ve had the most success with.

My specific workflow that has seen the most success is:

Add video track to CapCut

Add audio track to CapCut

Trim sample sizes to the same length

Export audio track only

Select video track

Select “Lip sync”

Select “Add audio” / “Upload Audio”

Upload the exported audio from step 4

Select “Save”

Select “Generate”

Sometimes that will generate, sometimes it won’t. It really depends on the quality of the source video, and the ease for the software to identify the subject. Generally speaking the results are better when there is a single subject, and they are closer to the screen than not. But since it’s seldom a great result I then re-run the lip sync, but this time I select “Enter text” and I paste in the text of what I want the person to say during that clip.

I have found that clips that are 30 seconds or less produce better results, but that also means a lot more editing in the end. Unless my results are amazing, which they seldom are, I will then export the audio and video and use another software to try and perform the lip sync. I have tried a lot of tools, but my current favorite is Kaiber.ai.

Once again, not perfect, but if you add the video and target audio and select the lip sync model I have found this to be one of the better solutions. Once I have that video, I either run with what it has or sometimes will run it back through my CapCut iteration. At this point I’m not entirely certain if the extra CapCut run through does anything, but I believe I had one remarkable output one time, and I’ve stuck with it ever since.

Here are some samples:

AI Avatar with Scripted Text

AI Generated Rap Video with Lip Syncing Lyrics

In those two examples the AI avatar was as simple as writing the script and pasting it in. There are times where certain words don’t get handled correctly. For example, you may write 2025 and the text to audio translation will turn that into “two thousand, twenty five” when you wanted it to read “twenty twenty-five” but those are small bumps in the road that are really easy to fix with text to speech lip syncing.

The music video started as a response to a challenge and turned into a labor of love because the entire video which is just over two and a half minutes probably took closer to twenty hours to produce. The end result was much more rewarding, but it brings me to my main point about lip syncing and AI video generation.

If you want to generate a product advertisement, a YouTube clip, a product demonstration or even a music video then all of these things are doable

…because the nature of final edits likely include transitions, effects, thematic yet not necessarily unified scenes, and with…

A creative mind

Access to an AI video generation model

A good video editor

…those are easily achievable.

However, making a movie that included the same characters in different clothing, scenes, and dialogue simply isn’t realistic yet, at least not in the general public and early release versions of tools I’ve used.

Lip syncing is critical in this space given the manner with which content is created, and it’s come a long way, but it has a ways to go before it will be what the average consumer would need to make quality long form content.

With lip sync covered, let’s get back to how I formed my strong opinions about current AI video generation models.

Behind the Prompt

Aside from experimenting for hours on end over the past few weeks with different models and platforms and getting a feel for how the systems work, what they do well, what they don’t, I wanted to create an apples to apples comparison so I decided to use both an image prompt and a video prompt. My thought was to use the image generation capabilities of these tools to generate an image and then to use that output with the video prompt to generate a video so that I could see how they all worked. All of my samples are included below, but Imagen and Veo 3 simply crushed the competition.

Image Prompt

“A futuristic AI research center housed inside a massive glass cube floating above a misty ocean at sunrise. The structure glows with soft cyan and violet lights, and features visible floating data streams and neural network patterns etched into its transparent walls. On the main observation deck inside the cube, four diverse researchers (men and women) are gathered around a levitating holographic interface, reviewing a real-time AI model visualization. The mood is quiet awe and innovation. The sky is gradient pink-orange with low hanging clouds and soft golden light reflecting off the water. Cinematic ultra-wide shot, shot on a 35mm lens. High contrast. Style: hyperrealistic with subtle painterly textures. Think Denis Villeneuve meets Moebius. Include lens flares, volumetric lighting, and high detail reflections.”Video Prompt

“Create a cinematic video sequence (10–20 seconds) starting with a wide aerial shot of a massive glass cube floating above a misty ocean at sunrise. The camera slowly descends toward the cube, revealing glowing cyan and violet light streams running across its transparent surfaces. As the shot transitions inside the cube, the scene reveals four diverse AI researchers standing around a levitating holographic interface. They interact with floating model visualizations and gesture toward shifting neural network patterns. Mist swirls at the base of the cube while soft ambient music builds in the background. The sunrise sky evolves from pink-orange to golden yellow, reflecting light dynamically across the water. The mood is quiet awe and inspiration. Include smooth camera movement, volumetric lighting, lens flares, gentle motion of light streams, and subtle facial expressions. Cinematic style inspired by Denis Villeneuve and Moebius with a balance of realism and artistic stylization.”Results

“Is the quantum entanglement stable?”

After running the image prompts and using those to generate the video’s it was clear who the winner was. Because I wanted Veo 3 to be able to bask in the glory of its victory I decided to reward it with the opportunity to flex on all of the competitors by adding conversation to the video generation prompt.

In addition to current video prompt:

"...and introduce conversational chatter with references to technology, artificial intelligence and similar topics"Samples (images and videos) in order of performance:

Google Veo 3

Images

Videos

Sora

Images

Videos

Runway

Images

Videos

When I first tried to generate an image using Runway I didn’t see how to do it, so I used an image from Sora as the reference for generating the video.

I realized later what I was doing wrong so I generate an image from Runway and used that for the official video. It did a good job with the Sora image.

Hailuo

Images

Videos

Midjourney

Images

Midjourney is a little different in the way that you generate video. When I entered the prompt for generating a video it first generated an image (though I had already done that with my image prompt). The first 4 images below were generated from the video prompt, not the image prompt, but they looked better so I included them since that was what was actually used for the videos.

Videos

Kling AI

Images

Privacy, policies and governance is necessary and needed (I’ll touch on that a bit in my parting thoughts), but it can also be annoying. Kling AI refused to generate an image for me. I did remove the specific name references and tried multiple times to remove parts of the prompt, but I got this every time.

Videos

You may be wondering how I generated a video with Kling AI if I didn’t have an image to source from. The video prompt didn’t elicit any warnings from Kling AI so I leveraged their text-to-video option, which may be why the results were so lackluster.

However, to be fair I did go back and experimented a bit by using the video prompt to generate an image, which didn’t trigger the policy violations that the image prompt did. The output was a lot better than the jail cell above. I also tried generating a video using one of the images from Veo 3 and the output was really quite good. The video would have probably ranked Kling AI as #2 in my results, but since it didn’t fall in line with the method I didn’t include it.

Another interesting thing about Kling AI is the ability to edit and modify the video. They have a “multi-elements” area that lets you add some interesting touches to things, however I felt like that was outside the scope of what I was trying to compare, so I didn’t dive into it much, but I probably will in the near future.

Final Thoughts

Let’s wrap with what I’ve learned, what I’ve felt, and what I think creators should take away from this moment in AI video generation:

The Takeaways

You can do incredible things with AI video generation right now.

Time limits are the single most crippling limitation.

Lip syncing is better, but still flawed, frustrating, and rarely plug-and-play.

Google Veo 3 is far ahead of the pack if audio matters (which it should).

Sora is strong on visuals and prompt interpretation, but without audio, it’s like a car with no wheels.

Midjourney is weird. Sorry. I asked for a cool graphic comparing video generation between the leading platforms. It gave me a duck in WW1 attire; I honestly have no follow-up to that.

HeyGen has some truly unique features (URL to video? Yes, please), even if some feel gimmicky.

Kling AI has some potential. The pre-defined “effects” are fun, but the lack of creative control makes it easily accessible but very limited in terms of customization. I don’t know how many images of aliens taking my dog I can make before I’ll get bored.

Be careful chasing the next best product because a lot of them are simply using the same model that you are trying to get away from.

If you don’t know what you want to build, this landscape will eat your time and your will to live. Have a direction before you dive in.

We’re back to prompt engineering, like it or not. Steal good prompts, remix them, refine them, repeat.

Be specific about what you want and be prepared to iterate through the generation cycle to get to where you want to be.

The Reflections

AI video generation is both amazing and frustrating.

When processing power and model architecture break past current limits, the space will explode with potential.

Privacy protections are necessary, but they currently block one of the most common things people want to do: create AI videos using real images of themselves or loved ones. Tools may let you upload a photo as a reference, but once the video is generated, faces get altered or replaced.

Lip syncing is fun, when it works. It usually doesn’t.

Veo 3 is still the best.

Any video model that doesn’t support audio is on borrowed time.