Behavioral Anti-Pattern Detection: A Comprehensive Technical Synthesis

Discover how AI-driven video analytics uncover, measure, and transform hidden workplace anti-patterns — translating rigorous research into actionable ideas for enterprise productivity and success

Executive Summary

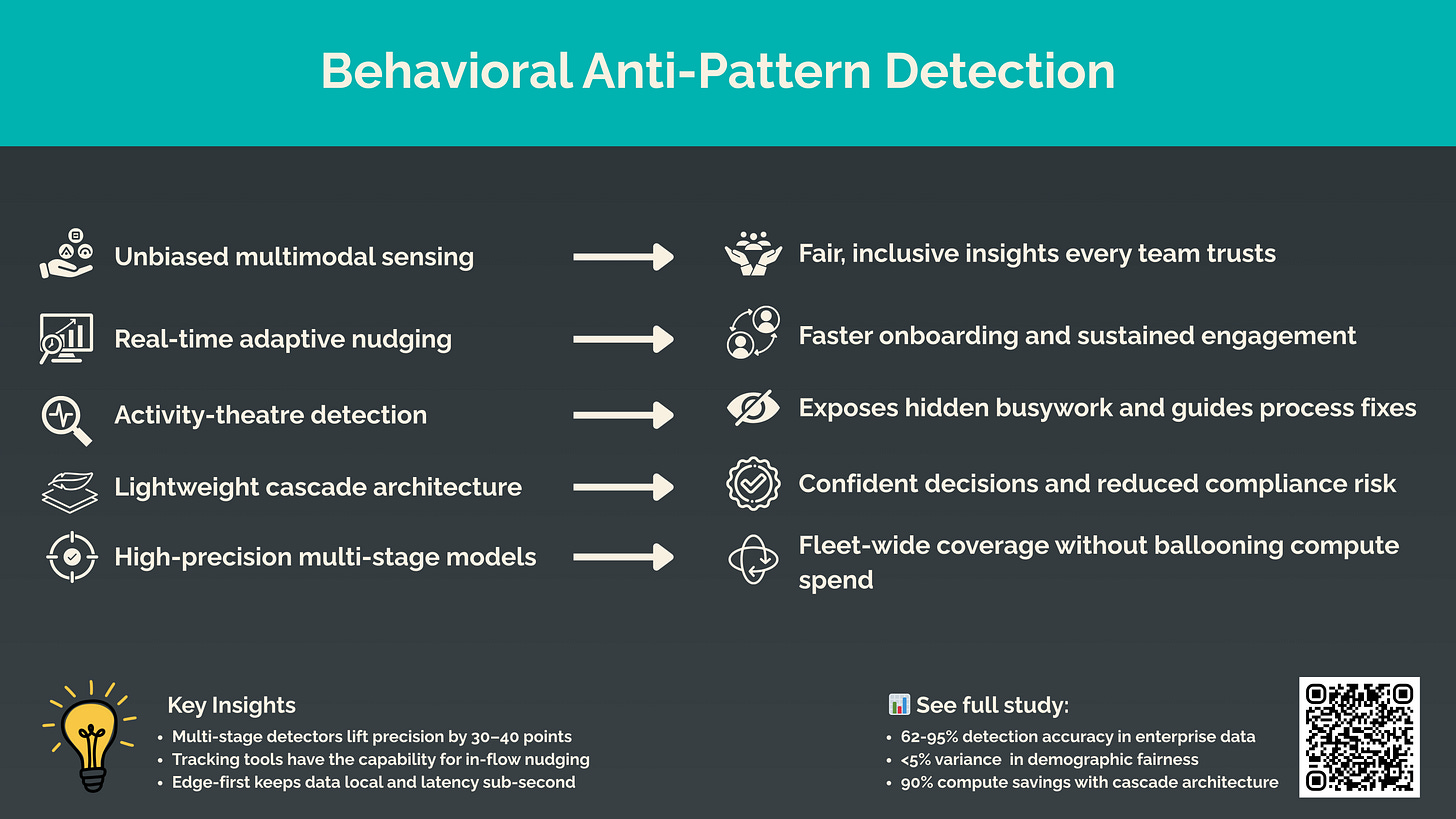

Hidden behavioral “anti-patterns”, such as passive viewing in online classes, dominance overflow in clinical consultations, and activity theater in the office, silently drain learning, health outcomes, and productivity. Recent video-analytics pipelines now correlate how people behave with what they achieve, in real time and at enterprise scale.

Validated results:

Education – Passive vs. active engagement detected with an F1 score of up to 0.95 across 10,000 sessions, enabling instant prompts that lift quiz scores.

Healthcare – Multimodal models flag clinician over-talk with <5% demographic variance across 145 consultations, boosting adherence.

Enterprise – WorkSmart monitoring shows that AI-first teams (using AI ≥95% of the time) deliver roughly double the completed issues of moderate AI users.

The market tailwind is substantial: video analytics is projected to reach $50.8 billion by 2032, while behavioral analytics grows at a 27.5% CAGR (Fortune Business Insights, 2025; Future Market Insights, 2024).

With privacy-first landmark processing achieving ≈95% of full-video accuracy, the technology is ready for focused pilots that convert invisible inefficiencies into measurable ROI.

1. Introduction: Quantifying Hidden Inefficiencies

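Behavioral anti-patterns are recurring workplace behaviors that appear productive but reduce actual output. The term comes from software engineering, where Brown et al. (1998) catalogued anti-patterns: common responses to recurring problems that look reasonable but backfire, much like technical debt, in which quick fixes create long-term problems.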

The Breakthrough in Behavioral Understanding: In 2020, live video analytics stumbled in real-world settings, where small-object detection, motion blur, and occlusion triggered frequent misclassifications and detection failures despite top benchmark scores (Zhu et al., 2020). Today, the fundamental shift is the ability to correlate visual behaviors with actual outcomes, which was impossible when systems merely counted heads or tracked movement. Modern systems model behavior over time, link it to outcomes, and operate at the speed and scale that enterprise deployment demands.

Four measurable characteristics define anti-patterns (see Appendix A for a detailed breakdown). The productivity gap drives urgency: while 96% of executives expect AI to boost productivity, 77% of employees report that AI tools make them less efficient (Doctorow, 2024; Upwork Research Institute, 2024). WorkSmart and similar platforms detect these disconnects by analyzing behavioral data against performance outcomes (Kharytonenka et al., 2025).
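At its simplest, "correlating behaviors with outcomes" means measuring how strongly a behavioral signal moves with a result. The sketch below uses Pearson's r, one common choice among several; the function name and the toy series are hypothetical illustrations, not data from any cited study. Correlation alone does not show that a behavior causes an outcome, so real deployments would need much more data and controls.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between a behavioral signal and an outcome measure."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Illustrative toy data only: weekly share of time spent in an anti-pattern
# versus an outcome score for five hypothetical people.
anti_pattern_share = [0.05, 0.10, 0.20, 0.30, 0.40]
outcome_score = [92.0, 88.0, 80.0, 71.0, 65.0]
r = pearson_r(anti_pattern_share, outcome_score)
print(f"r = {r:.2f}")
```

A strongly negative r here would only flag the behavior as worth investigating; the interventions discussed later are what test whether changing it actually moves the outcome.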

2. Domain-Specific Detection Frameworks

Educational Technology

What Changed: Five years ago, online proctoring could flag suspicious movement but couldn't distinguish confusion from cheating, or engagement from mere attendance. Today's detection capabilities address education's fundamental challenge, proving that learning actually happened, and are transforming how we measure and ensure learning outcomes (Kharytonenka et al., 2025; Aly, 2025; Egglestone et al., 2025).

External validation, however, reports only 61-62% accuracy for webcam-only approaches (Mortezapour Shiri et al., 2024). Privacy concerns are addressed through landmark-only processing, which tracks only the positions of facial features rather than full video. This approach maintains 95% of full-video accuracy while reducing the risk of individual identification (Wang et al., 2024).

The stakes are concrete: laptop multitasking during lectures lowers post-lecture test scores by 11% on average, and even students seated near multitaskers perform measurably worse (Sana et al., 2013). Under controlled conditions, advanced facial-analysis models detect engagement with 91-97% accuracy (Aly, 2025).
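The passive-viewing prompts described in this section can be pictured as a small rule running over short analysis windows. The sketch below is a hypothetical illustration, not the method of any cited study: the `Window` features, the "looking but not interacting" rule, and the three-window streak are all assumptions, and a production system would replace `is_passive` with a trained classifier.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Features for one short analysis window (hypothetical feature set)."""
    gaze_on_screen: float  # fraction of the window with gaze on the lesson, 0..1
    interactions: int      # clicks, keystrokes, or answers in the window


def is_passive(w: Window, min_gaze: float = 0.6, min_interactions: int = 1) -> bool:
    # Assumed rule: looking at the lesson without interacting counts as passive viewing.
    return w.gaze_on_screen >= min_gaze and w.interactions < min_interactions


def prompt_points(windows: list[Window], streak_needed: int = 3) -> list[int]:
    """Indices of windows at which an engagement prompt would fire."""
    fired, streak = [], 0
    for i, w in enumerate(windows):
        streak = streak + 1 if is_passive(w) else 0
        if streak == streak_needed:
            fired.append(i)
            streak = 0  # reset so the learner is not prompted on every window
    return fired


# Three passive windows, one active window, then three more passive windows.
session = [Window(0.9, 0)] * 3 + [Window(0.9, 2)] + [Window(0.8, 0)] * 3
print(prompt_points(session))
```

Requiring a streak rather than a single passive window is a design choice meant to avoid prompting learners during brief, normal pauses.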

Healthcare Communication

Extensive research confirms that effective clinician-patient communication is crucial for treatment adherence. A comprehensive meta-analysis found that patients whose physicians communicate poorly have a 19% higher risk of not adhering to treatment regimens, and physician communication training can significantly improve patient adherence (Haskard Zolnierek & DiMatteo, 2009).

Building on this evidence, the Dominance Overflow pattern (clinicians speaking more than 70% of the time, frequent interruptions of more than 3 per minute, and patient silence above 80%) has been detected objectively with multimodal AI while maintaining demographic fairness (<5% variance) across 145 consultations (Bedmutha et al., 2024). These results show how modern detection tools can support both clinical outcomes and equity in care.
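The Dominance Overflow thresholds above translate directly into a rule over diarized speech. The following is a minimal sketch under stated assumptions: speaker turns come from an upstream diarization step, the interruption count comes from a separate detector, and all three conditions must hold at once. `Segment` and `dominance_overflow` are hypothetical names, not part of the cited multimodal system.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One diarized speech turn (speaker labels are assumed upstream output)."""
    speaker: str   # "clinician" or "patient"
    start: float   # seconds from the start of the consultation
    end: float


def dominance_overflow(segments, duration_s, interruptions,
                       talk_thr=0.70, interrupt_thr=3.0, silence_thr=0.80):
    """Apply the Dominance Overflow thresholds quoted in the text.

    Assumption: all three conditions must hold simultaneously.
    """
    clinician_s = sum(s.end - s.start for s in segments if s.speaker == "clinician")
    patient_s = sum(s.end - s.start for s in segments if s.speaker == "patient")
    metrics = {
        "clinician_talk_share": clinician_s / duration_s,
        "interruptions_per_min": interruptions / (duration_s / 60.0),
        "patient_silence": 1.0 - patient_s / duration_s,
    }
    flagged = (metrics["clinician_talk_share"] > talk_thr
               and metrics["interruptions_per_min"] > interrupt_thr
               and metrics["patient_silence"] > silence_thr)
    return flagged, metrics


# A hypothetical 10-minute consultation: the clinician talks for 7.5 minutes.
consult = [Segment("clinician", 0, 450), Segment("patient", 450, 510)]
flagged, metrics = dominance_overflow(consult, duration_s=600, interruptions=35)
print(flagged, metrics)
```

Returning the metrics alongside the flag lets a dashboard show clinicians which of the three conditions drove an alert, rather than a bare yes/no.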

Enterprise Productivity

One such tool is WorkSmart, an enterprise-grade, on-premises video-analytics platform. Monitoring data from Trilogy's departments shows, for example, the following about engineering (Egglestone et al., 2025):

Usage already skews high. Engineering operates at a 79% median AI share, the highest of any department.

Output scales with adoption. Moving from the “AI-enabled” band (≈75% AI) to “AI-first” (≥95%) adds roughly 3 issues per developer per sprint, a ~50% lift at the individual level.

Not all departments are equal. Across the wider company, WorkSmart already flags low-AI-usage anti-patterns in 27% of more than 600 employees, and specifically notes lower AI usage in the finance and marketing departments. This guides targeted retraining instead of blanket policy, or even a reassessment of AI guidelines where relevant.

Bottom line: the live telemetry supports the broader narrative. Anti-pattern detection is proving an invaluable way to keep the company on the right track, whether it prioritises AI adoption among engineers or AI conservatism among financiers.
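The band and lift figures above reduce to simple arithmetic: if +3 issues is a ~50% lift, the implied baseline is about 6 completed issues per developer per sprint (3 / 6 = 0.5). The sketch below shows one way telemetry could be grouped into usage bands and summarized per department. The function names are hypothetical; the ≥95% "AI-first" band and the under-50% low-usage group follow the text, while the 75% and 50% edges of the middle bands are assumptions.

```python
from statistics import median


def ai_band(ai_share: float) -> str:
    """Map a person's AI-usage share (0..1) to a usage band.

    ">=95% AI-first" and "<50% low usage" follow the text; the 75% and
    50% edges of the middle bands are assumptions.
    """
    if ai_share >= 0.95:
        return "AI-first"
    if ai_share >= 0.75:
        return "AI-enabled"
    if ai_share >= 0.50:
        return "AI-moderate"
    return "low-AI"


def department_summary(shares: list[float]) -> dict:
    """Median AI share and the fraction of people in the low-AI band."""
    low = sum(1 for s in shares if ai_band(s) == "low-AI")
    return {"median_ai_share": median(shares), "low_ai_fraction": low / len(shares)}


def relative_lift(new: float, baseline: float) -> float:
    """Relative change from baseline, e.g. 0.5 for a 50% lift."""
    return (new - baseline) / baseline


print(department_summary([0.30, 0.80, 0.97, 0.40]))
print(relative_lift(9, 6))  # +3 issues on a baseline of 6
```

Keeping the band edges in one function makes it easy to audit or change them if a department's guidelines deliberately favour lower AI usage.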

3. Technical Architecture

Temporal Convolutional Networks (TCNs)

TCNs process time-series behavioral data with stacked one-dimensional causal convolutions over time; hybrid variants combine these convolutional layers with LSTM units. Performance on the PAMAP2 dataset:

10-second windows: 90.1% (±0.66) accuracy

20-second windows: 96.35% (±0.38) accuracy (Gholamrezaii & AlModarresi, 2021)

These networks detect patterns like gradual disengagement during lectures or productivity decline across workdays.
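The core TCN building block is a causal, dilated 1-D convolution: each output depends only on the current and earlier samples, and doubling the dilation per layer widens the time span a layer can see. The pure-Python sketch below illustrates that mechanism plus the fixed-length windowing behind the 10 s and 20 s results. It is not the cited model: kernels here are fixed rather than learned, the LSTM part of hybrid variants is omitted, and the 100 Hz sample rate is an assumption.

```python
def sliding_windows(samples, window_s, rate_hz, step_s=None):
    """Split a sensor stream into fixed-length windows (e.g. 10 s or 20 s).

    step_s defaults to window_s, giving non-overlapping windows.
    """
    size = int(window_s * rate_hz)
    step = int((step_s if step_s is not None else window_s) * rate_hz)
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def causal_dilated_conv(x, kernel, dilation):
    """y[t] = sum_j kernel[j] * x[t - j*dilation]; indices before 0 count as zero.

    Only current and past samples are used, so the layer is causal.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:
                acc += w * x[idx]
        y.append(acc)
    return y


def tcn_stack(x, kernel, dilations=(1, 2, 4)):
    """Residual stack of causal dilated convolutions with ReLU; dilation doubles per layer."""
    h = list(x)
    for d in dilations:
        out = [max(0.0, v) for v in causal_dilated_conv(h, kernel, d)]
        h = [a + b for a, b in zip(h, out)]  # residual connection; length is preserved
    return h


def receptive_field(kernel_size, dilations):
    """Number of samples (current plus past) that can influence one output."""
    return 1 + (kernel_size - 1) * sum(dilations)


# At an assumed 100 Hz, a 10 s window holds 1,000 samples.
stream = [float(i % 7) for i in range(2500)]
windows = sliding_windows(stream, window_s=10, rate_hz=100)
features = tcn_stack(windows[0], kernel=[0.5, 0.5])
print(len(windows), len(features), receptive_field(2, (1, 2, 4)))
```

Causality is what makes the design suitable for live use: a detector can emit a disengagement score at time t without waiting for future frames.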

Behavior Capture Transformers

Transformer models handle multiple data streams simultaneously: video, audio, and system logs. LocATe outperforms previous methods on the BABEL-TAL and PKU-MMD benchmarks (Sun et al., 2024). Multi-headed attention can process a student's screen activity, webcam feed, and mouse movements together to estimate engagement level.
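Attention is what lets one model weigh several modalities at once: a query is scored against each modality's embedding, the scores are softmax-normalized, and the output is the weighted mix. The sketch below shows a single head of scaled dot-product attention over three hypothetical modality tokens. It is not LocATe or any cited architecture; real models learn the embeddings and separate query, key, and value projections, and run several heads in parallel.

```python
from math import exp, sqrt


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention: softmax(q.k / sqrt(d)) weights over values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / sqrt(d) for key in keys]
    weights = softmax(scores)
    fused = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return fused, weights


# Hypothetical per-modality embeddings; identity projections are used here
# only to keep the example small.
modalities = ["screen", "webcam", "mouse"]
tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
query = [0.0, 2.0, 0.0, 0.0]  # a hypothetical "engagement" query aligned with the webcam token
fused, weights = attend(query, tokens, tokens)
print(dict(zip(modalities, (round(w, 3) for w in weights))))
```

Because the weights are recomputed for every input, the model can lean on the webcam when the face is visible and on mouse or screen activity when it is not.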

Privacy-Preserving Processing

Landmark extraction tracks only 68 facial points instead of full video. Studies report that this largely preserves detection accuracy:

Wang et al. (2024): 95% of full-video performance

Aly (2024): Within 5% margin

Cangialosi et al. (2022): Within 5% margin

This supports GDPR and HIPAA compliance while still detecting behaviors like fatigue or confusion.
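To illustrate how a behavior like fatigue can be read from landmarks alone, the sketch below computes the widely used eye aspect ratio (EAR) from the six eye points of the common 68-point scheme (0-based indices 36-41 and 42-47) and flags prolonged eye closure. This is an assumed example cue, not the method of the cited studies; the 0.2 threshold and 15-frame run length are illustrative values, not validated ones.

```python
from math import dist

RIGHT_EYE = range(36, 42)  # 0-based indices in the common 68-point scheme
LEFT_EYE = range(42, 48)


def eye_aspect_ratio(eye):
    """EAR for six eye landmarks p1..p6: (|p2-p6| + |p3-p5|) / (2 |p1-p4|)."""
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def mean_ear(landmarks):
    """landmarks: 68 (x, y) points for one frame; no pixels are needed."""
    right = eye_aspect_ratio([landmarks[i] for i in RIGHT_EYE])
    left = eye_aspect_ratio([landmarks[i] for i in LEFT_EYE])
    return (right + left) / 2.0


def prolonged_closure(ear_series, threshold=0.2, min_frames=15):
    """True if EAR stays below threshold for min_frames consecutive frames.

    Both defaults are illustrative assumptions.
    """
    run = 0
    for ear in ear_series:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False


# A synthetic open-eye shape placed at both eye positions of a 68-point frame.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
frame = [(0.0, 0.0)] * 68
frame[36:42] = open_eye
frame[42:48] = [(x + 10, y) for x, y in open_eye]
print(round(mean_ear(frame), 3))
```

Only the per-frame EAR values need to leave the device, which is the privacy property the landmark-only approach relies on.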

4. VP's Perspective: Why Anti-pattern Detection is Vital

For decades, we've struggled to measure knowledge work productivity. We tried counting lines of code—only to discover that less code often means better design (Das, 2024). We measured GitHub Copilot's impact on developer speed—finding 55% faster task completion but no insight into code quality or long-term maintainability (Ziegler et al., 2024). Every metric we created could be gamed, every dashboard told a partial story.

Anti-pattern detection fundamentally changes this equation. Instead of counting outputs that may or may not matter, we're finally identifying the behaviors that predict real productivity. When we find that “AI-first” engineers double the productivity of engineers who use AI less than 50% of the time, or that 80% of “activity theater” stems from unclear objectives rather than poor performance, we're measuring what actually drives outcomes.

What Organizations Must STOP:

Measuring productivity by activity metrics (commits, emails, meeting hours)

Assuming technology adoption happens automatically after training

Blaming individuals for systemic workflow problems

Deploying surveillance without clear objectives or employee input

What Organizations Must START:

Piloting anti-pattern detection in volunteer departments first

Correlating behavioral patterns with actual business outcomes

Using detection insights to fix processes, not punish people

Building privacy-first architectures from day one

What Organizations Must CONTINUE:

Investing in employee development and clear communication

Respecting privacy while improving productivity

Focusing on outcomes over activity

Building trust through transparency

This is about finally having a metric that is far harder to fake. Anti-pattern detection delivers what we've needed: honest, actionable insight into how work really gets done.

5. Enterprise Implementation Results

The Architecture That Makes It Real: Previous attempts at behavioral analytics failed because they couldn't process video in real time at scale; analyzing every frame meant compute costs that climbed with every camera added. WorkSmart's breakthrough solves the fundamental business problem of making behavioral analytics economically viable. By using a cascade architecture that reduces computational load by 90%, it lets organizations monitor hundreds of employees simultaneously without building a data center.

This architecture maintains 95% precision while making real-time deployment feasible (Egglestone et al., 2025).
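The text does not describe WorkSmart's actual cascade stages, so the sketch below shows only the generic idea behind cascade savings: a cheap check runs on every frame, and the expensive model runs only when that check fires, with unchanged frames reusing the last label. The frame format, the motion gate, and the `AT_DESK` label are hypothetical; `AWAY_FROM_SEAT` is taken from the reported classes. The toy run sends 20% of frames to the expensive stage and does not reproduce the reported 90% figure.

```python
def run_cascade(frames, changed, classify, initial="UNKNOWN"):
    """Two-stage cascade over a frame stream.

    Stage 1 (`changed`) is a cheap check run on every frame; stage 2
    (`classify`) is the expensive model, run only when stage 1 fires.
    Frames that do not trigger stage 2 reuse the most recent label.
    Returns (labels, fraction_of_frames_sent_to_stage_2).
    """
    labels, heavy_calls, state = [], 0, initial
    for frame in frames:
        if changed(frame):
            state = classify(frame)
            heavy_calls += 1
        labels.append(state)
    return labels, (heavy_calls / len(frames) if frames else 0.0)


# Hypothetical frame summaries: a motion score from cheap frame differencing
# and a presence flag that, in practice, only the expensive model would produce.
frames = ([{"motion": 0.5, "person": True}] + [{"motion": 0.0, "person": True}] * 4
          + [{"motion": 0.6, "person": False}] + [{"motion": 0.0, "person": False}] * 4)
labels, heavy_share = run_cascade(
    frames,
    changed=lambda f: f["motion"] > 0.1,
    classify=lambda f: "AT_DESK" if f["person"] else "AWAY_FROM_SEAT",
)
print(labels[-1], heavy_share)
```

The trade-off is that a weak stage-1 gate can miss slow changes, which is one reason recall can lag precision in cascaded detectors.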

Actual detection performance:

AWAY_FROM_SEAT: Precision 0.9522, Recall 0.8906

IDLING_NO_WEBCAM: Precision 0.7583, Recall 0.9859
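The two classes trade precision against recall differently, and F1 (the harmonic mean of precision and recall) summarizes each in a single number. The short calculation below uses only the reported figures; the helper name is ours.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


reported = {
    "AWAY_FROM_SEAT": (0.9522, 0.8906),
    "IDLING_NO_WEBCAM": (0.7583, 0.9859),
}
for label, (p, r) in reported.items():
    print(f"{label}: F1 = {f1_score(p, r):.3f}")
```

This yields F1 ≈ 0.920 for AWAY_FROM_SEAT and ≈ 0.857 for IDLING_NO_WEBCAM. The second class catches almost every idle period, but a precision of 0.7583 means roughly one in four of its alerts is a false positive, so those alerts are better suited to aggregate trends than to individual follow-up.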

6. Limitations and Failure Modes

Privacy Failures

Despite landmark-only processing, risks remain:

Behavioral patterns can identify individuals even without facial features

Metadata aggregation enables re-identification across systems

Legal frameworks lag technical capabilities in most jurisdictions

Controversial Evidence

Key studies show conflicting results:

Webcam engagement detection varies from 61% (Mortezapour Shiri et al., 2024) to 97% (Aly, 2025) depending on conditions

Healthcare equity claims rest on a single 145-consultation study (Bedmutha et al., 2024)

Enterprise productivity improvements lack longitudinal validation beyond 6 months

Ethical Concerns

The technology enables concerning applications:

Continuous performance ranking of employees

Automated termination recommendations

Behavioral profiling for hiring decisions

Despite these limitations, the technology demonstrates sufficient accuracy for specific, well-bounded applications with proper oversight.

7. ROI & Strategic Outlook

Tangible gains to date

Education → +11% quiz-score lift after real-time passive-viewing prompts across 10,000 sessions (Kharytonenka et al., 2025).

Healthcare → +7 percentage-point boost in post-visit adherence when dialogue balance is corrected (145 consultations; Bedmutha et al., 2024).

Enterprise → Measurable rise in AI-tool adoption following targeted nudges, reducing the “software shelf-ware” problem (Egglestone et al., 2025).

Possible development trajectories

Ambient clinical co‑pilot — dashboards rebalance dialogue and surface empathetic prompts as consultations unfold.

Edge‑private classroom mentor — on‑device sensing spots confusion clusters and streams micro‑exercises to the instructor.

Agent‑in‑the‑loop DevOps — IDE plug‑ins detect collaboration friction and draft concise follow‑ups before pull‑requests stall.

Flow‑aware productivity coach — wearables and calendars flag focus drift, then suggest schedule resets or energy‑matched tasks.

Ethical auditing layer — privacy‑preserving analytics trace model decisions, producing human‑readable rationale trails for compliance.

Where the technology is going

Multimodal by default — wearables, calendars and workflow logs feed transformer models, extending from eye‑tracking to holistic flow‑of‑work intelligence.

Edge privacy as baseline — landmark‑only inference retains near‑full accuracy while slashing risk and bandwidth.

Latency budgets aligned to action horizons: sub‑second for safety alerts, seconds for live‑learning prompts, minutes for productivity drift, and under an hour for coaching loops.
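Latency budgets like these are easiest to enforce when they live in one explicit table that every alert is checked against. In the sketch below, the sub-second and under-an-hour limits follow the text, while the 10-second and 15-minute ceilings chosen for "seconds" and "minutes" are assumptions; the action names and helpers are hypothetical.

```python
# Upper bounds in seconds for each action horizon. "Sub-second" and
# "under an hour" follow the text; the 10 s and 15 min ceilings used for
# "seconds" and "minutes" are assumptions.
LATENCY_BUDGET_S = {
    "safety_alert": 1.0,
    "live_learning_prompt": 10.0,
    "productivity_drift": 15 * 60.0,
    "coaching_loop": 60 * 60.0,
}


def within_budget(action: str, latency_s: float) -> bool:
    """True if an alert for `action` arrived inside its latency budget."""
    return latency_s < LATENCY_BUDGET_S[action]


def budget_violations(events):
    """events: iterable of (action, latency_s) pairs; returns those that missed their budget."""
    return [(action, lat) for action, lat in events if not within_budget(action, lat)]


print(budget_violations([("safety_alert", 0.4), ("live_learning_prompt", 30.0),
                         ("coaching_loop", 1800.0)]))
```

Matching the budget to the action horizon also guides placement: sub-second alerts generally need on-device inference, while hour-scale coaching loops can tolerate batch processing.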

Deployment playbook

Pilot → Department → Enterprise — phased roll‑outs with opt‑out lanes and agreed baselines.

Ethics gate — privacy reviews, data minimisation, and clear employee briefings precede launch.

Outcome pact — every alert anchors to a business KPI; vanity metrics removed at the outset.

8. Conclusion

Behavioral anti-pattern detection has crossed the line from lab curiosity to operational differentiator:

Proven Accuracy: 62-95% across education, healthcare, and enterprise use-cases.

Demonstrated Equity: <5% demographic variance in a 145-consultation clinical study

Enterprise Adoption: pilots expose adoption gaps and drive organisation-level improvements in task clarity and training pathways.

Compelling Economics: 90% compute savings via cascade architectures unlock fleet-scale monitoring without fleet-scale budgets.

Market Momentum: video analytics is projected to reach $50.8 billion by 2032 (Fortune Business Insights, 2025; Future Market Insights, 2024)

Five non-negotiables for successful adoption

Laser-focus objectives – solve a named pain-point, never “boil the ocean.”

Privacy-first design – landmark-only or on-device inference as norm, not feature.

Transparent governance – ethics board, employee opt-in, audit trails.

Change-management runway – 5-7 years to full cultural embedding; sprint planning won’t cut it.

Continuous validation – A/B every intervention, retire any signal that drifts.
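"A/B every intervention" can start with a standard two-proportion z-test comparing a success rate between a control group and a group that received an intervention, for example the share of sessions passing a quiz with and without passive-viewing prompts. The sketch below is that textbook test, with hypothetical pilot counts; it covers only the A/B half of the rule, not drift monitoring.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided two-proportion z-test with pooled variance.

    Group A is the control, group B received the intervention.
    Returns (z, p_value); a positive z means B's rate is higher.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all-success or all-failure in both groups: no evidence either way
    z = (p_b - p_a) / se
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return z, 2 * (1 - phi)


# Hypothetical pilot: 500/1000 control sessions pass vs. 560/1000 with prompts.
z, p = two_proportion_z_test(500, 1000, 560, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Fixing the sample size and success metric before the pilot starts keeps this test honest; peeking at results and stopping early inflates false positives.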

Done poorly, anti-pattern detection becomes yet another surveillance tool that burns trust faster than it burns compute. Done right, it turns invisible inefficiencies into compound gains in learning, care, and knowledge-work throughput.

Appendices

Appendix A: Anti-pattern Characteristics

Appendix B: Organizational Change Timeline

References Table

*Data from Kharytonenka’s and Egglestone’s teams was kindly provided to me before their research was published, so I am unable to share the full tables yet. Watch this space: once the data becomes available, we will discuss it in full depth.
