Executive Summary

Commercial visual-testing Software as a Service (SaaS) platforms currently offer the most reliable path to pixel-perfect regression detection. In a recent study, Percy achieved a 100% diff-detection rate across 260 comparisons with only 2.5 hours of setup [6]. AI-vision prototypes built on models such as GPT-4o and Gemini are starting to add semantic understanding, but they still fall short on baseline management, cross-browser infrastructure, and result consistency [7]. The GPT-4o and Gemini prototypes, for instance, identified some injected CSS bugs but missed subtle pixel shifts and produced inconsistent outputs [8].

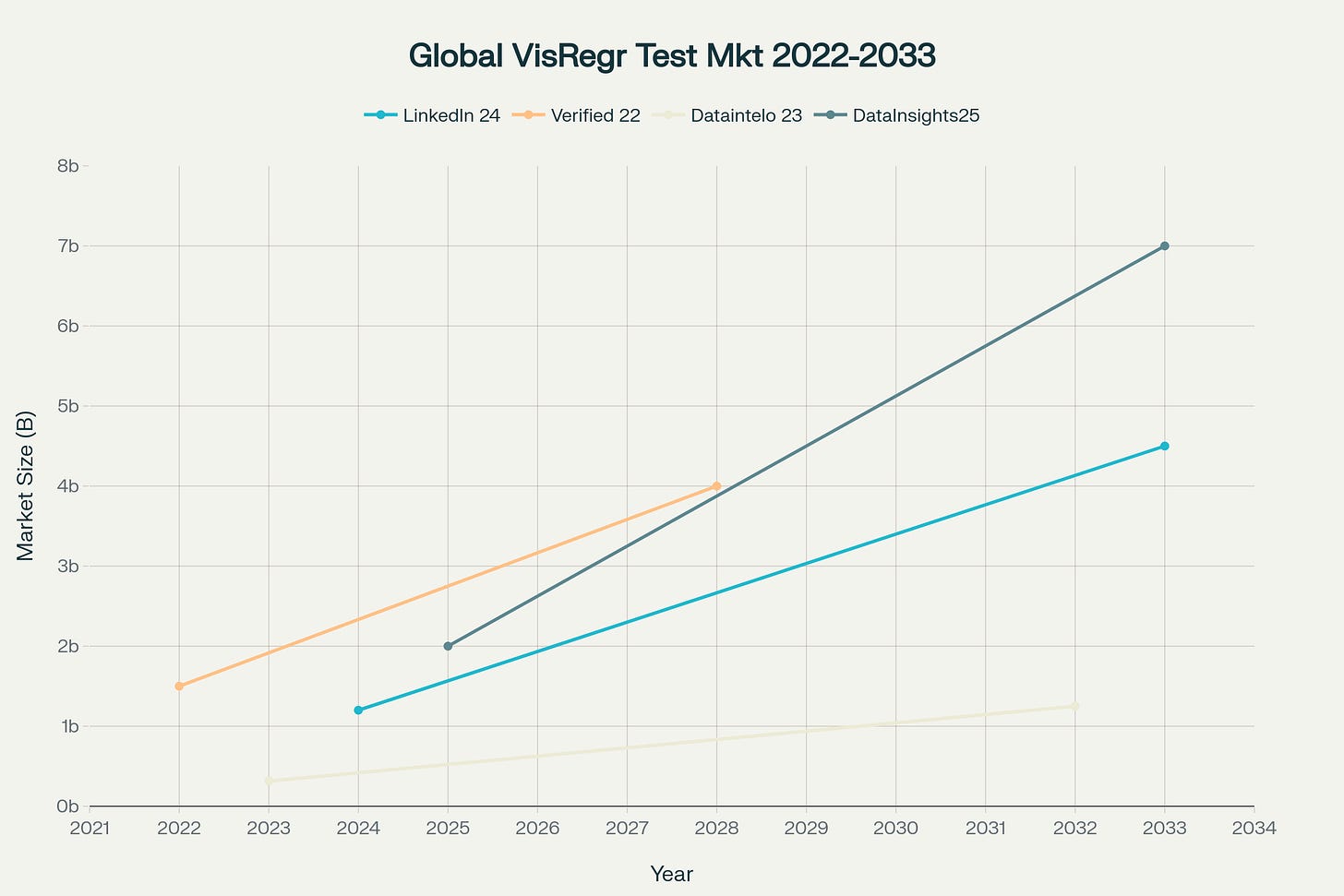

The market is increasingly favoring hybrid workflows. SaaS tools are integrating AI to reduce false positives, while LLM vendors are working to lower costs and latency. Analysts forecast a Compound Annual Growth Rate (CAGR) of 15-17% for visual-regression solutions through 2033 [9]. A key opportunity lies in marrying commercial baselines and branching capabilities with LLM-generated natural-language diff reports and accessibility hints.

1. Why Visual AI Matters Now

Rapid advances in computer vision and multimodal large‑language models (LLMs) have shaken up UI verification. The days of running thousands of pixel‑diff tests, often with a human in the loop, are disappearing. New AI techniques promise to cut both test volume and review time, but only if they are deployed thoughtfully. This analysis pairs hands‑on experiments with broader market observations; all raw data lives in the public repo: https://github.com/dp-pcs/TestableApp.

1.1 Business Stakes

First impressions of a user interface can form in as little as 50 milliseconds. Visual bugs, such as a hidden mobile "Checkout" button, can severely impact conversion rates, leading to revenue loss and damage to brand trust.

1.2 Traditional Pixel Diffing

Traditional tools capture baseline screenshots and then compare new builds pixel by pixel. While excellent for precision, they often generate a high volume of "noise" from benign changes like minor font rendering variations, overwhelming Quality Assurance (QA) teams.
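To make the precision-versus-noise trade-off concrete, here is a minimal pure-Python sketch of pixel-by-pixel comparison. It assumes screenshots are already decoded into equally sized rows of RGB tuples (real tools read image files and are heavily optimized), and the `pixel_diff` name and `tolerance` parameter are illustrative, not any tool's API.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel 0-255
Image = List[List[Pixel]]     # rows of pixels, top to bottom


def pixel_diff(baseline: Image, candidate: Image, tolerance: int = 0) -> List[Tuple[int, int]]:
    """Return (x, y) coordinates where any channel differs by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("baseline and candidate must have identical dimensions")
    changed = []
    for y, (row_a, row_b) in enumerate(zip(baseline, candidate)):
        for x, (pa, pb) in enumerate(zip(row_a, row_b)):
            # The largest per-channel difference decides whether the pixel changed.
            if max(abs(ca - cb) for ca, cb in zip(pa, pb)) > tolerance:
                changed.append((x, y))
    return changed


WHITE, BLACK = (255, 255, 255), (0, 0, 0)
baseline = [[WHITE, BLACK, WHITE]]
candidate = [[WHITE, (8, 8, 8), WHITE]]  # faint rendering difference on one pixel

print(pixel_diff(baseline, candidate))                # [(1, 0)]
print(pixel_diff(baseline, candidate, tolerance=10))  # []
```

With `tolerance=0` the faint rendering change is flagged; a small per-channel tolerance suppresses it, at the cost of also hiding genuinely subtle color changes.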

1.3 Rise of Visual AI

Modern visual AI engines utilize computer vision heuristics or deep neural networks to intelligently ignore non-critical changes (like anti-aliasing) and identify meaningful layout shifts. This approach aims to reduce false positives and provide more relevant feedback to testers [10].
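Vendors do not publish their models, so the sketch below swaps in a much cruder heuristic purely to illustrate the idea of suppressing non-critical changes: it discards changed pixels that have no changed neighbours, since isolated specks are typical of anti-aliasing or sub-pixel text rendering. The `drop_isolated` function and its `min_neighbors` threshold are hypothetical, and this is not how any particular product works.

```python
from typing import Iterable, Set, Tuple

Coord = Tuple[int, int]  # (x, y) of a pixel reported as changed


def drop_isolated(changed: Iterable[Coord], min_neighbors: int = 1) -> Set[Coord]:
    """Keep changed pixels with at least `min_neighbors` changed pixels among
    their eight neighbours; lone specks are treated as rendering noise."""
    changed_set = set(changed)
    kept = set()
    for x, y in changed_set:
        neighbours = sum(
            (x + dx, y + dy) in changed_set
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
        )
        if neighbours >= min_neighbors:
            kept.add((x, y))
    return kept


# A lone speck at (0, 0) and a two-pixel edge change at (10, 10)-(11, 10).
print(sorted(drop_isolated({(0, 0), (10, 10), (11, 10)})))  # [(10, 10), (11, 10)]
```

The weakness is visible immediately: a genuine one-pixel change would be discarded along with the noise, which is why real engines need far more context than a neighbour count.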

2. What the Traditional Toolchain Gives You (and Doesn’t)

3. Where Commercial Visual Platforms Excel

Applitools and Percy bolt seamlessly onto your CI pipeline, diff screenshots across browsers, and surface issues through rich dashboards. While I didn’t run a head‑to‑head on integration breadth, industry docs make it clear that both vendors can marry functional and visual assertions into one approval flow. You don’t get that out of the box from Playwright, Cypress, or Selenium alone, although all three frameworks integrate with both Applitools and Percy.

4. The DIY Temptation: LLMs + Playwright

My initial hypothesis: skip the SaaS bill by piping Playwright screenshots into vision‑capable LLMs (GPT‑4o, Gemini). In theory:

Playwright → drives the app and captures screenshots.

LLM API → compares reference vs. candidate images and replies “same” or “different.”

Voilà, DIY visual testing with no per‑screenshot fees. Brilliant and guaranteed to work, right? Right?
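The two-step pipeline can be sketched in a few lines. This minimal version assumes an OpenAI-style chat-completions request body with base64 data-URL images (the `image_url` content format); the HTTP call itself is omitted, and the prompt text and helper names (`build_compare_request`, `parse_verdict`) are hypothetical. Even this toy shows where the hidden work begins: the model answers in free text, which must be coerced into a machine-readable verdict, and anything unparseable has to be treated as a failure.

```python
import base64
import re
from typing import Any, Dict


def _data_url(png_bytes: bytes) -> str:
    """Encode raw PNG bytes as a data URL for inline image input."""
    return "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")


def build_compare_request(reference_png: bytes, candidate_png: bytes,
                          model: str = "gpt-4o") -> Dict[str, Any]:
    """Assemble a chat-completions style request body comparing two screenshots.
    The prompt wording is a hypothetical example; sending the request is omitted."""
    prompt = ("The first image is the reference UI and the second is the candidate. "
              "Reply with exactly one word: same or different.")
    return {
        "model": model,
        "temperature": 0,  # lowers, but does not remove, run-to-run variation
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": _data_url(reference_png)}},
                {"type": "image_url", "image_url": {"url": _data_url(candidate_png)}},
            ],
        }],
    }


def parse_verdict(reply: str) -> str:
    """Map free-text model output onto 'same', 'different', or 'unknown'."""
    match = re.match(r"\s*(same|different)\b", reply, re.IGNORECASE)
    return match.group(1).lower() if match else "unknown"


print(parse_verdict("Different."))                # different
print(parse_verdict("Same, no visible changes"))  # same
print(parse_verdict("They look alike to me"))     # unknown
```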

5. Experimental Findings

A comparative study evaluated commercial visual testing platforms against custom LLM-driven prototypes across several metrics [11].

UI Testing Approaches Comparison

5.1 Detection Accuracy

Percy and Applitools demonstrated high accuracy, flagging every injected regression [12]. In contrast, the GPT-4o prototype missed subtle one-pixel shifts, although it did correctly identify a disabled "Save" button [13].

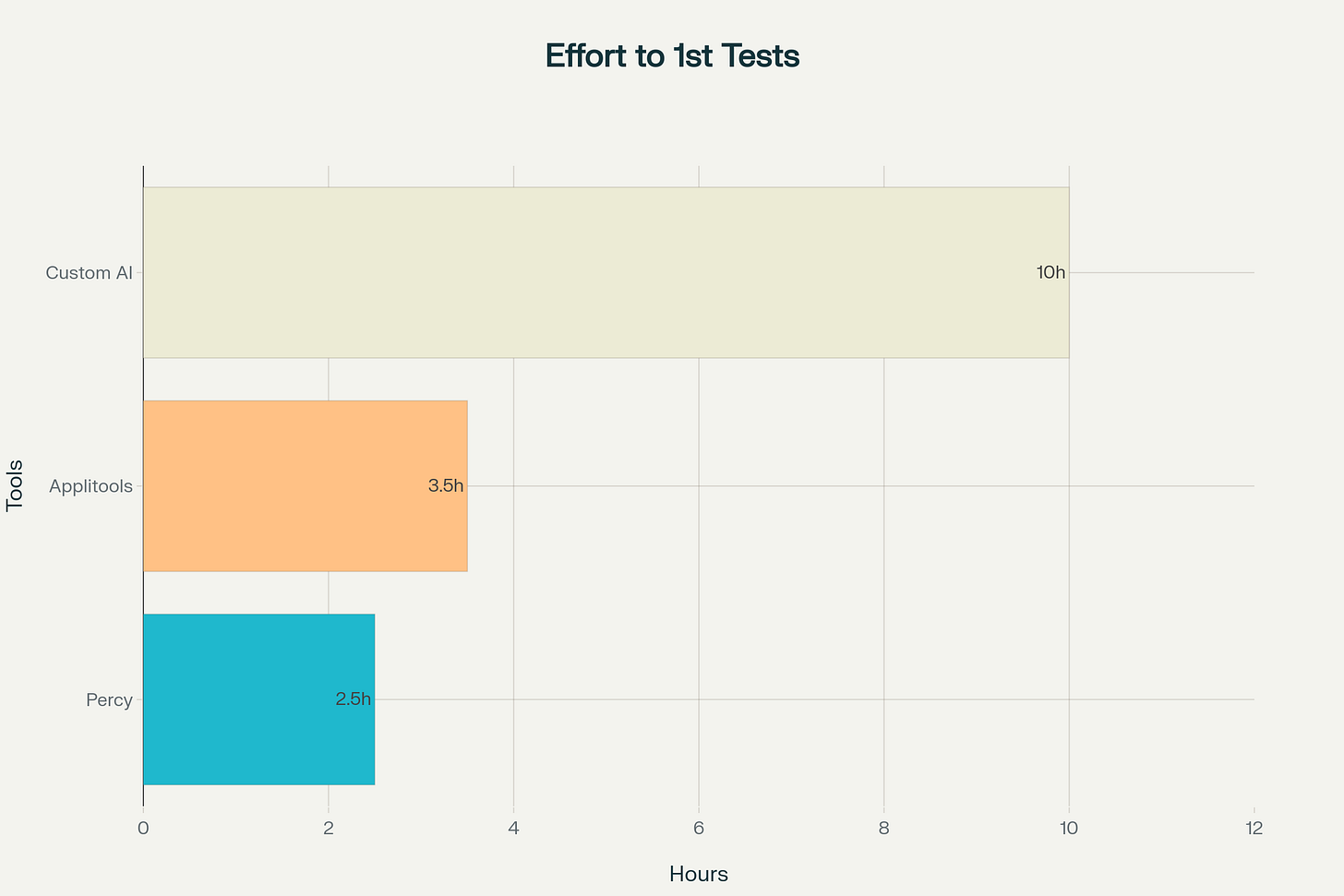
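A comparison like this rests on a simple scoring harness. The sketch below assumes each tool's output has already been reduced to a set of flagged bug IDs; the IDs, tool names, and outputs are hypothetical placeholders, not the study's data.

```python
from typing import Dict, Set


def detection_report(injected: Set[str], flagged: Dict[str, Set[str]]) -> Dict[str, dict]:
    """Per tool: share of injected bugs flagged, bugs missed, and unexpected flags."""
    report = {}
    for tool, hits in flagged.items():
        report[tool] = {
            "detection_rate": len(injected & hits) / len(injected),
            "missed": sorted(injected - hits),
            "false_positives": sorted(hits - injected),
        }
    return report


# Hypothetical bug IDs and tool outputs, for illustration only.
injected_bugs = {"shift-1px", "disabled-save", "color-swap"}
tool_outputs = {
    "tool_a": {"shift-1px", "disabled-save", "color-swap"},
    "tool_b": {"disabled-save", "color-swap", "footer-noise"},
}
for tool, row in detection_report(injected_bugs, tool_outputs).items():
    print(f"{tool}: {row['detection_rate']:.0%}, missed={row['missed']}, "
          f"false positives={row['false_positives']}")
```

Tracking false positives alongside misses matters because a tool that flags everything trivially reaches a 100% detection rate.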

5.2 Engineering Effort

Commercial SaaS tools provided essentially turnkey solutions for baseline management, Command Line Interface (CLI) integration, and approval workflows [14]. Building equivalent infrastructure for LLM-based testing required significant bespoke scripting, prompt tuning, and image-coordinate mapping [15].
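Image-coordinate mapping is one example of that bespoke work. When a model is asked for a bounding box, its coordinates refer to the image it received, which may have been downscaled before upload and was captured at the device pixel ratio (DPR). The sketch below assumes the model returns pixel coordinates in the uploaded image's space (itself an assumption worth validating) and maps them back to CSS pixels; `Box` and `model_box_to_css` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Box:
    x: float
    y: float
    width: float
    height: float


def model_box_to_css(box: Box, sent_size: Tuple[int, int],
                     screenshot_size: Tuple[int, int],
                     device_pixel_ratio: float) -> Box:
    """Map a box given in the pixel space of the image sent to the model back to
    CSS pixels: undo the pre-upload resize, then divide out the device pixel ratio."""
    scale_x = screenshot_size[0] / sent_size[0] / device_pixel_ratio
    scale_y = screenshot_size[1] / sent_size[1] / device_pixel_ratio
    return Box(box.x * scale_x, box.y * scale_y,
               box.width * scale_x, box.height * scale_y)


# A 2560x1600 screenshot taken at DPR 2 (a 1280x800 CSS viewport),
# downscaled to 1024x640 before upload.
css_box = model_box_to_css(Box(100, 50, 200, 40), (1024, 640), (2560, 1600), 2.0)
print(css_box)  # Box(x=125.0, y=62.5, width=250.0, height=50.0)
```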

5.3 Cost Dynamics

While LLM vision APIs generally offer a lower cost per image, they transfer a considerable maintenance burden to the development team [16]. Hidden expenses can include GPU inference time during Continuous Integration (CI) and the need for re-prompting to stabilize outputs [17].
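A back-of-the-envelope model makes the re-prompting cost explicit. This sketch assumes every screenshot is sent once per CI run and that each call independently needs a re-prompt with probability `reprompt_rate`, up to `max_attempts` calls, so the expected number of calls per image is 1 + p + p² + … + p^(k-1). All example inputs are made-up placeholders rather than vendor prices, and engineering time is deliberately left out.

```python
def expected_calls(reprompt_rate: float, max_attempts: int) -> float:
    """Expected API calls per image: 1 + p + p^2 + ... + p^(k-1)."""
    return sum(reprompt_rate ** i for i in range(max_attempts))


def monthly_api_cost(images_per_run: int, runs_per_month: int, price_per_call: float,
                     reprompt_rate: float, max_attempts: int = 3) -> float:
    """API spend only; excludes prompt tuning, maintenance, and any self-hosted GPU time."""
    calls = images_per_run * runs_per_month * expected_calls(reprompt_rate, max_attempts)
    return calls * price_per_call


# Hypothetical inputs: 200 screenshots per run, 300 CI runs a month,
# $0.01 per call, 20% of calls needing a re-prompt.
cost = monthly_api_cost(200, 300, 0.01, 0.2)
print(f"${cost:,.2f} per month")  # about $744 with these placeholder numbers
```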

5.4 Why Do Commercial Tools Outperform?

The platforms never reveal their secret sauce, but likely factors include:

Custom CV models trained on UI artifacts, not general imagery.

Heuristic noise filters (anti‑aliasing, animation frames) that cut false positives.

Domain‑specific prompt engineering or layered diff passes invisible to end‑users.

Replicating that stack with raw LLM APIs would demand non‑trivial R&D.
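The "layered diff passes" idea can be illustrated without any machine learning. This sketch assumes a first pass has already produced a set of changed pixel coordinates; a second pass groups them into connected regions and drops any region that touches a configured ignore zone, such as an ad slot. It is a hypothetical stand-in, since the commercial platforms do not disclose what they actually do.

```python
from collections import deque
from typing import Iterable, List, Set, Tuple

Coord = Tuple[int, int]
Rect = Tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def cluster_regions(changed: Iterable[Coord]) -> List[Rect]:
    """Group 8-connected changed pixels into bounding rectangles (breadth-first search)."""
    remaining: Set[Coord] = set(changed)
    regions: List[Rect] = []
    while remaining:
        seed = remaining.pop()
        queue = deque([seed])
        xs, ys = [seed[0]], [seed[1]]
        while queue:
            x, y = queue.popleft()
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbour = (x + dx, y + dy)
                    if neighbour in remaining:
                        remaining.remove(neighbour)
                        queue.append(neighbour)
                        xs.append(neighbour[0])
                        ys.append(neighbour[1])
        regions.append((min(xs), min(ys), max(xs), max(ys)))
    return regions


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two inclusive rectangles share at least one pixel."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def reportable_regions(changed: Iterable[Coord], ignore: List[Rect]) -> List[Rect]:
    """Second pass: drop regions that touch an ignore zone (e.g. an ad slot)."""
    return sorted(r for r in cluster_regions(changed)
                  if not any(overlaps(r, zone) for zone in ignore))


changed_pixels = {(1, 1), (2, 1), (2, 2), (50, 5), (51, 5)}
ad_slot = (40, 0, 60, 20)
print(reportable_regions(changed_pixels, [ad_slot]))  # [(1, 1, 2, 2)]
```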

6. Initial Tool Exploration and Challenges

My initial aim was to evaluate a broader set of AI tools for UI testing using vision. The top candidates identified were Applitools, Percy (BrowserStack), Functionize, Testim, testRigor, Reflect, and LambdaTest. Each of these is recognized for leveraging computer vision and visual AI to detect UI issues, regressions, and inconsistencies across web and mobile applications.

Applitools: Widely recognized as an industry leader in visual AI testing [1], Applitools offers a Visual AI engine, "Eyes," that uses advanced machine learning to compare UI screenshots, intelligently ignore minor or dynamic changes, and highlight meaningful visual differences [2]. It integrates with all major test frameworks (Selenium, Cypress, Playwright, etc.) [3] and supports web, mobile, PDF, and component testing. It also provides autonomous test creation and self-healing capabilities, making it a go-to for enterprise-grade visual validation.

Percy (BrowserStack): Percy provides automated visual regression testing by capturing DOM snapshots and comparing pixel diffs across browsers and viewports. It features parallel screenshot processing, a rich diff review UI, smart grouping to ignore dynamic or flaky content, and seamless integration with Playwright, Cypress, and Selenium.

Functionize: Functionize uses computer vision and machine learning to make tests robust and sensitive to the right degree, with the stated goal of eliminating flaky results [11]. Its visual testing verifies every part of the UI, detecting even granular changes while modeling the timing thresholds of real user experiences. It also provides detailed waterfall displays of per-element load times and offers enterprise-grade scalability for large-scale testing needs.

Testim (Tricentis Testim): Testim uses AI and machine learning to create, execute, and maintain UI tests. Its smart locators and self-healing features adapt to UI changes, reducing test flakiness and maintenance. Testim's visual validation features allow for pixel-perfect comparisons and visual regression detection, making it a strong choice for teams seeking robust, scalable, and low-code UI automation.

testRigor: testRigor is a generative AI-powered tool that enables visual UI testing using plain English commands [4]. Its Vision AI can interpret UI elements contextually, handle dynamic content, and perform OCR-based validations [5]. It's especially useful for teams looking for codeless, cross-platform, and highly maintainable visual test automation.

Reflect: Reflect is a no-code, AI-driven visual regression testing platform. It uses generative AI to create and maintain tests, automatically adapts to UI changes, and provides visual approval workflows. Reflect is ideal for teams that want to minimize manual test maintenance and maximize visual coverage without writing code.

LambdaTest: LambdaTest offers AI-powered visual regression testing in the cloud, supporting automated screenshot comparisons across 3000+ browsers and devices. Its smart visual comparison engine highlights only meaningful UI changes, reducing noise and false positives. LambdaTest is popular for its scalability, real device coverage, and seamless CI/CD integrations.

However, conflicting information about which of these tools genuinely use AI vision for UI testing, combined with the significant time already invested in setting up the test environment and integrating Applitools and Percy, led me to narrow the scope of the study. Of the tools not fully explored, Functionize was of particular interest, but having to schedule a sales call to get a free trial proved to be a barrier.

7. Interpreting Analyst Forecasts

Visual-testing expenditure is projected to quadruple this decade, a trend driven by the increasing adoption of DevOps practices and the augmentation of testing processes with AI. For instance, the AI in Software Testing market is projected to grow from $1.9 billion in 2023 to $10.6 billion by 2033 with an 18.7% CAGR [18]. The Visual Regression Testing market is forecasted to grow from $315 million in 2023 to $1.25 billion by 2032 at a 16.5% CAGR [19]. Venture reports highlight three converging trends shaping this growth:

Smart branching & baselines: Modern tools now track Git branches and can automatically promote accepted snapshots, reducing merge conflicts and pain points in development workflows.

AI-driven triage: Visual AI systems are becoming more adept at clustering diffs, automatically ignoring irrelevant elements like ad slots, and even drafting pull request comments, thereby reducing manual review effort.

Multimodal LLMs: Models such as GPT-4o and Gemini are enabling natural-language queries (e.g., "Is the hero banner readable?"), UI element locating, and automated accessibility captioning.
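The growth rates quoted at the start of this section can be sanity-checked with the standard compound-annual-growth-rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch simply plugs in those published figures.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in this section (USD).
print(f"Visual regression testing, 2023-2032: {cagr(315e6, 1.25e9, 9):.1%}")  # 16.5%
print(f"AI in software testing, 2023-2033: {cagr(1.9e9, 10.6e9, 10):.1%}")    # 18.8%
```

The visual-regression figures reproduce the quoted 16.5%, and a roughly fourfold increase over nine years matches the "quadruple" claim. The AI-in-testing figures give about 18.8%, a hair above the quoted 18.7%, which is likely down to rounding in the published endpoints.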

8. Practical Recommendations

8.1 Short Term (next 6 months)

Continue to utilize commercial platforms like Percy or Applitools as the foundation for regression testing.

Experiment with integrating GPT-4o or Gemini into a sidecar script that processes Percy diffs and generates easily understandable summaries for designers.

Capture additional metadata, such as WCAG contrast ratios and responsive breakpoints, to provide more context for LLMs.
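For the contrast-ratio metadata, WCAG 2.x defines a precise formula based on relative luminance. The sketch below implements that formula and bundles the result with a viewport width into a dictionary that a sidecar script could attach to a diff before asking an LLM for a designer-friendly summary. `diff_context` and its field names are hypothetical, and no Percy or LLM API is called.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """(L1 + 0.05) / (L2 + 0.05), with L1 the lighter of the two luminances."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def diff_context(region: str, fg: RGB, bg: RGB, viewport_width: int) -> Dict[str, object]:
    """Hypothetical metadata bundle to send alongside a visual diff."""
    ratio = contrast_ratio(fg, bg)
    return {
        "region": region,
        "viewport_width_px": viewport_width,
        "contrast_ratio": round(ratio, 2),
        "meets_wcag_aa_normal_text": ratio >= 4.5,  # WCAG AA threshold for body text
    }


# Mid-grey #777777 text on white at a 375 px mobile breakpoint.
print(diff_context("checkout-button", (119, 119, 119), (255, 255, 255), 375))
```

Mid-grey #777777 on white lands just under the 4.5:1 AA threshold, exactly the kind of borderline fact a pixel diff cannot express but an LLM summary could surface.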

8.2 Medium Term (6-18 months)

Explore vision fine-tuning on project-specific screenshots to reduce "hallucinations" (inaccurate outputs) from AI models.

Evaluate hybrid platforms that combine baseline infrastructure with semantic diffing capabilities, such as Sauce Visual with AI, Functionize, or Keysight Eggplant [20]. This includes considering strategic alliances like the one between Eggplant and Sauce Labs for AI-driven automated testing [21].

8.3 Long Term

Plan for the implementation of autonomous agent workflows where multimodal models can both perform exploratory clicks and generate new visual tests.

Allocate budget for larger snapshot datasets to train organization-specific vision-language models (VLMs), especially if on-premises privacy is a concern.

9. Limitations & Future Work

Note that the study ran on a single React demo app and involved only eight synthetic CSS bugs, which may not fully represent the complexity of live production environments. Future work should focus on:

Scaling breadth: Expanding testing to include mobile interfaces, dark modes, Right-to-Left (RTL) locales, and animations.

Double-blind prompts: Systematically varying vision prompts and measuring the variance in results.

Performance & flakiness: Tracking the consistency of LLM outputs across 30 or more CI runs to quantify non-determinism.
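For the flakiness measurement, one simple metric is per-test agreement: the share of runs that return that test's most common verdict. The sketch assumes verdicts have already been normalized to strings such as "same" or "different"; the test names and run data are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def consistency_report(verdicts: Dict[str, List[str]]) -> Dict[str, float]:
    """Share of runs agreeing with each test's most common verdict (1.0 = deterministic)."""
    return {
        test: Counter(runs).most_common(1)[0][1] / len(runs)
        for test, runs in verdicts.items()
    }


def flaky_tests(verdicts: Dict[str, List[str]], threshold: float = 1.0) -> List[str]:
    """Tests whose agreement falls below `threshold`."""
    return sorted(t for t, score in consistency_report(verdicts).items() if score < threshold)


# Hypothetical verdicts from 30 CI runs of the same LLM comparison.
runs = {
    "header": ["same"] * 30,
    "checkout": ["different"] * 27 + ["same"] * 3,
}
print(consistency_report(runs))  # {'header': 1.0, 'checkout': 0.9}
print(flaky_tests(runs))         # ['checkout']
```

The same report works for the prompt-variation experiment: treat each prompt variant as a "run" and compare agreement across variants instead of across CI executions.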

Conclusion

While pixel-diff SaaS tools currently remain dominant for mission-critical UI regression testing because they pair accuracy with industrial baseline management [22], AI vision is advancing rapidly. Semantically aware LLMs are already capable of describing visual differences, suggesting fixes, and even driving UI flows.

The most effective strategy today is a layered approach: leveraging battle-hardened visual platforms to handle baselines while AI augments insight and accessibility. Teams that embrace this hybrid model will ship faster, cut false positives, and be ready when AI vision finally rivals human-level perception.

Sources

[1] Top 10 Visual Testing Tools - Applitools: https://applitools.com/blog/top-10-visual-testing-tools/

[2] Automated Regression Testing with Visual AI - Applitools: https://applitools.com/blog/automated-regression-testing-with-visual-ai/

[3] How AI-Powered Computer Vision is Revolutionizing Software Testing: https://www.mabl.com/blog/how-ai-powered-computer-vision-is-revolutionizing-software-testing

[4] AI-Driven Test Automation Techniques for Multimodal Systems: https://www.testim.io/blog/ai-driven-test-automation-techniques/

[5] Vision AI and how testRigor uses it: https://www.testrigor.com/blog/vision-ai-how-testrigor-uses-it/

[6] Comparing Applitools vs BrowserStack Percy: https://www.browserstack.com/percy/compare-applitools

[7] AI in Software Testing: QA & Artificial Intelligence Guide: https://www.perfecto.io/blog/ai-in-software-testing

[8] How Vision-Based AI Agents Work in UI Test Automation - AskUI: https://www.askui.com/blog-posts/vision-ai-ui-testing

[9] Visual Regression Testing Market Report - Dataintelo: https://dataintelo.com/report/global-visual-regression-testing-market

[10] Tricentis Tosca - Vision AI: https://www.tricentis.com/products/automated-testing-tosca/vision-ai

[11] Visual Testing - Automated Visual Regression Testing - Functionize: https://www.functionize.com/visual-testing

[12] Baseline Management | Sauce Labs Documentation: https://docs.saucelabs.com/visual-testing/workflows/baseline-management/

[13] Using GPT-4o vision capabilities to automate web testing - Reddit: https://www.reddit.com/r/softwaretesting/comments/1cu95xj/using_gpt4o_vision_capabilities_to_automate_web/

[14] Smart Branching and Baseline Management: Transforming Visual: https://www.lambdatest.com/blog/smart-branching-and-baseline-management/

[15] Developing an Automated UI Controller using GPT Agents - AskUI: https://www.askui.com/blog-posts/developing-an-automated-ui-controller-using-gpt-agents-and-gpt-4-vision

[16] OpenAI gpt-4-vision-preview Pricing Calculator | API Cost Estimation: https://www.helicone.ai/llm-cost/provider/openai/model/gpt-4-vision-preview

[17] The Developer's Guide to UI Testing Automation with Llama 3.2: https://www.ionio.ai/blog/how-we-automate-ui-testing-with-multimodal-llms-llama-3-2-and-gemini-api

[18] AI in Software Testing Market Size, Share | CAGR of 18%: https://www.precedenceresearch.com/ai-in-software-testing-market

[19] Global Visual Regression Testing Market: Insights on Key Growth: https://www.linkedin.com/pulse/global-visual-regression-testing-market-insights-w4ztf/

[20] How to Automate UI Testing with Visual Verification - Keysight: https://www.keysight.com/us/en/solutions/automate-ui-testing-with-visual-verification.html

[21] Eggplant x Sauce Labs: from partnership to product integration: https://saucelabs.com/blog/eggplant-sauce-labs-partnership-to-product-integration

[22] Seeing is Believing: How AI is Transforming Visual Regression Testing: https://www.virtuosoqa.com/post/ai-is-transforming-visual-regression-testing