Before we dive into the nuts and bolts of prompts, phonetics, and lip-sync tricks, take a look at the final results, two AI-generated rap-battle videos that set the bar for what follows:

“Stanford vs. Gauntlet AI — Battle of the Software Education Titans”

“Tesla vs. Waymo — Vision-Only vs. LiDAR Showdown”

Seen them? Great. The rest of this guide reverse-engineers how each track, lyric sheet, and frame came together, so by the end you'll have every step (and every command) needed to craft your own AI-powered music video.

OpenAI o3 is usually my go-to architect. I use it to generate Product Requirement Documents and Implementation Plans to hand off to software engineering agents, though I've also had success with Grok 3 Think, Gemini 2.5 Pro, and Claude Opus 4. Likewise, I recommend a good reasoning model as the starting point for the keystone of any music video: the lyrics. Of course, context engineering is a prerequisite. For "Stanford vs. Gauntlet AI" I knew o3 was quite familiar with Stanford (and could use tools for specific searches), so I focused on steering it toward https://www.gauntletai.com/ to research that program. It did a wonderful job of weaving very specific domain knowledge and trivia about each program into the lyrics. For "Tesla vs. Waymo" I used my Nexus Agents to do deep research on the topic, then fed the prompt and the resulting report in as input to generate the lyrics.

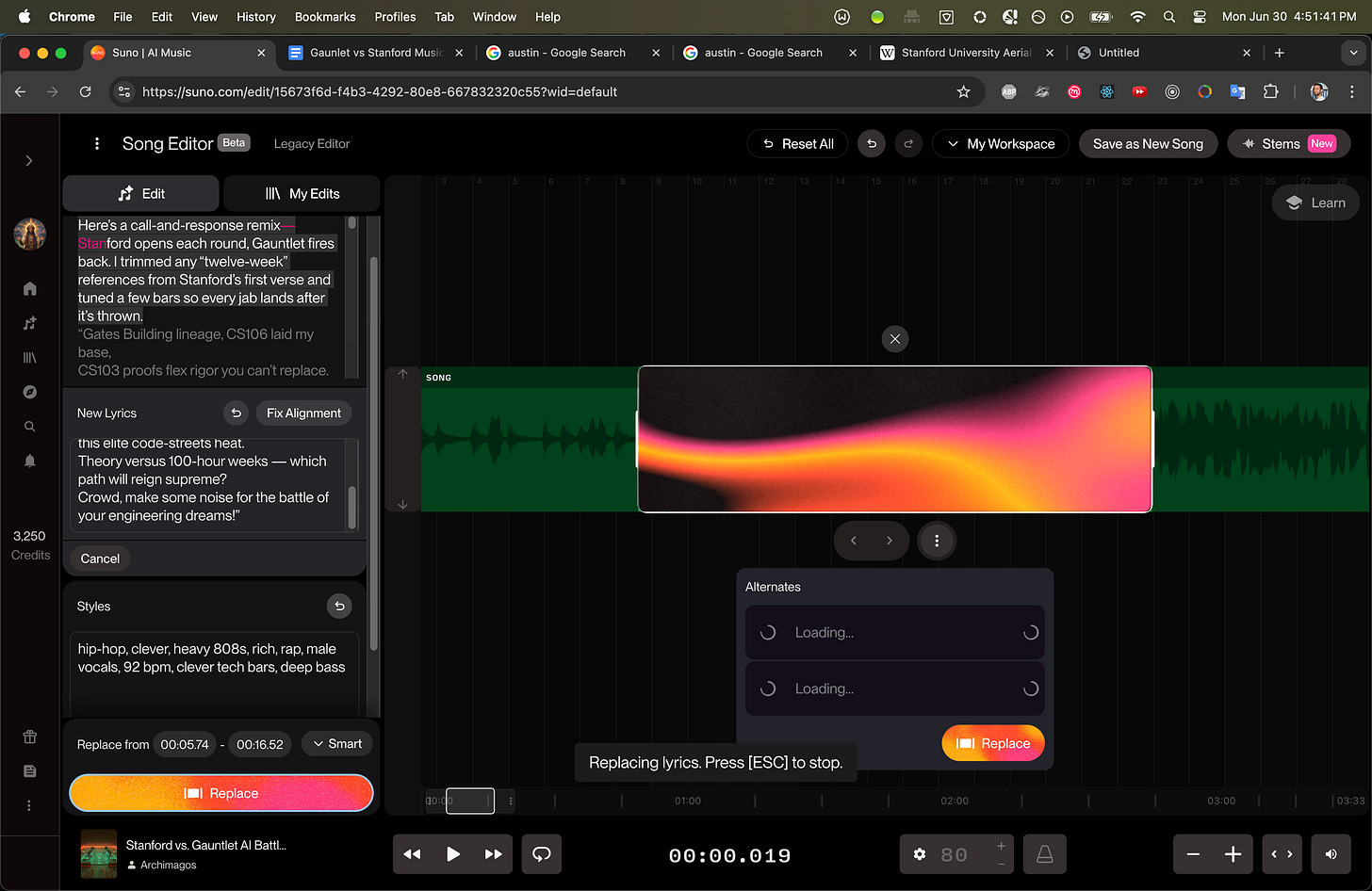
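If you assemble the context by hand, a small helper can bundle the brief and the research report into one prompt so the reasoning model sees everything at once. This is a minimal sketch, not the prompt used for either video: the function name build_lyrics_prompt and the instruction wording are hypothetical, and the report can be any plain-text or markdown file.

```bash
# Hypothetical helper: combine a battle title and a research report into a
# single prompt file for the lyrics model.
# Usage: build_lyrics_prompt "Tesla vs. Waymo" report.md > prompt.txt
build_lyrics_prompt() {
  topic=$1    # battle title
  report=$2   # path to the research report

  # Fail early rather than sending the model an empty context.
  if [ ! -r "$report" ]; then
    echo "report not readable: $report" >&2
    return 1
  fi

  # Instructions first, then the report inside explicit delimiters.
  cat <<EOF
Write lyrics for an AI rap battle titled "$topic".
Alternate verses between the two sides and use specific facts and trivia
from the research report below. Mark each verse with the speaker's name.

<report>
EOF
  cat "$report"
  printf '</report>\n'
}
```

Delimiting the report keeps the model from confusing research notes with instructions, which matters more as the report grows.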

Some back and forth to refine the lyrics, and we're off to Suno to generate the music.

Pro tip: spell jargon phonetically in the lyrics. I had to change LiDAR to "Lie-Dahr" so it would be pronounced correctly.

Next, you'll need the equivalent of a high "top-p": the key is to sample many generated songs until you find a banger. For "Stanford vs. Gauntlet AI" I was a stickler and kept trying, over a dozen times, until I found one good enough. For "Tesla vs. Waymo" I got lucky and struck gold on my third try.

Another pro tip: once you've identified the gem you want to export, first open its vertical-dots menu -> Create -> Remaster. A few moments later you'll have a much higher-quality mix.
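The phonetic-respelling tip is easy to automate once you have a glossary. A minimal sketch using sed: only "LiDAR" -> "Lie-Dahr" comes from this guide; the "Knuth" entry is a hypothetical addition (Knuth pronounces his name "ka-NOOTH"), and you would extend the list with your own terms.

```bash
# Respell jargon before pasting lyrics into Suno.
# Reads lyrics on stdin and writes the respelled lyrics to stdout.
# Each -e is one case-sensitive, global substitution.
phoneticize() {
  sed \
    -e 's/LiDAR/Lie-Dahr/g' \
    -e 's/Knuth/Kuh-nooth/g'   # hypothetical entry
}
```

Usage: phoneticize < lyrics.txt > lyrics_suno.txt. Keeping the original lyrics untouched and generating the respelled copy means the on-screen captions can still use the correct spelling.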

Now for the hard part: the video. Many text-to-video models and pipelines are available (including my ttv-pipeline), with advanced features like image-to-video, first+last frame, and segment chaining. There are also several audio-to-video models that generate "talking head" avatars. However, none of these is built for the demanding task of creating a music video. Music's continuity (rhythm, melody, timbre, harmony, etc.) makes it infeasible to stitch together audio segments from different sources. Instead, we need to generate video clips independently, each aligned with the audio's phrasing, subject matter, and feeling, and, in the most challenging cases, its spoken word.
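Aligning clips with the audio's phrasing is easier when you know how long each phrase lasts. Assuming you know the track's tempo in BPM (this is a planning aid, not part of the workflow above), phrase length in seconds is bars × beats per bar × 60 / BPM:

```bash
# Length of a musical phrase in seconds.
# Args: BPM, number of bars, beats per bar (defaults to 4, i.e. 4/4 time).
phrase_seconds() {
  bpm=$1
  bars=$2
  beats_per_bar=${3:-4}
  awk -v bpm="$bpm" -v bars="$bars" -v bpb="$beats_per_bar" \
    'BEGIN { printf "%.3f\n", bars * bpb * 60 / bpm }'
}
```

For example, phrase_seconds 90 4 gives the length of a four-bar phrase at 90 BPM (about 10.7 seconds), which tells you how much footage each cut needs to cover.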

For that last case, Google's Veo 3 shines as a text-to-video-with-speech model unlike anything else on the market. Even so, getting a spoken-word segment to line up with the audio track in perfect lip sync is not for the faint of heart. Sometimes you get lucky with a few tweaks; sometimes it takes a carefully tuned custom speed curve to get the timing to match. CapCut keeps this from being too painful.
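When a constant speed change is close enough (CapCut's custom curves are more flexible), FFmpeg can do the retime. This is a minimal sketch under that assumption: speed_factor and retime_clip are hypothetical helper names, and the durations you pass in come from measuring your own clip and the target vocal phrase.

```bash
# Speed factor needed so a clip of CLIP seconds plays in TARGET seconds.
# A result above 1 speeds the clip up; below 1 slows it down.
speed_factor() {
  awk -v clip="$1" -v target="$2" 'BEGIN { printf "%.4f\n", clip / target }'
}

# Constant-speed retime: setpts=PTS/FACTOR plays the video FACTOR times faster.
# -an drops the clip's own audio, since the song replaces it in the edit.
retime_clip() {
  in=$1
  out=$2
  factor=$3
  ffmpeg -y -i "$in" -filter:v "setpts=PTS/$factor" -an "$out"
}
```

Usage (hypothetical file names): retime_clip clip.mp4 clip_fast.mp4 "$(speed_factor 8 7.5)" squeezes an 8-second take into 7.5 seconds.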

For one of the clips, I experimented with tools such as Wav2Lip that manipulate an existing video to lip-sync it to an audio track. It was difficult to make the result fit the overall composition, and it felt a bit underwhelming, even anticlimactic, but I could see this being more impactful for longer clips when it works well. Here are the three best options I've found:

1. Wav2Lip (classic, still the easiest to drive)

Why use it? Ultra-simple CLI; runs on a single GPU; good sync accuracy.

Quick Start:

```bash
git clone https://github.com/Rudrabha/Wav2Lip
cd Wav2Lip
# the repo targets Python 3.6 (its pinned requirements predate newer versions)
conda create -n wav2lip python=3.6 -y && conda activate wav2lip
pip install -r requirements.txt
# download a checkpoint to checkpoints/ and the face-detection model
# to face_detection/detection/sfd/s3fd.pth (see repo README)
python inference.py \
  --checkpoint_path checkpoints/wav2lip_gan.pth \
  --face original_video.mp4 \
  --audio new_track.wav \
  --outfile lipsynced.mp4
```

The script auto-extracts audio if you pass a second video instead of new_track.wav.

Tips for crisp results

Add --pads 0 20 0 0 (top, bottom, left, right) if the chin is being cropped; it enlarges the bottom padding of the detected face box.

Keep the input at ≤720p, or downsample with --resize_factor 2 (the flag takes an integer) if you see artefacts.
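Because Wav2Lip's --resize_factor divides the frame size by an integer, you can pick the smallest factor that brings a clip down to 720p or below. A minimal sketch: the helper names are hypothetical, and video_height relies on ffprobe, which ships with FFmpeg.

```bash
# Smallest integer factor that brings HEIGHT down to 720 or below
# (integer ceiling of height / 720).
wav2lip_resize_factor() {
  height=$1
  echo $(( (height + 719) / 720 ))
}

# Height in pixels of the first video stream.
video_height() {
  ffprobe -v error -select_streams v:0 -show_entries stream=height \
    -of csv=p=0 "$1"
}
```

Usage: pass --resize_factor "$(wav2lip_resize_factor "$(video_height original_video.mp4)")" to inference.py, so a 1080p clip gets a factor of 2 and a 720p clip is left at 1.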

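When you're happy, merge the lip-synced video with the final audio mix in FFmpeg. The -map options take the video from the first input and the audio from the second, so FFmpeg can't pick the lip-synced file's own audio track instead:

```bash
ffmpeg -i lipsynced.mp4 -i final_audio.wav -map 0:v:0 -map 1:a:0 -c:v copy -c:a aac -shortest final_output.mp4
```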
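If new_track.wav should contain only the slice of the final song that a given clip covers, FFmpeg can cut it from the full mix. A minimal sketch: to_seconds and slice_audio are hypothetical helpers, and the timestamps are whatever you read off your editor's timeline.

```bash
# Convert "SS", "MM:SS" or "HH:MM:SS" (decimals allowed) into seconds.
to_seconds() {
  printf '%s\n' "$1" | awk -F: '{
    s = 0
    for (i = 1; i <= NF; i++) s = s * 60 + $i
    printf "%.3f\n", s
  }'
}

# Cut SONG from START to END into OUT.
# -ss is the start offset and -t the duration, both in seconds.
slice_audio() {
  song=$1
  start=$(to_seconds "$2")
  end=$(to_seconds "$3")
  out=$4
  dur=$(awk -v s="$start" -v e="$end" 'BEGIN { printf "%.3f\n", e - s }')
  ffmpeg -y -i "$song" -ss "$start" -t "$dur" "$out"
}
```

Usage (hypothetical timestamps): slice_audio final_audio.wav 1:12.0 1:20.0 new_track.wav.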

2. MuseTalk (v1.5, April 2025)

Why use it? Sharper visuals than Wav2Lip, 30 fps real-time on an NVIDIA Tesla V100, multilingual.

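```bash
git clone https://github.com/TMElyralab/MuseTalk
cd MuseTalk && bash download_weights.sh   # grabs all checkpoints
# set video_path and audio_path in configs/inference/test.yaml
# (e.g. original_video.mp4 and new_track.wav), then run:
bash inference.sh v1.5 normal
```

inference.sh takes only the model version and mode as arguments; it reads the input video and audio paths from configs/inference/test.yaml and writes the result under results/. MuseTalk was trained on 25 fps footage, so convert your clip to 25 fps first for the best sync.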

When to pick it:

You need crisper HD output, or you intend to run lip-syncing live (MuseTalk offers a realtime mode, bash inference.sh v1.5 realtime, suited to webcam/OBS pipelines).
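Since MuseTalk works best with 25 fps input, it helps to check a clip's frame rate before running it and convert only when needed. A minimal sketch with hypothetical helper names: ffprobe reports frame rates as fractions such as 30000/1001, so the first function turns that into a decimal.

```bash
# Turn an ffprobe rate such as "30000/1001" (or a plain "24") into a decimal.
fps_from_rational() {
  printf '%s\n' "$1" | awk -F/ '{
    d = (NF > 1) ? $2 : 1
    if (d == 0) { print "0.00"; next }   # ffprobe may report 0/0
    printf "%.2f\n", $1 / d
  }'
}

# Frame rate of the first video stream, as reported by ffprobe.
video_fps() {
  ffprobe -v error -select_streams v:0 -show_entries stream=r_frame_rate \
    -of default=noprint_wrappers=1:nokey=1 "$1"
}

# Re-time a clip to 25 fps, copying any audio unchanged.
to_25fps() {
  ffmpeg -y -i "$1" -vf fps=25 -c:a copy "$2"
}
```

Usage (hypothetical file names): [ "$(fps_from_rational "$(video_fps clip.mp4)")" = "25.00" ] || to_25fps clip.mp4 clip_25fps.mp4.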

3. LatentSync (ByteDance, diffusion-based)

Why use it? Highest visual fidelity (≥1080p); diffusion keeps skin texture pristine.

Open-source checkpoints dropped in Jan 2025. It runs locally through the inference script in the GitHub repo (bytedance/LatentSync) or through ready-made cloud endpoints on fal, Sieve, and Replicate. Expect slower inference than MuseTalk or Wav2Lip because of the diffusion sampler.

For source material in some image-to-video / frame-to-video clips, I turned to Wikipedia and Wikimedia Commons for royalty-free reference images (Gates Building, Donald Knuth, Jensen Huang, Jan Koum, Waymo). Many models refuse to take a famous person's image as input for video generation, so I had to get creative with other models: Higgsfield to animate Knuth, and Seedance (via Dreamina) to combine Huang and Koum.
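Before using a Commons image, it's worth checking its license. The MediaWiki API can return a file's original URL together with its license metadata. A minimal sketch: commons_info_url is a hypothetical helper, and it only converts spaces to underscores (which MediaWiki treats as equivalent in titles), so titles containing characters like & or # would still need proper URL encoding.

```bash
# Build a MediaWiki API query returning the original file URL plus license
# metadata (imageinfo with iiprop=url|extmetadata) for a Commons file title.
commons_info_url() {
  title=$(printf '%s' "$1" | tr ' ' '_')
  printf 'https://commons.wikimedia.org/w/api.php?action=query&format=json&prop=imageinfo&iiprop=url%%7Cextmetadata&titles=%s\n' "$title"
}
```

Usage (hypothetical title): curl -s "$(commons_info_url 'File:Example.jpg')" and look for LicenseShortName and Artist in the extmetadata section of the JSON, which tell you the license and who to credit.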

Conclusion

Creating an AI-driven music video is less a linear pipeline than a loop of experimentation where the right tool at each stage unlocks the next. Start with a reasoning-first language model (OpenAI o3 or one of its capable peers) to nail the lyrics, because everything downstream lives or dies on that foundation of context-rich words. Feed those lyrics into Suno, stay relentless in your sampling until the track “clicks,” and always remaster before you export.

From there, brace for the real puzzle: synchronizing visuals to audio. Text-to-video giants like Veo 3 can give you stunning spoken-word footage, but timing and lip-sync will fight you. Expect micro-edits in CapCut, and keep a trio of fallback lip-sync engines ready for surgical fixes. Reference imagery is fair game. Just plan for refusals on famous faces and have alternate models in your back pocket.

The overarching lesson? Treat every step (lyrics, music, video, sync) as iterative search. Sample widely, refine aggressively, and don’t hesitate to swap tools when one stalls. With the right mix of reasoning models, generative audio, and adaptive video techniques, you can move from raw prompt to polished, on-beat music video that feels handcrafted – without ever touching a traditional studio.