Iterative AI System for Universal Discovery

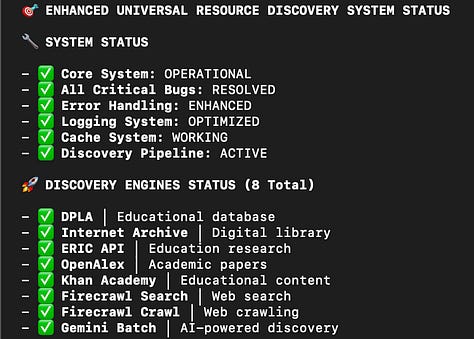

10-engine system learning from each run; LLM ‘orchestrator’; open source APIs for 2,000+ vetted resources; AI-driven build-while-learning approach enhanced with Google’s GenAI Processors architecture

Executive Summary

Our 10-engine discovery stack now surfaces 2,000+ resources per run (≈90% K-12 and higher-ed). Guided by an LLM orchestrator, it learns from each session, scores every item (1,328 scored above 0.7 on quality), and recovers 95% of failed links.

Firecrawl and OpenAlex supply most of the haul, while GenAI Processors - added in July 2025 - inject an AI-driven “planning phase” that lifts relevant hits by roughly 19% for only ~15% more tokens.

The architecture remains education-first today, yet every engine is swappable, so pointing the same pipeline at, say, arXiv + PubMed or SEC filings should require configuration rather than code.
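As a minimal sketch of what "configuration rather than code" could look like, assume each engine is a function that maps a query to a list of URLs. Engines register themselves by name, and a per-domain config simply lists which ones to run. The engine names, the stub URLs, and the `register`/`run_pipeline` helpers are hypothetical placeholders, not the system's actual code, and the stubs make no network calls.

```python
from typing import Callable, Dict, List

# Hypothetical engine signature: query in, list of URLs out.
Engine = Callable[[str], List[str]]

ENGINE_REGISTRY: Dict[str, Engine] = {}


def register(name: str) -> Callable[[Engine], Engine]:
    """Decorator that adds an engine to the registry under `name`."""
    def wrap(fn: Engine) -> Engine:
        ENGINE_REGISTRY[name] = fn
        return fn
    return wrap


# Stub engines standing in for real integrations (example domains, no network).
@register("firecrawl")
def firecrawl_stub(query: str) -> List[str]:
    return [f"https://k12.example/{query}"]


@register("openalex")
def openalex_stub(query: str) -> List[str]:
    return [f"https://scholar.example/{query}"]


@register("pubmed")
def pubmed_stub(query: str) -> List[str]:
    return [f"https://med.example/{query}"]


def run_pipeline(config: dict, query: str) -> List[str]:
    """Run every engine named in the config; this function never changes per domain."""
    results: List[str] = []
    for name in config["engines"]:
        results.extend(ENGINE_REGISTRY[name](query))
    return results


# Swapping domains means swapping configs, not editing run_pipeline.
EDUCATION = {"engines": ["firecrawl", "openalex"]}
MEDICAL = {"engines": ["openalex", "pubmed"]}
```

Under this design, retargeting the pipeline means writing one new engine and one new config entry; `run_pipeline` itself stays untouched.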

Introduction

I started from scratch - no school partners, no Common Core API access - yet was asked to map every grade 3-8 resource on the internet. My workaround was an aggressive “AI ping-pong”: draft with GPT, fact-hunt with Gemini, sanity-check with Claude, then build inside Cursor before refactoring in Claude Code. That sprint produced a K-12 powerhouse, but it also revealed something bigger: the orchestrator, learning loops, and multi-engine design are universal-ready. With GenAI Processors now front-loading topic planning, the same framework can explore other knowledge domains once different engines are slotted in - a road-test still to come, but the blueprint is here.

The Journey: From Educational Challenge to Universal Solution

Phase 0: The Challenge

I faced a challenge: build a system to discover and curate specific K-12 educational resources. The problem? I had zero experience in education. No connections to schools. No understanding of curriculum standards. Just a task and access to AI tools. Complicating matters, access to the official Common Core API was declined, forcing creative workarounds.

Phase 1: AI Ping-Pong

The “AI ping-pong” methodology emerged naturally. I started by having the LLMs debate the best approach, resources, and APIs to begin with. Then I used GPT for initial planning and architecture ideas and bounced to Gemini and Grok for domain research and educational insights. o3 helped with complex reasoning about system design, and then I’d return to GPT to refine the plan based on all these insights.

Through this process, I expanded my seed URL list to 159 educational sites while simultaneously building the crawlers to process them.

Phase 2: The Iterations

I realized the most important next step: the process needed to improve iteratively using previous data. I always save the outputs from failed or partially successful runs, and I had accumulated so much data that most new runs simply re-crawled the same sites. That was wasteful, so I built three pre- and post-processors:

The analyzer of the existing data before each run

The real-time deduplicator and sorter of new sources to crawl

The integrator of all the new data into the existing data

The result is an ever-growing dataset that avoids duplication by filtering out already-explored links and resources as the engines run.
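The three processors above can be sketched as follows, assuming runs are stored as plain lists of URLs and that URLs are compared after light normalization (lowercased host, no `www.`, no trailing slash, query string and fragment dropped). The function names and normalization rules are illustrative assumptions, not the system's actual implementation.

```python
from typing import Iterable, List, Set
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url: str) -> str:
    """Canonicalize a URL so trivial variants compare equal (assumed rules)."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    # Dropping query strings and fragments is an assumption; some sites need them.
    return urlunsplit((parts.scheme.lower(), host, path, "", ""))


def analyze_existing(previous_runs: Iterable[Iterable[str]]) -> Set[str]:
    """Pre-processor: collect every URL already seen in earlier runs."""
    return {normalize_url(u) for run in previous_runs for u in run}


def filter_new(candidates: Iterable[str], seen: Set[str]) -> List[str]:
    """Real-time deduplicator: keep only unseen URLs, preserving crawl order."""
    fresh: List[str] = []
    for url in candidates:
        key = normalize_url(url)
        if key not in seen:
            seen.add(key)  # mutates `seen`, so duplicates within this batch are caught too
            fresh.append(url)
    return fresh


def integrate(dataset: List[str], fresh: List[str]) -> List[str]:
    """Post-processor: append newly found URLs to the cumulative dataset."""
    return dataset + fresh
```

For example, with `https://www.k12.example/a/` already on file, a new candidate `https://k12.example/a` is skipped because both normalize to the same key.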

Phase 3: The Orchestrator

At this point, with so many steps and engines, my human brain could no longer monitor all the outputs and evaluate the validity of each step’s or engine’s results. This led me to build what I called The Orchestrator - the brain that coordinates multiple engines intelligently, adjusting the instructions each engine uses in real time as steps are completed.
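A minimal sketch of the orchestration loop described above, assuming each engine is a function from an instruction string to a list of results. In the article's system an LLM evaluates outputs and rewrites instructions; here those roles are plain callables (`evaluate`, `refine`) so the control flow is visible. All names, the 0.7 threshold, and the three-round limit are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Engine = Callable[[str], List[str]]       # instruction -> results
Evaluator = Callable[[List[str]], float]  # results -> score in [0, 1]
Refiner = Callable[[str, float], str]     # (instruction, score) -> revised instruction


@dataclass
class Orchestrator:
    engines: Dict[str, Engine]
    evaluate: Evaluator   # stand-in for an LLM judging an engine's output
    refine: Refiner       # stand-in for an LLM rewriting the engine's instructions
    threshold: float = 0.7
    max_rounds: int = 3
    log: List[str] = field(default_factory=list)

    def run(self, instructions: Dict[str, str]) -> Dict[str, List[str]]:
        outputs: Dict[str, List[str]] = {}
        for name, engine in self.engines.items():
            instruction = instructions[name]
            for round_no in range(1, self.max_rounds + 1):
                results = engine(instruction)
                score = self.evaluate(results)
                self.log.append(f"{name} round {round_no}: score={score:.2f}")
                if score >= self.threshold or round_no == self.max_rounds:
                    break
                # Output judged weak: revise this engine's instruction and retry.
                instruction = self.refine(instruction, score)
            outputs[name] = results  # last attempt is kept even if below threshold
        return outputs
```

For instance, an `evaluate` that returns the fraction of on-topic results and a `refine` that appends a grade-band hint would let the orchestrator retry a weak Firecrawl query before moving on to the next engine.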

Phase 3.5: Claude Code

As the codebase grew with OpenAlex integration, the ERIC API, Khan Academy scraping, and multiple Firecrawl endpoints, Cursor started struggling. Switching to Claude Code, at a colleague’s suggestion, was transformative: with a strong collaborator like Claude Code, the architectural overhaul became possible.

Phase 4: From Specific to Universal-Ready

The real transformation came when I realized the education-specific tool I’d built revealed universal-ready bones. The orchestration pattern, the multi-engine architecture, the progressive learning - none of these were inherently educational. They were patterns for intelligent discovery in other knowledge domains.

Phase 5: System Maturation

The system underwent major refinements (advanced PDF processing and a zero-data-loss architecture) along with some smaller ones (a repository cleanup that removed 120+ obsolete files, and an enhanced CSV export with confidence scoring).
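A sketch of a CSV export with confidence scoring, using Python's standard `csv` module. The column set (`url`, `title`, `quality`, `confidence`) and the optional minimum-confidence filter are assumptions for illustration; the article does not describe the real export schema.

```python
import csv
import io
from typing import Dict, List

FIELDS = ["url", "title", "quality", "confidence"]  # hypothetical export columns


def export_csv(resources: List[Dict], min_confidence: float = 0.0) -> str:
    """Return CSV text with the most confident resources first."""
    kept = [r for r in resources if r["confidence"] >= min_confidence]
    kept.sort(key=lambda r: r["confidence"], reverse=True)
    buf = io.StringIO()
    # extrasaction="ignore" drops any extra keys a record carries.
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for r in kept:
        # Scores are written with two decimals for readability.
        writer.writerow({**r, "quality": f"{r['quality']:.2f}",
                         "confidence": f"{r['confidence']:.2f}"})
    return buf.getvalue()
```

Sorting by confidence puts the rows a reviewer should trust most at the top of the file.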

Phase 6: The Extra Pivot

Just as the system matured, Google released GenAI Processors (July 2025). Recognizing its potential, I immediately integrated it - not to replace the existing system, but to enhance it. Initial post-integration test runs still skewed toward our education sources, suggesting that universality depends on future engine swaps rather than code rewrites. This led to the creation of the Enhanced Discovery system: a 4-phase pipeline that combines AI-powered discovery planning with proven multi-engine execution.

The integration brought sophisticated AI planning, but results still show an education bias: Mathematics (632), ELA (430), Science (320), and Social Studies (267) together account for 1,649 of the 2,156 resources discovered. Grade levels similarly cluster in grades 3-8 (between 197 and 269 resources per grade), reflecting the system’s K-12 origins.

Universal Discovery System Enhanced

The Three Discovery Modes

The system now offers three powerful discovery modes, each serving different needs:

🏆 Enhanced Discovery (Recommended):

Combines GenAI Processors intelligence with 10-engine discovery

4-phase pipeline: GenAI Analysis → Traditional Discovery → Enhanced Analysis → Enhanced Export

Best-of-both-worlds approach (adds ~15% token cost over Traditional)

Session-tracked for complete traceability

🤖 GenAI Processors Discovery:

Pure AI-powered discovery analysis

Advanced topic generation and research synthesis

Perfect for discovery planning and optimization

Fast analysis without full discovery execution

🏭 Traditional Discovery:

Original 10-engine system (remains optimized for K-12 contexts and is the only mode validated at scale to date)

Battle-tested and proven

Progressive learning and deduplication

For users with established workflows

Note: The system began as a K-12 discovery tool, and most engines/APIs (e.g., ERIC, OpenAlex) remain academia-centric; adaptability to other domains is planned but not yet validated. Without Common Core API access, we rely on web-scraped lesson plan links and standalone PDF standards.

The 4-Phase Enhanced Pipeline

The Enhanced Discovery system chains four phases: GenAI Analysis → Traditional Discovery → Enhanced Analysis → Enhanced Export.
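The four phases, and how the three discovery modes could select among them, can be sketched with plain `asyncio`. This is not the GenAI Processors API: every phase here is a placeholder coroutine that passes a shared state dictionary along, and the mode-to-phase mapping (especially which phases the GenAI and Traditional modes run) is an assumption based on the mode descriptions above.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

State = Dict[str, Any]
Phase = Callable[[State], Awaitable[State]]

# Placeholder phases: none of them call GenAI Processors, an LLM, or a real engine.


async def genai_analysis(state: State) -> State:
    # Planning: an LLM would expand the seed into sub-topics; here, two fixed ones.
    state["topics"] = [f"{state['seed']} {s}" for s in ("basics", "practice")]
    return state


async def traditional_discovery(state: State) -> State:
    # Discovery: fall back to the bare seed when no planning phase ran.
    topics = state.get("topics", [state["seed"]])
    state["resources"] = [f"https://example.org/{t.replace(' ', '-')}" for t in topics]
    return state


async def enhanced_analysis(state: State) -> State:
    # Scoring: attach a fixed placeholder score to each resource.
    state["scored"] = [(url, 0.8) for url in state["resources"]]
    return state


async def enhanced_export(state: State) -> State:
    # Export: record how many scored resources would be written out.
    state["exported"] = len(state["scored"])
    return state


# Assumed mapping from discovery mode to the phases it runs.
MODES: Dict[str, List[Phase]] = {
    "enhanced": [genai_analysis, traditional_discovery, enhanced_analysis, enhanced_export],
    "genai": [genai_analysis],
    "traditional": [traditional_discovery],
}


async def run(mode: str, seed: str) -> State:
    state: State = {"seed": seed}
    for phase in MODES[mode]:
        state = await phase(state)  # each phase hands its output to the next
    return state
```

A full Enhanced run would then be `asyncio.run(run("enhanced", "fractions"))`; because phases share one state shape, running any subset of them is a matter of choosing a different list.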

Discovery Metrics (July 2025 Run)

My latest run processed 2,156 resources, one per unique URL, of which 1,328 were high-quality (quality > 0.71) and passed a high-confidence threshold (confidence > 0.79). The primary engines were Firecrawl (1,060 URLs) and OpenAlex (505 URLs); across the run, the average quality score was 0.78 and the recovery rate was 95%.
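The headline metrics can be computed as below, assuming each resource record carries `quality` and `confidence` scores and that the recovery rate is recovered links divided by failed links. The record schema and the `summarize` helper are hypothetical; the thresholds are the ones quoted for this run.

```python
from statistics import mean
from typing import Dict, List

QUALITY_MIN = 0.71     # thresholds quoted for the July 2025 run
CONFIDENCE_MIN = 0.79


def summarize(resources: List[Dict[str, float]], failed: int, recovered: int) -> Dict[str, float]:
    """Headline metrics from per-resource scores (hypothetical record schema)."""
    high = [r for r in resources
            if r["quality"] > QUALITY_MIN and r["confidence"] > CONFIDENCE_MIN]
    return {
        "total": len(resources),
        "high_quality": len(high),
        "avg_quality": round(mean(r["quality"] for r in resources), 2),
        # Share of failed links that were later recovered.
        "recovery_rate": recovered / failed if failed else 1.0,
    }
```

A resource must clear both thresholds to count as high-quality, which is why the high-quality count can be well below the total even when the average quality is 0.78.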

The 11 Enhancement Systems

The system now features 11 major enhancements:

Deep Metadata Extraction: OpenAI & Gemini dual-LLM analysis

Intelligent Content Filtering: 5-layer AI-powered filtering

Quality Scoring System: Multi-factor quality assessment (0.78 average score remains the primary metric)

Semantic Deduplication: Advanced duplicate detection

Gap Detection & Analysis: Coverage gap identification

Smart API Batching: Efficient API utilization

Multi-Format Reporting: Comprehensive export options

Advanced PDF Processing: AI-powered PDF evaluation

Resource Recovery System: Zero data loss architecture (95% recovery rate)

GenAI Processors Integration: Modern async pipeline architecture

Enhanced Discovery Pipeline: 4-phase AI + traditional combination leveraging confidence scores (CSV export shows 9 resources > 0.7 confidence)
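The article does not publish its quality formula, so the following is only a sketch of one common way to build a multi-factor score: a weighted average of per-factor scores, each clamped to [0, 1]. The factor names and weights are hypothetical.

```python
from typing import Dict

# Hypothetical factors and weights; not the system's actual scoring model.
WEIGHTS: Dict[str, float] = {
    "relevance": 0.4,   # topical match to the requested subject/grade
    "authority": 0.3,   # e.g. a known educational or academic domain
    "freshness": 0.15,  # recency of the content
    "format": 0.15,     # usable format (lesson plan, PDF, worksheet)
}


def quality_score(factors: Dict[str, float]) -> float:
    """Weighted average of factor scores; missing factors count as 0."""
    total = sum(WEIGHTS.values())
    raw = sum(w * max(0.0, min(1.0, factors.get(k, 0.0))) for k, w in WEIGHTS.items())
    return round(raw / total, 3)
```

Dividing by the weight total keeps the score in [0, 1] even if the weights are later retuned and no longer sum to exactly 1.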

Engine Performance Breakdown

The latest run shows clear engine specialization:

Firecrawl: 1,060 resources (49%) - Web scraping powerhouse

OpenAlex: 505 resources (23%) - Academic literature focus

OpenAI + Gemini Web Searches: 289 resources (13%) - Broad discovery

Claude Web Search: 195 resources (9%) - Quality verification

Academic APIs (OpenAlex, ERIC) and K-12-friendly sources (Khan Academy, Firecrawl) dominate, reinforcing the education bias despite universal-ready architecture.
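The percentages follow directly from the counts and the 2,156 total. The four listed engines account for 2,049 resources; the remaining 107 (about 5%) come from engines not broken out here.

```python
TOTAL = 2156
engine_counts = {
    "Firecrawl": 1060,
    "OpenAlex": 505,
    "OpenAI + Gemini Web Searches": 289,
    "Claude Web Search": 195,
}

# Percentage of the run's resources contributed by each listed engine.
shares = {name: round(100 * n / TOTAL) for name, n in engine_counts.items()}
listed = sum(engine_counts.values())   # resources from the four listed engines
other = TOTAL - listed                 # resources from all other engines

print(shares)  # {'Firecrawl': 49, 'OpenAlex': 23, 'OpenAI + Gemini Web Searches': 13, 'Claude Web Search': 9}
print(listed, other, round(100 * other / TOTAL))  # 2049 107 5
```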

Real-World Performance Evolution

The Enhanced Discovery system shows significant improvements:

Educational Discovery (Original Domain):

2,156 unique education-heavy resources discovered

1,328 high-quality candidate resources (quality > 0.7)

Better topic coverage from AI planning

Grade distribution confirms K-12 focus: Grades 3-8 account for 1,360 resources

Medical Research (Domain Test):

More focused discovery with AI topic generation

Reduced noise through intelligent pre-planning

Note: Limited validation due to academia-heavy APIs

Technical Documentation (Latest Test):

AI understands version-specific requirements

Improved discovery of implementation examples

Adaptation remains theoretical without domain-specific APIs

VP’s Perspective

The only code worth writing is code that survives its first use case. I started with a K-12 discovery workhorse, then bolted on GenAI Processors and watched it outgrow its brief.

That’s the playbook: solve the narrow problem so well that the same skeleton can flex into medicine, finance, or whatever tomorrow throws at us - no rewrites, just engine swaps.

Human-architected → AI-enhanced → Domain-specific → Universal → AI-improved

Lessons from Continuous Evolution

The Integration Mindset

Preserve What Works: The traditional 10-engine system remains intact

Enhance What’s Possible: GenAI Processors adds new capabilities

Maintain Compatibility: All three modes (Enhanced, GenAI, Traditional) coexist

Enable Choice: Users can run any combination of phases

Working Around Limitations

No Common Core API: Built workarounds using web-scraped lesson plans and PDF standards

Academia-Heavy APIs: Leveraged OpenAlex (505 resources) and ERIC for academic content

K-12 Web Sources: Firecrawl (1,060 resources) became primary discovery engine

Result: 2,156 resources discovered despite API limitations

The Power of Timing

Integrating GenAI Processors immediately after release showed:

Staying current with technology trends

Rapid evaluation and integration skills

Architectural flexibility to absorb new patterns

Commitment to continuous improvement

Conclusion: The Journey Never Ends

I started with zero educational knowledge and built a system that discovered 2,156 educational resources (1,328 high-quality). That system evolved into a universal-ready orchestrator, though current empirical results remain decisively education-focused, with Mathematics (632), ELA (430), Science (320), and Social Studies (267) dominating. Then came the GenAI Processors integration, creating an enhanced system that combines AI planning with multi-engine execution.

The journey from education-specific to domain-universal to AI-enhanced wasn’t planned - it emerged from a commitment to continuous improvement and architectural flexibility. While the system architecture supports universal discovery, the current implementation remains academia-centric due to the available APIs and the original K-12 focus. Universal potential is architectural, not yet field-tested; future pilots will hinge on swapping in domain-specific engines and data partners.

The future isn’t just AI-assisted development. It’s building systems that transcend their original purpose, grow from specific solutions into universal capabilities, and continuously evolve with the state of the art. The Enhanced Discovery system proves that with the right architecture, every new technology becomes an opportunity for enhancement, not replacement.

Appendix A: Clear Business Value (Edit)

Thanks to a series of inquisitive comments, I realised I needed to state the business value clearly.

How does this compare to OpenAI, Grok, and Perplexity Deep Research? We pull 20×–40× more sources, take ~30 min per run versus ~8 min, dedupe and score thousands of links, and offer both full Enhanced runs and shorter human-steered loops.

Could we just scale gpt-researcher? A single model, even multiplied, lacks Firecrawl’s web scraping, OpenAlex’s academic lens, and PDF depth, plus Gemini planning, Claude verification, OpenAI scoring, and our AI Orchestrator for planning, deduplication, recovery, and iterative learning.

Time & cost for 1,000 sources? ~30 min → ~10,000 links → 2,156 uniques → 1,328 high-quality CSV exports. Deep Research fetches 27 links; our system mapped 2,156 with confidence scores.

Bottom line: modular, multi-engine, AI-orchestrated pipeline delivers ~50× the high-quality hits for similar cost/time.
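The funnel figures can be checked with simple arithmetic. Note that the "~50×" claim compares the 1,328 high-quality hits with the 27 links Deep Research fetched, which works out to about 49×; the 10,000 figure is approximate.

```python
links_fetched = 10_000       # approximate, per the "~10,000 links" figure
unique = 2_156
high_quality = 1_328
deep_research_links = 27

dedup_yield = unique / links_fetched             # share of fetched links that were unique
quality_yield = high_quality / unique            # share of uniques scored high-quality
advantage = high_quality / deep_research_links   # high-quality hits vs. Deep Research links

print(f"{dedup_yield:.1%} unique, {quality_yield:.1%} high-quality, {advantage:.0f}x")
# 21.6% unique, 61.6% high-quality, 49x
```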

Link to the results available on request.

How does this compare to OpenAI, Grok, and Perplexity "Deep Research" modes? You pulled 20x-40x more sources--did that translate to results that were 20x-40x better? Did you need to crawl that much data? How long did it take? Token count? If this targets scientists, do we want the system to run once, or should it do shorter, more focused searches with a human steering the process, like deep research usually works?